Fourier transform, convolution and D2NN

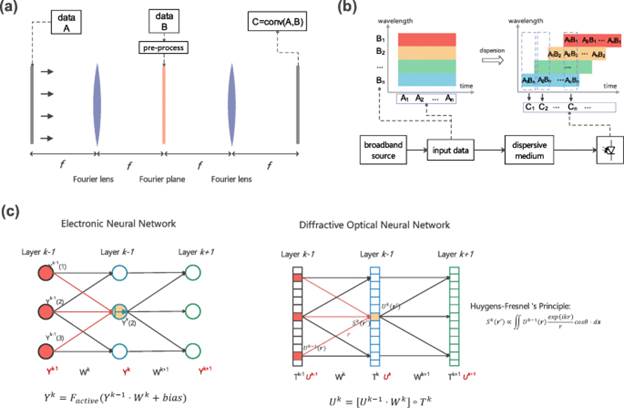

VMM is a universal operator that can perform complex computing tasks such as the FT and convolution, but only at the cost of additional clock cycles. By exploiting the inherent parallelism of photons, however, these tasks can be accomplished in a single ‘clock cycle’. In theory, the transformation of a coherent light wave by an ideal lens is equivalent to an FT. Based on this concept, a 4F system (Fig. 2(a)) can be used to perform convolution. Since convolution is the heaviest computational burden in a CNN, Wetzstein et al. explored an optical-electrical hybrid CNN based on the 4F system. The weights of the trained CNN were loaded onto several passive phase masks by carefully designing the effective point spread function of the 4F system. Classification accuracies of 90%, 78% and 45% were achieved on the MNIST, QuickDraw and CIFAR-10 standard datasets, respectively. Recently, Sorger et al. demonstrated that the optical-electrical hybrid CNN still works well if the phase information in the Fourier filter plane is discarded. In Sorger’s demonstration, the CNN weights were loaded directly as amplitudes via a high-speed DMD in the filter plane. However, it remains disputable in theory whether an amplitude-only filter can achieve the reported 98% and 54% classification accuracy on MNIST and CIFAR-10.
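The lens-as-FT picture can be illustrated numerically. The following is a minimal sketch, not the implementation used in the cited works: it assumes an idealized, lossless 4F system sampled on a periodic grid, so that each lens is modeled by a discrete 2D FFT and the Fourier-plane mask is simply the FT of the kernel. The function names `four_f_convolve` and `circular_convolve_direct` and the array sizes are hypothetical and chosen for illustration only.

```python
import numpy as np

def four_f_convolve(field, kernel):
    """Idealized 4F system: lens -> Fourier-plane mask -> lens."""
    # First lens: the field in the Fourier plane is the 2D FT of the input.
    spectrum = np.fft.fft2(field)
    # Fourier-plane mask: the FT of the kernel (the effective point spread
    # function), zero-padded to the size of the input field.
    mask = np.fft.fft2(kernel, s=field.shape)
    # Second lens: a physical lens applies another forward FT, which gives a
    # coordinate-flipped output. The inverse FFT is used here to undo the flip.
    return np.fft.ifft2(spectrum * mask)

def circular_convolve_direct(field, kernel):
    """Reference result: circular convolution as a sum of shifted copies."""
    out = np.zeros(field.shape, dtype=complex)
    for i in range(kernel.shape[0]):
        for j in range(kernel.shape[1]):
            # np.roll shifts the field by (i, j) with wrap-around.
            out += kernel[i, j] * np.roll(field, shift=(i, j), axis=(0, 1))
    return out

# Assumed toy data: an 8x8 input and a 3x3 kernel.
rng = np.random.default_rng(0)
field = rng.random((8, 8))
kernel = rng.random((3, 3))

optical = four_f_convolve(field, kernel)
reference = circular_convolve_direct(field, kernel)
print(np.allclose(optical, reference))
```

In this idealized model the whole convolution costs one multiplication per Fourier-plane pixel, which is the sense in which the optical system completes it in a single pass. A real system additionally has a finite aperture, a non-periodic field and intensity-only detection, none of which are modeled here.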

Fig. 2

Complex matrix manipulation in optical computing. (a) 4F system. The two gray bars represent the input data (A) and the convolution results (C). Each convex lens is a Fourier lens that implements the Fourier transform. The orange bar represents a matrix. (b) Schematic of an optical convolution processor based on the dispersion effect. (c) Schematic of a diffractive deep neural network with multiple layers of passive diffractive planes.

Apart from the 4F-based schemes mentioned above, there are other ways to realize the FT and convolution optically. Since a conventional lens is a bulky device, several types of effective lens, such as gradient-index structures, metasurfaces and inverse-designed diffractive structures, are considered as alternative devices for implementing the FT because of their compact size. However, the computing accuracy of these novel approaches has not yet been fully investigated. Besides effective lenses, an integrated optical fast Fourier transform (FFT) approach based on silicon photonics has also been proposed by Sorger et al. That work gives a systematic analysis of speed and power consumption and identifies the advantages of the integrated optical FFT over a P100 GPU (graphics processing unit).

Besides Fourier-lens implementations in the space domain, the FT can also be implemented in the time domain when the data are input serially. The dispersion effect, caused by the propagation of multi-wavelength light in a dispersive medium, has been treated as a ‘time lens’ to perform the FT. Recently, this scheme has been extended to CNN co-processing by loading the weight data in the wavelength domain and the feature-map data in the time domain. As shown in Fig. 2(b), the rectangular data block is sheared because the spectrum disperses in the dispersive medium, and the convolution results are finally detected with a wide-spectrum detector. In one reported implementation, an effective performance of ~5.6 TMAC/s and 88% accuracy for MNIST recognition were achieved by simultaneously exploiting the time, wavelength and space dimensions enabled by an integrated microcomb source.
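The shearing of the data block and the summation at the detector can be sketched as follows. This is an illustrative model under stated assumptions, not the cited experimental system: the dispersive medium is assumed to delay wavelength channel k by exactly k symbol periods, the detector is assumed to sum all wavelengths in each time slot, and noise and loss are ignored. The function name `dispersive_convolve` and the sample values are hypothetical.

```python
import numpy as np

def dispersive_convolve(x, w):
    """Convolve a serial data stream x with weights w carried on wavelengths."""
    x = np.asarray(x, dtype=float)
    w = np.asarray(w, dtype=float)
    n_t, n_w = len(x), len(w)
    # Rows are wavelength channels, columns are time slots at the detector.
    grid = np.zeros((n_w, n_t + n_w - 1))
    for k in range(n_w):
        # Channel k carries the data stream scaled by w[k]; dispersion delays
        # it by k symbol periods, which shears the rectangular data block.
        grid[k, k:k + n_t] = w[k] * x
    # The wide-spectrum detector sums over all wavelengths in each time slot.
    return grid.sum(axis=0)

# Assumed toy data: four time samples and three wavelength-encoded weights.
x = np.array([1.0, 2.0, 3.0, 4.0])
w = np.array([0.5, 1.0, 2.0])
y = dispersive_convolve(x, w)
print(np.allclose(y, np.convolve(x, w)))
```

Each detected time slot is the sum over k of w[k] times x[t - k], which is a discrete convolution. A CNN layer usually computes a correlation instead, which corresponds to loading the weights in reversed order.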

In 2018, Ozcan et al. proposed a new type of network, the diffractive deep neural network (D2NN), for optical machine learning. This optical network comprises multiple diffractive layers, where each point on a given layer acts as a neuron with a complex-valued transmission coefficient. According to the Huygens-Fresnel principle, wave propagation between layers can be regarded as a fully connected network of these neurons (Fig. 2(c)). Although no activation layer was implemented, experimental testing at 0.4 THz gave classification accuracies of 91.75% and 81.1% for MNIST and Fashion-MNIST, respectively. One year later, numerical work showed that the accuracy could be improved to 98.6% and 91.1% for the MNIST and Fashion-MNIST datasets, respectively. That work also demonstrated 51.4% accuracy on the grayscale CIFAR-10 dataset. Besides classification on MNIST and CIFAR, modified D2NNs have also been demonstrated for salient object detection (numerical result, F-measure of 0.726 on a video sequence) and human action recognition (> 96% experimental accuracy on the Weizmann and KTH databases).
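The forward pass of such a network can be sketched numerically as alternating free-space propagation and pointwise multiplication by each layer's transmission coefficients. The code below is a minimal illustration, not the authors' implementation: it swaps the Huygens-Fresnel summation for the equivalent angular spectrum method, uses untrained random phase-only layers, and ignores the wrap-around introduced by the FFT. The wavelength of 0.75 mm follows from the 0.4 THz source; the grid size, pixel pitch, layer spacing and number of layers are assumptions made for illustration.

```python
import numpy as np

def propagate(field, wavelength, pitch, distance):
    """Free-space propagation of a square field by the angular spectrum method."""
    n = field.shape[0]
    fx = np.fft.fftfreq(n, d=pitch)  # spatial frequencies in cycles per unit length
    fxx, fyy = np.meshgrid(fx, fx, indexing="ij")
    # Longitudinal wavenumber; it becomes imaginary for evanescent components,
    # so those components decay with distance instead of propagating.
    arg = (1.0 / wavelength) ** 2 - fxx ** 2 - fyy ** 2
    kz = 2 * np.pi * np.sqrt(arg.astype(complex))
    transfer = np.exp(1j * kz * distance)
    return np.fft.ifft2(np.fft.fft2(field) * transfer)

def d2nn_forward(field, transmissions, wavelength, pitch, spacing):
    """Pass a field through the diffractive layers and return detector intensity."""
    for t in transmissions:
        field = propagate(field, wavelength, pitch, spacing)
        # Each pixel is a neuron with a complex-valued transmission coefficient.
        field = field * t
    field = propagate(field, wavelength, pitch, spacing)
    # The detector records intensity only.
    return np.abs(field) ** 2

# Assumed parameters (all lengths in mm): 0.4 THz corresponds to 0.75 mm.
wavelength, pitch, spacing = 0.75, 0.4, 30.0
rng = np.random.default_rng(0)
# Assumed input: a uniformly illuminated square aperture on a 64x64 grid.
field0 = np.zeros((64, 64), dtype=complex)
field0[24:40, 24:40] = 1.0
# Five untrained phase-only layers (hypothetical; a trained network would
# optimize these phases by error back-propagation).
masks = [np.exp(1j * 2 * np.pi * rng.random((64, 64))) for _ in range(5)]
out = d2nn_forward(field0, masks, wavelength, pitch, spacing)
print(out.shape, out.sum() <= (np.abs(field0) ** 2).sum() * (1 + 1e-9))
```

Because every output pixel receives a contribution from every pixel of the preceding layer, propagation between layers plays the role of a fixed, fully connected linear map, while the trainable parameters sit in the transmission coefficients. In a classifier, the output intensity would be integrated over one detector region per class and the brightest region taken as the prediction.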