Object detection for self-driving cars – Part 4

Tackling Multiple Detection

Threshold Filtering

The YOLO object detection algorithm predicts multiple overlapping bounding boxes for a given image. Not all of these boxes contain an object of interest (e.g. a pedestrian, bike, car or truck), so we need to filter out the ones that don’t. To do this, we monitor the value of pc, i.e., the probability or confidence that an object of one of the target classes is present in the bounding box. If the value of pc is less than a threshold value, we remove that bounding box from the predicted bounding boxes. This threshold may vary from model to model and serves as a hyper-parameter of the model.

If the predicted target variable is defined as:

$$\hat{y} = \begin{bmatrix} p_c & b_x & b_y & b_h & b_w & c_1 & c_2 & \dots & c_8 \end{bmatrix}^T \tag{6}$$

then discard all bounding boxes where the value of pc < threshold value. The following code implements this approach.

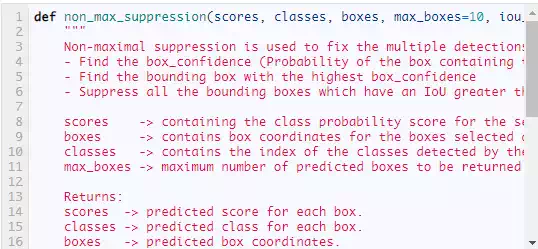
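For readers who prefer plain text, the filtering step can also be sketched with NumPy. This is a minimal illustration rather than the code shown in the image: the function name `filter_by_confidence`, the threshold of 0.6 and the sample predictions are all assumptions made for the example, and each row is assumed to follow the layout of the target variable above (pc first, then the box coordinates, then the class scores).

```python
import numpy as np

def filter_by_confidence(predictions, threshold=0.6):
    """Keep only the predictions whose confidence pc meets the threshold.

    predictions: array of shape (N, 5 + num_classes); each row is assumed to be
                 [pc, bx, by, bh, bw, c1, ..., cK].
    threshold:   hypothetical default; in practice it is a tuned hyper-parameter.
    """
    predictions = np.asarray(predictions, dtype=float)
    keep = predictions[:, 0] >= threshold  # column 0 holds pc
    return predictions[keep]

# Three made-up predictions with two class scores each.
preds = np.array([
    [0.90, 0.5, 0.5, 0.2, 0.3, 0.8, 0.2],
    [0.30, 0.4, 0.6, 0.1, 0.1, 0.6, 0.4],  # pc < 0.6, so this row is discarded
    [0.75, 0.2, 0.3, 0.4, 0.2, 0.1, 0.9],
])
kept = filter_by_confidence(preds, threshold=0.6)
print(kept.shape)  # (2, 7)
```

Raising the threshold removes more boxes at the risk of dropping true detections, which is why it is treated as a hyper-parameter.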

Non-max Suppression

Even after filtering by thresholding over the class scores, we may still end up with many overlapping bounding boxes. This is because the YOLO algorithm may detect the same object multiple times, which is one of its drawbacks. A second filter called non-maximal suppression (NMS) is used to remove these duplicate detections. Non-max suppression uses ‘Intersection over Union’ (IoU), the area of overlap between two boxes divided by the area of their union, to decide which detections are duplicates.
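To make the IoU measure concrete, here is a small sketch. It assumes boxes are given as corner coordinates (x1, y1, x2, y2), which is a convenience for the example; boxes stored as midpoint, height and width would first need converting to corners. The function name `iou` is illustrative.

```python
def iou(box_a, box_b):
    """Intersection over Union of two boxes in (x1, y1, x2, y2) corner format."""
    # Corners of the overlapping rectangle (if any).
    ix1 = max(box_a[0], box_b[0])
    iy1 = max(box_a[1], box_b[1])
    ix2 = min(box_a[2], box_b[2])
    iy2 = min(box_a[3], box_b[3])

    # Width and height are clamped at zero so disjoint boxes give no overlap.
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)

    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0

# Two 2x2 boxes overlapping in a 1x1 square: IoU = 1 / (4 + 4 - 1) = 1/7.
print(round(iou((0, 0, 2, 2), (1, 1, 3, 3)), 4))  # 0.1429
```

An IoU of 1 means the boxes coincide and an IoU of 0 means they do not overlap at all.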

Non-maximal suppression is implemented as follows:

· Find the box confidence (pc), i.e. the probability of the box containing the object, for each detection.

· Pick the bounding box with the maximum box confidence. Output this box as a prediction.

· Discard any remaining bounding boxes which have an IoU greater than 0.5 with the bounding box selected as output in the previous step, i.e. any bounding box with high overlap is discarded.

· Repeat the previous two steps on the boxes that are left until no boxes remain.

If there are multiple classes, non-max suppression is run independently for each class. For example, with four classes it runs four times, once for every output class.
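The steps above, including the per-class loop, can be sketched in NumPy as follows. This is an illustrative version under stated assumptions rather than the implementation used later in this series: boxes are assumed to be in (x1, y1, x2, y2) corner format with positive area, and the names `iou_one_to_many`, `nms` and `nms_per_class` are made up for the example.

```python
import numpy as np

def iou_one_to_many(box, boxes):
    """IoU between one box and an array of boxes, all in (x1, y1, x2, y2) format."""
    ix1 = np.maximum(box[0], boxes[:, 0])
    iy1 = np.maximum(box[1], boxes[:, 1])
    ix2 = np.minimum(box[2], boxes[:, 2])
    iy2 = np.minimum(box[3], boxes[:, 3])
    inter = np.clip(ix2 - ix1, 0, None) * np.clip(iy2 - iy1, 0, None)
    area = (box[2] - box[0]) * (box[3] - box[1])
    areas = (boxes[:, 2] - boxes[:, 0]) * (boxes[:, 3] - boxes[:, 1])
    return inter / (area + areas - inter)

def nms(boxes, scores, iou_threshold=0.5):
    """Return indices of the boxes kept by non-max suppression."""
    order = np.argsort(scores)[::-1]  # highest confidence first
    keep = []
    while order.size > 0:
        best = order[0]
        keep.append(int(best))        # output the most confident box
        rest = order[1:]
        overlaps = iou_one_to_many(boxes[best], boxes[rest])
        order = rest[overlaps <= iou_threshold]  # drop high-overlap boxes
    return keep

def nms_per_class(boxes, scores, class_ids, iou_threshold=0.5):
    """Run NMS once per class and return the kept indices into the original arrays."""
    keep = []
    for c in np.unique(class_ids):
        idx = np.where(class_ids == c)[0]
        kept = nms(boxes[idx], scores[idx], iou_threshold)
        keep.extend(int(i) for i in idx[kept])
    return sorted(keep)

# Made-up detections: boxes 0 and 1 are near-duplicates of the same class.
boxes = np.array([[0, 0, 10, 10], [1, 1, 11, 11], [50, 50, 60, 60], [0, 0, 10, 10]], dtype=float)
scores = np.array([0.9, 0.8, 0.7, 0.6])
class_ids = np.array([0, 0, 0, 1])
print(nms_per_class(boxes, scores, class_ids))  # [0, 2, 3]
```

Box 1 is suppressed because its IoU with box 0 is about 0.68, while box 3 survives despite coinciding with box 0 because it belongs to a different class.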

Anchor boxes

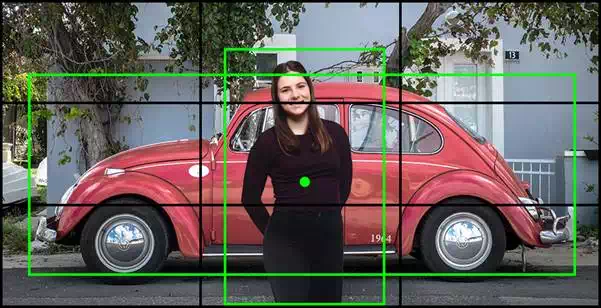

One of the drawbacks of the YOLO algorithm is that each grid cell can detect only one object. What if we want to detect multiple distinct objects in the same grid cell? For example, two objects of different classes may overlap and share the same grid cell, as shown in the image (see Fig 4).

Fig 4. Two Overlapping bounding boxes with two overlapping classes.

We make use of anchor boxes to tackle the issue. Let’s assume the predicted variable is defined as

$$\hat{y} = \begin{bmatrix} p_c & b_x & b_y & b_h & b_w & c_1 & c_2 & \dots & c_8 \end{bmatrix}^T \tag{7}$$

then, we can use two anchor boxes in the following manner to detect two objects in the image simultaneously.

Fig 5. Target variable with two bounding boxes

Earlier, the target variable was defined such that each object in the training image is assigned to the grid cell that contains that object’s midpoint. Now, with two anchor boxes, each object in the training images is assigned to the grid cell that contains the object’s midpoint and, within that cell, to the anchor box with which the object’s bounding box has the highest IoU. So, with the help of two anchor boxes, we can detect at most two objects simultaneously in each grid cell. Fig 6. shows the shape of the final output layer with and without the use of anchor boxes.

Fig 6. Shape of the output layer with two anchor boxes
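The assignment rule and the resulting target shape can be sketched as below. Everything specific here is an assumption for illustration: the grid size, the two anchor shapes, the two classes, the function names, and the common simplification of comparing an object to each anchor by width and height only (as if both shared the same midpoint).

```python
import numpy as np

def shape_iou(wh_a, wh_b):
    """IoU of two boxes that share a midpoint, computed from (width, height) only."""
    inter = min(wh_a[0], wh_b[0]) * min(wh_a[1], wh_b[1])
    union = wh_a[0] * wh_a[1] + wh_b[0] * wh_b[1] - inter
    return inter / union

def encode_targets(objects, anchors, grid_size, num_classes):
    """Build a target tensor of shape (grid, grid, num_anchors, 5 + num_classes).

    objects: list of (bx, by, bh, bw, class_id) with coordinates normalised to [0, 1).
    anchors: list of (width, height) anchor shapes (hypothetical values).
    """
    num_anchors = len(anchors)
    y = np.zeros((grid_size, grid_size, num_anchors, 5 + num_classes))
    for bx, by, bh, bw, class_id in objects:
        col = int(bx * grid_size)  # grid cell containing the midpoint
        row = int(by * grid_size)
        # Anchor whose shape overlaps most with the object's box.
        best = max(range(num_anchors), key=lambda a: shape_iou((bw, bh), anchors[a]))
        y[row, col, best, 0] = 1.0                   # pc
        y[row, col, best, 1:5] = (bx, by, bh, bw)    # box coordinates
        y[row, col, best, 5 + class_id] = 1.0        # one-hot class
    return y

anchors = [(0.1, 0.3), (0.3, 0.1)]  # a tall anchor and a wide anchor
objects = [
    (0.5, 0.5, 0.3, 0.1, 0),  # tall object (e.g. a pedestrian), class 0
    (0.5, 0.5, 0.1, 0.3, 1),  # wide object (e.g. a car), same midpoint, class 1
]
y = encode_targets(objects, anchors, grid_size=3, num_classes=2)
print(y.shape)                     # (3, 3, 2, 7)
print(y[1, 1, 0, 0], y[1, 1, 1, 0])  # 1.0 1.0
```

Both objects fall in the same grid cell, but because they match different anchor shapes each one gets its own slot in the target.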

Although anchor boxes let us detect multiple objects in a grid cell, they still have limitations. For example, if there are two anchor boxes defined in the target variable and three overlapping objects share the same grid cell, then the algorithm fails to detect all three objects. Secondly, if two objects have the same midpoint and are best matched by the same anchor box, then the algorithm fails to differentiate between them. Now that we know the basics of anchor boxes, let’s code it.

In the following code we will use 10 anchor boxes. As a result, the algorithm can detect a maximum of 10 objects in each grid cell.