Curve Fitting

Introduction

Historians attribute the phrase regression analysis to Sir Francis Galton (1822-1911), a British anthropologist and meteorologist, who used the term regression in an address published in Nature in 1885. Galton used the term when describing his discovery that offspring of seeds “did not tend to resemble their parent seeds in size, but to be always more mediocre [i.e., more average] than they.... The experiments showed further that the mean filial regression towards mediocrity was directly proportional to the parental deviation from it.” The content of Galton’s paper would probably be called correlation analysis today, a term which he also coined. However, the term regression was soon applied to situations other than Galton’s, and it has been used ever since.

Regression analysis refers to the study of the relationship between a response (dependent) variable, Y, and one or more independent variables, the X’s. When this relationship is reasonably approximated by a straight line, it is said to be linear, and we talk of linear regression. When the relationship follows a curve, we call it curvilinear regression.

Usually, you assume that the independent variables are measured exactly (without random error) while the dependent variable is measured with random error. Frequently, this assumption is not completely true. When it cannot be justified, a much more complicated fitting procedure is required. However, if the measurement error in an independent variable is small relative to the range of values of that variable, least squares regression analysis may legitimately be used.
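As a rough illustration of the straight-line case described above, the following minimal sketch fits Y = b0 + b1·X by ordinary least squares, treating X as exact and putting all random error in Y. The function name `fit_line` and the sample data are hypothetical, chosen only for this example; they do not come from any particular software package.

```python
def fit_line(x, y):
    """Ordinary least squares fit of y = intercept + slope * x.

    Assumes x is measured without error and all random error is in y.
    Returns (intercept, slope).
    """
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("x and y must have the same length (at least 2)")

    x_mean = sum(x) / n
    y_mean = sum(y) / n

    # Sum of squared deviations of x, and sum of cross-products of deviations.
    sxx = sum((xi - x_mean) ** 2 for xi in x)
    sxy = sum((xi - x_mean) * (yi - y_mean) for xi, yi in zip(x, y))
    if sxx == 0:
        raise ValueError("x values are all identical; slope is undefined")

    slope = sxy / sxx
    intercept = y_mean - slope * x_mean
    return intercept, slope


# Made-up data for illustration only.
x = [1.0, 2.0, 3.0, 4.0, 5.0]
y = [2.1, 3.9, 6.2, 7.8, 10.1]

intercept, slope = fit_line(x, y)

# Residuals are the vertical distances from each point to the fitted line.
residuals = [yi - (intercept + slope * xi) for xi, yi in zip(x, y)]

print(f"intercept = {intercept:.4f}, slope = {slope:.4f}")
```

Only vertical distances (the residuals in Y) are minimized here, which is why the sketch depends on the assumption that the X values are essentially free of measurement error.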

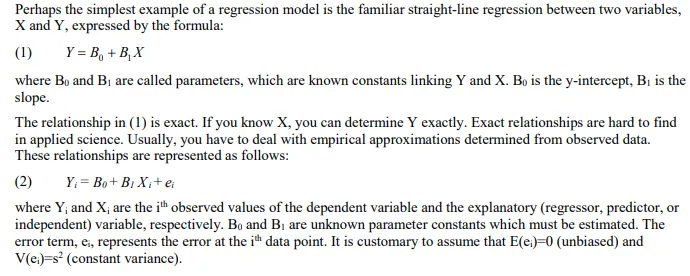

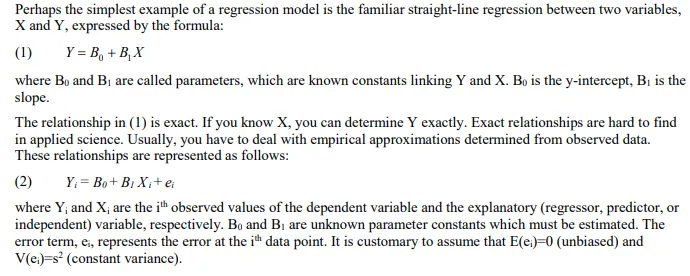

Linear Regression Models

Nonlinear Regression Models

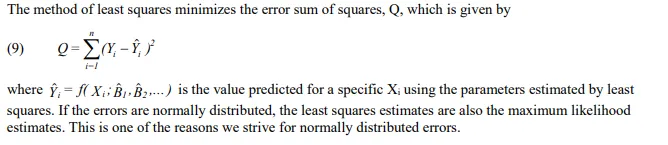

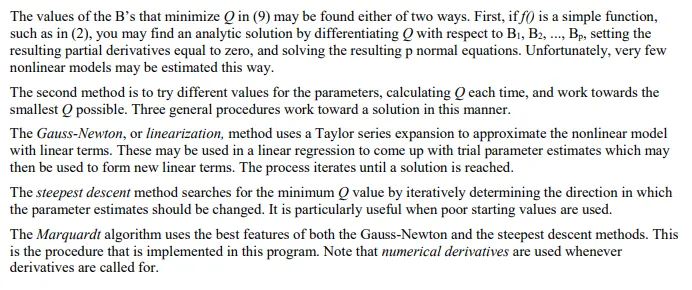

Least Squares Estimation of Nonlinear Models