Unmanned Aerial Systems

Micro electro‐mechanical system (MEMS) technology enables small, lightweight, yet accurate inertial measurement units (IMUs) and magneto‐resistive sensors. At the same time, advances in power storage have produced battery cells with high energy density. Together, these developments allow lightweight unmanned aerial systems (UASs) with increased flight time, suitable for a variety of missions. So far, UASs have been used mainly for military applications, both for reconnaissance and in engagement. However, increasing efforts are being made to use such systems in non‐military scenarios. Within the ICARUS project, several UAS platforms are being developed to support human teams in disaster scenarios as part of an integrated set of unmanned search and rescue (SAR) systems. While a wide range of aerial platforms with basic functionality exists, the UASs need to be adapted with functions specific to the ICARUS framework and its scenarios.

Autonomous UASs for SAR applications are suited as remote sensors for mapping the disaster area, inspecting the local infrastructure and efficiently localising people, and as tools for fast interaction with them. As an additional capability, the UASs will be complemented with a communication relay functionality to establish their own communication network in case the local infrastructure is broken. To fulfil these missions, the UASs will have to feature accurate on‐board localisation and control while requiring minimum power. Power consumption plays a crucial role for UASs, since it determines whether sufficient operation time can be achieved while carrying the on‐board payload. The systems need to work autonomously with minimal human interaction, allowing human forces to focus on other tasks. Furthermore, the project intends to provide solutions for adapting to unknown or even dynamically changing environments: as the platforms operate close to people, they must function robustly without hitting anything or anyone.

All payloads necessary for the SAR missions need to be identified and integrated with the platforms while still meeting the tight specifications on endurance and manoeuvrability. This includes additional sensors and devices for flight autonomy and for integration into the ICARUS framework.

UAS applications for SAR missions

SAR missions can benefit from the use of UASs in many distinct ways. Their unique ability to operate at altitude provides vantage points that robots or humans on the ground cannot reach. Within the ICARUS project, five distinct UAS functionalities have proven useful:

1. Mapping/sectorisation: A UAS can efficiently provide on‐site operators and even higher‐level coordinators with valuable aerial information over large areas and extended periods of time. This information can be used for planning and risk assessment. A direct video stream gives the operator valuable live information about the situation. Furthermore, the aerial data can be processed to build a 3D map of the scanned area or building.

2. Victim search: Searching for victims is one of the key elements of the ICARUS system. Relying largely on the on‐board thermal cameras, the UAS can scan a large area and automatically forward potential victim locations to the operator for verification.

3. Target observation: The UAS provides the operator/coordinators with a pair of remote eyes in the sky. A specified location can be watched for an extended period of time without the operator having to handle low‐level control of the UAS. For the long‐endurance fixed‐wing UAS, which is intrinsically incapable of keeping the camera view constant over time, circling the target will be the default procedure. Target observation can also involve assessing the structural integrity of buildings from both the outside and the inside. In addition, the captured and registered images can also be used for mapping and sectorisation.

4. Delivery: Items that the UAS could usefully deliver include, for example, bottles of water, medical packages and potentially inflatable buoyancy aids. The UAS must be capable of carrying these items and deploying them at a user‐specified location.

5. Communication relay: In a distributed network of robotic and human agents, communication plays a key role. UASs naturally offer good visibility over a large area and are thus suited to act as communication relays when integrated in a common network. This functionality differs from the previous ones, since the request to act as a communication relay will typically be received while UAS platforms are on other missions (though it is of course not limited to this situation). In this case, the prioritisation of communication relaying (i.e. possible interruption/relocation) over the current mission is handled by the coordinator or the UAS operator. A flight plan providing the coverage necessary for the relaying task may be suggested automatically.
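The circling procedure mentioned for target observation (item 3) can be made concrete with a short sketch. It assumes a local east/north frame in metres and a circle radius chosen to respect the fixed‐wing aircraft's minimum turn radius; the function `loiter_waypoints` is hypothetical and not part of the ICARUS software.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]  # local east/north coordinates in metres


def loiter_waypoints(target: Point, radius_m: float, n: int = 12,
                     clockwise: bool = True) -> List[Point]:
    """Return n waypoints evenly spaced on a circle around `target`.

    Hypothetical helper: the radius is assumed to be at least the
    aircraft's minimum turn radius, so the circle is flyable.
    """
    if radius_m <= 0 or n < 3:
        raise ValueError("radius must be positive and n must be >= 3")
    direction = -1.0 if clockwise else 1.0  # negative angles run clockwise
    cx, cy = target
    waypoints = []
    for k in range(n):
        angle = direction * 2.0 * math.pi * k / n
        waypoints.append((cx + radius_m * math.cos(angle),
                          cy + radius_m * math.sin(angle)))
    return waypoints
```

For example, `loiter_waypoints((10.0, -5.0), 150.0, n=8)` starts due east of the target and proceeds clockwise. A real observation plan would additionally pick the radius so that the target stays within the fixed camera's field of view at the chosen altitude.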

In order to provide efficient tools for the defined functionalities, a set of heterogeneous UASs has been developed. The goal of all UAS platforms is to efficiently gather information about the disaster area and possible victims, to provide initial life support and interaction with victims, to assist the SAR teams and ground robots with additional information, and to act as a communication relay in case the local infrastructure is broken. During a SAR mission, different levels of precision of the provided information are needed, which led to the development of platforms with different area coverage and speeds.

The first platform is a long‐endurance fixed‐wing UAS, shown in the top image of Figure 1. This UAS flies at relatively low altitudes of 150–200 m above the ground. It can provide aerial images from both visual and thermal cameras. With its long endurance, ranging from several hours up to a few days, and its autonomous capabilities, the long‐endurance UAS is able to cover large areas in a short period of time. It is therefore most useful in the planning phase of the SAR teams. It can be launched at the base of operations (BOO) before the SAR team reaches the disaster site. Its main purpose is to complement the aerial images gathered from satellites for a first assessment of the disaster site, including pointing out potential positions of victims. It has a fast deployment time of a few hours and, thanks to its hand‐launch capability and autonomy, it is easy to operate. The (potential fleet of) long‐endurance UAS can be given an area to cover with aerial images. It will autonomously scan the area and return to the BOO to deliver the image data for further processing. This information can be used as a first overview of the level of destruction at the disaster site and as an assessment of the local infrastructure, for example, of the roads leading to the disaster site, which are needed for moving to and installing the forward base of operations (FBOO). Furthermore, with its high ground clearance, this UAS is an ideal candidate to act as a communication relay connecting the different SAR teams at a later stage, while still providing aerial information.

FIGURE 1.

UAS fleet within ICARUS. Top: AtlantikSolar from ETH. Bottom left: AROT from EURECAT. Bottom right: Indoor multirotor from Skybotix (source: ICARUS consortium).

The second platform is a large outdoor quadrotor, shown in the bottom left image of Figure 1. This UAS is used for detailed observation and for gathering thermal and visual aerial imagery at low altitudes of up to 100 m above the ground. It is packed in a compact box and can be transported to and used at the FBOO, or used while the SAR team is on the move. It can operate autonomously while mapping a given area in detail, or it can be piloted directly over the remote command and control system (RC2) if the operator wants to assess specific details. It is able to map a small area of interest at a much higher resolution than the fixed‐wing UAS. Furthermore, owing to its lower altitude, it is able to confirm the possible victim locations found by the fixed‐wing UAS and to search for and detect additional victims in that area. Since the quadrotor is able to hover, it is suited to mapping the walls of buildings for structural integrity checks. Finally, it allows interaction with victims to assess their health and, with the help of the integrated delivery system, to provide them with water or first aid kits.

The last platform is the indoor multirotor, shown in the bottom right image of Figure 1. This multirotor has a small footprint and can be used to inspect, from the inside, buildings whose structural integrity might be compromised. In comparison to unmanned ground vehicles (UGVs), the aerial multirotor has the advantage of easily accessing buildings without being blocked by debris inside, as long as there is aerial clearance. Furthermore, it can easily move between floors for inspection. This UAS assists the SAR teams in assessing the structural properties of the building and mapping it without risking the life of a human rescue team member. As in general no map of the interior is available and debris might block the paths, the UAS must be piloted by an operator over the RC2, while a video stream is fed back to enable first person view (FPV) flight. Since the operator will not have a complete overview of the path, the UAS needs to be aware of potential obstacles and avoid them if necessary.

All these platforms have a distinctive purpose and complement each other to form a complete set of aerial robots for different SAR scenarios. They operate at different locations and altitudes for airspace separation and collision avoidance between the UASs.

UAS mechanical design

As discussed, the UAS fleet with its sensors can provide a great deal of situational awareness for the SAR teams. A great variety of drones is available on the market. However, they still have only limited autonomy due to limited sensor capabilities and thus need constant supervision by a trained pilot. The main tasks within the ICARUS project are thus to extend the autonomy and situational awareness of the systems, helping the SAR teams in their missions with limited human interaction.

Visual sensor payload

Since all the platforms have similar requirements for the visual sensor payload, a common visual sensor payload was chosen. This reduces overall development and maintenance effort. This visual‐inertial (VI) sensor (see Figure 2) combines visual information from up to four visual and/or thermal cameras with the information from an inertial measurement unit (IMU), forming a complete measurement set for vision‐based mapping and localisation. Since the thermal camera development in WP 210 does not yet meet the resolution and weight constraints, a FLIR Tau 2 thermal camera is used on all platforms. The sensors are hardware‐synchronised for tight fusion in the image processing algorithms. The unit is suitable for sensing and for on‐board processing for simultaneous localisation and mapping (SLAM) as well as for application‐oriented algorithms (cartography, victim detection). Its core consists of a field programmable gate array (FPGA) for visual pre‐processing and an exchangeable processing unit (Kontron COM Express module) that allows the use of standard tools (e.g. installing a standard Linux operating system and running the vision algorithms). The unit was designed for low‐power and low‐weight applications such as on board small UASs. It has a mass of 150 g and a power consumption of around 10–15 W.

FIGURE 2.

VI sensor hardware with two visual cameras and mounted IMU (source: ICARUS consortium).

The sensor unit can be used for accurate real‐time pose estimation and mapping in all six degrees of freedom (position and orientation). The integrated FPGA can provide raw visual data as well as pre‐processed visual data, such as visual keypoints used for SLAM and mapping. The team at the Swiss Federal Institute of Technology in Zurich (ETHZ) has verified the mapping capabilities of the VI sensor in GPS‐denied environments; a thorough analysis may be found in Ref.

The raw and processed information from the VI sensor can be used for the mapping, victim search, as well as for the target observation algorithms. Furthermore, increased control performance can be achieved using the improved pose estimation.

ATLANTIKSOLAR

AtlantikSolar from ICARUS partner ETHZ was chosen as the fixed‐wing long‐endurance UAS. It is a small and lightweight solar‐powered UAS. Solar UAS prototypes of different sizes and wingspans have been successfully operated by NASA, QinetiQ, Airbus and others. The integrated solar technology increases the overall flight time and can even enable perpetual flight. This makes such UASs ideal candidates for extended information gathering over large areas and for acting as airborne communication relays.

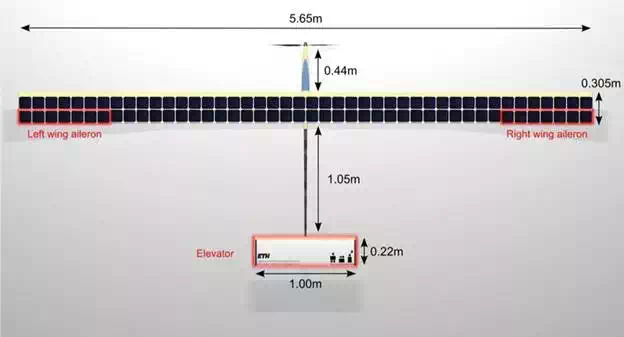

What distinguishes the AtlantikSolar UAS (shown in Figure 3) from others is its ability to fly at low altitudes and its ease of transport and fast deployment, owing to its small size and weight. The UAS has a wingspan of 5.6 m and an overall weight of 7.5 kg. Thanks to its low weight, it can be launched by hand and can thus be deployed at almost any open location without the need for an intact airstrip. The main wing consists of three parts, which can be disassembled for transportation. The ribs of the main wing are built out of balsa wood. These profile‐giving structures are interconnected by a spar made of carbon‐fibre tubes, which runs from wingtip to wingtip. This results in a lightweight structure that can carry the necessary battery packs, with a total weight of 3.5 kg and a capacity of 34.5 Ah at a nominal voltage of 21.6 V. The batteries are stored inside the carbon‐fibre tubes along the main wing. The whole top surface of the main wing is used to embed solar modules. Flying at a nominal speed of 9.5 m/s, it is capable of flying for up to 10 days in appropriate weather conditions. The nominal power consumption is around 60 W, while the solar panels generate up to 250 W at noon. The complete specifications can be found in Ref. [2]. The long‐endurance capability was demonstrated in July 2015 in Switzerland with a flight that set a new world record of over 81 hours of continuous flight, covering 2316 km.
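The figures quoted above permit a quick, idealised energy budget. The Python sketch below is an illustration only: it assumes the full nominal battery capacity is usable and ignores conversion losses, battery ageing and the day/night variation of solar input; the helper names are hypothetical.

```python
def battery_energy_wh(capacity_ah: float, voltage_v: float) -> float:
    """Nominal stored energy E = C * V in watt-hours."""
    return capacity_ah * voltage_v


def battery_only_endurance_h(energy_wh: float, power_w: float) -> float:
    """Idealised endurance t = E / P, ignoring losses and reserves."""
    return energy_wh / power_w


def average_speed_ms(distance_km: float, duration_h: float) -> float:
    """Average ground speed in m/s over a distance flown in duration_h."""
    return distance_km * 1000.0 / (duration_h * 3600.0)


# Figures quoted in the text for AtlantikSolar.
energy = battery_energy_wh(34.5, 21.6)            # 745.2 Wh
endurance = battery_only_endurance_h(energy, 60)  # about 12.4 h without sun
record_speed = average_speed_ms(2316, 81)         # about 7.9 m/s
surplus_at_noon = 250 - 60                        # W available for charging
```

Without any solar input, the battery alone would thus last roughly 12 hours at the nominal 60 W, and up to about 190 W of surplus solar power is available around noon. The record‐flight speed is an upper bound, since the flight lasted more than 81 hours; it is also a ground speed and so not directly comparable with the nominal airspeed.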

FIGURE 3.

Specification of the AtlantikSolar UAS (source: ICARUS consortium).

The UAS autopilot is based on the open‐source Pixhawk project, which was adapted for autonomous waypoint‐based navigation. It is equipped with a sensor suite consisting of an inertial measurement unit (IMU), a magnetometer for determining the heading of the UAS, a global positioning system (GPS) receiver, a pitot tube measuring the airspeed and a static pressure sensor. These sensors are fused to estimate the pose of the UAS at all times, as described in Ref. An additional sensor payload (SensorPod) has been mounted on the wing and incorporates the VI sensor together with the communication system. The sensor payload contains an Intel Atom motherboard running the Linux operating system and ROS. The ROS interface is used for running the vision algorithms as well as for communicating within the ICARUS mesh over the adapted Joint Architecture for Unmanned Systems (JAUS) protocol.
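The pitot tube mentioned above measures the difference Δp between total and static pressure. Under the standard incompressible approximation, airspeed follows from Bernoulli's equation, v = sqrt(2Δp/ρ). The sketch below assumes sea‐level standard air density (1.225 kg/m³); the estimator described in Ref. fuses this measurement with the other sensors and may apply corrections not shown here.

```python
import math

SEA_LEVEL_AIR_DENSITY = 1.225  # kg/m^3, ISA standard atmosphere at sea level


def airspeed_from_pitot(delta_p_pa: float,
                        rho: float = SEA_LEVEL_AIR_DENSITY) -> float:
    """Airspeed from pitot-static differential pressure (incompressible flow).

    Bernoulli: delta_p = 0.5 * rho * v**2  =>  v = sqrt(2 * delta_p / rho).
    Negative readings (sensor noise near zero airspeed) are clamped to 0.
    """
    return math.sqrt(2.0 * max(delta_p_pa, 0.0) / rho)


def dynamic_pressure(v_ms: float, rho: float = SEA_LEVEL_AIR_DENSITY) -> float:
    """Inverse relation: q = 0.5 * rho * v**2 in pascals."""
    return 0.5 * rho * v_ms ** 2
```

At the nominal 9.5 m/s, `dynamic_pressure(9.5)` gives only about 55 Pa, which illustrates how small the pressure signal is at the low speeds of a solar aircraft.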

As depicted in Figure 4, the operator may control the UAS either directly by radio control or through the ground control station (GCS). Manual control is intended for safety reasons only; in normal operation, the UAS is controlled from the GCS by providing a flight path. Two different long‐range communication channels are integrated in the data link to ensure connectivity throughout the whole operation: a high‐bandwidth 5 GHz Wi‐Fi link for the camera transmissions and a long‐range, low‐bandwidth communication device for control and operation of the UAS.

FIGURE 4.

Communication structure of the AtlantikSolar UAS (source: ICARUS consortium).

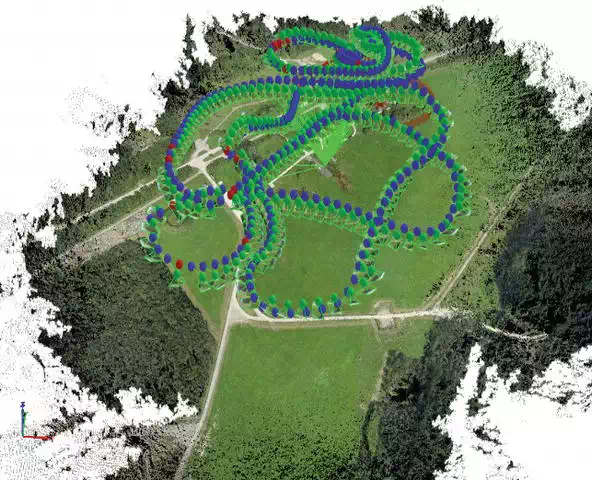

Towards complete autonomy, the AtlantikSolar UAS implements path‐planning algorithms that address the problem of area coverage and exploration. The algorithm tackles the problem of covering a large area, taking into account the dynamics of a fixed‐wing UAS with a rigidly mounted camera. It is based on sampling‐based methods and searches for the optimal path that ensures full exploration of a given area in minimum time, while respecting the non‐holonomic constraints of the vehicle. Results and detailed explanations can be found in Ref. The framework has been successfully tested in multiple cases, including the ICARUS public field trials in Barcelona/CTC and in Marche‐en‐Famenne, Belgium. The results can be seen in Figure 5, where the generated map of the Marche‐en‐Famenne trial site is shown together with the optimised path for total area coverage. Thus, the operator only needs to provide the area to be covered, while the UAS plans the whole mission fully autonomously and returns to deliver the gathered information.
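The planner described above is sampling‐based and optimises the path under the vehicle's non‐holonomic constraints, which is beyond a short example. For intuition only, the sketch below uses a much simpler, swapped‐in technique: the classic boustrophedon ("lawnmower") sweep. It assumes a rectangular area, flat terrain and a nadir‐pointing camera with a known field of view; the function names are hypothetical.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def track_spacing(altitude_m: float, fov_deg: float, side_overlap: float) -> float:
    """Distance between adjacent sweep lines for a nadir-pointing camera.

    Ground footprint width w = 2 * h * tan(FOV / 2); adjacent image strips
    overlap by the fraction `side_overlap` (0 <= overlap < 1).
    """
    if not 0.0 <= side_overlap < 1.0:
        raise ValueError("side_overlap must be in [0, 1)")
    footprint = 2.0 * altitude_m * math.tan(math.radians(fov_deg) / 2.0)
    return footprint * (1.0 - side_overlap)


def lawnmower(width_m: float, height_m: float, spacing_m: float) -> List[Point]:
    """Boustrophedon waypoints over the rectangle [0, width] x [0, height].

    Sweep lines run along y, are spaced along x, and alternate direction.
    Turn feasibility for a fixed wing (minimum turn radius) is NOT checked.
    """
    if spacing_m <= 0:
        raise ValueError("spacing must be positive")
    n_lines = max(1, math.ceil(width_m / spacing_m))
    waypoints: List[Point] = []
    for i in range(n_lines):
        x = min((i + 0.5) * spacing_m, width_m)  # centre of each image strip
        start, end = (0.0, height_m) if i % 2 == 0 else (height_m, 0.0)
        waypoints.append((x, start))
        waypoints.append((x, end))
    return waypoints
```

At 150 m altitude with, say, a 60° field of view and 30 % side overlap, `track_spacing` gives about 121 m between lines. Where that spacing is smaller than twice the minimum turn radius, a fixed wing cannot reverse directly onto the adjacent line; handling such constraints is one reason a dedicated planner like the one in Ref. is used.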

FIGURE 5.

Optimal path planning for the AtlantikSolar UAS. The path is optimised to cover the trial site in Marche‐en‐Famenne. The captured images are used to form the map shown (source: ICARUS consortium).

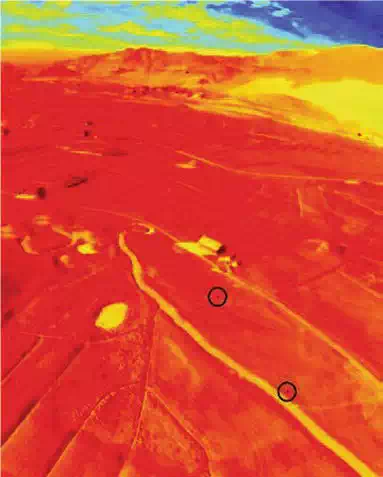

Finally, the UAS is able to detect possible locations of human victims using both the thermal and the visual camera information provided by the VI sensor (see Figure 6). Since human body temperature is generally higher than that of the surroundings, the thermal imagery can be used to detect humans. Due to the limited resolution of the thermal camera, the visual camera can be used to reference the found location. The algorithm works by subtracting the background from the thermal image and comparing the resulting regions of interest with known human features. A complete explanation of the algorithm can be found in Ref. The victims are tracked over time by the UAS as long as they stay within the camera coverage, and their locations are sent back to and displayed at the RC2.
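The first stage of this pipeline, separating warm regions from the background, can be sketched as follows. This is a simplified illustration, not the algorithm of Ref.: it assumes pixel values have already been converted to temperatures in °C, uses the frame median as the background estimate, and applies a fixed, hypothetical 8 °C margin.

```python
from statistics import median
from typing import List

Frame = List[List[float]]  # thermal image as rows of temperatures in deg C


def hot_pixel_mask(frame: Frame, delta_c: float = 8.0) -> List[List[bool]]:
    """Mark pixels that are warmer than the estimated background by delta_c.

    The background is estimated as the median temperature of the frame,
    which is robust as long as warm targets cover only a small part of the
    image (typical for aerial views). delta_c is an assumed threshold.
    """
    background = median(t for row in frame for t in row)
    return [[t - background > delta_c for t in row] for row in frame]
```

The resulting candidate regions would then be compared with human features such as size and shape, a step not shown here.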

FIGURE 6.

Victim detection with the UAS. Possible locations of human bodies are marked in the picture and sent to the RC2 (source: ICARUS consortium).

LIFT QUADROTOR

The lightweight and integrated platform for flight technologies (LIFT) coaxial‐quadrotor developed by EURECAT is a hovering UAS with vertical take‐off and landing (VTOL) capabilities, able to carry out different tasks in the context of SAR operations. Quadrotors such as the LIFT are a popular configuration among VTOL UASs. Their design is suitable for robust hovering and omnidirectional motion. Furthermore, they can generate high torques on the main body for highly agile manoeuvres. The hovering capability and payload capacity of the Eurecat quadrotor complement the functionality of the AtlantikSolar UAS. It is able to fly closer to the ground for detailed mapping, to lock onto and approach potential victims, and to deliver goods to them. Thanks to its hovering capability, it can provide the operator with a steady aerial image feed.

The LIFT (see Figure 7) is a large coaxial‐quadrotor with a weight of 4.3 kg. The shaft‐to‐shaft distance is 0.86 m, and at each end two propellers are mounted in a coaxial configuration. The propellers can lift a maximum weight of 9.6 kg. The main frame consists of carbon‐fibre tubes connected at the centre, with the autopilot unit on top and a variable payload attachment at the bottom between the landing gear. The operational altitude is between 25 and 100 m above the ground. Two lithium polymer batteries with a capacity of 10 Ah at a nominal voltage of 21.6 V power the UAS. The flight time depends on the payload and can be up to 30 minutes before the batteries must be replaced. The UAS can be safely stored in a box for transport to the destination.
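The stated mass and maximum lift give the LIFT's thrust‐to‐weight ratio, a rough indicator of its control authority and payload margin. The minimal calculation below treats the 9.6 kg maximum lift as total static thrust; the function names and the 2:1 reserve are illustrative assumptions, not figures from the source.

```python
def thrust_to_weight(max_lift_kg: float, mass_kg: float) -> float:
    """Ratio of maximum static thrust to vehicle weight (both in kg-force)."""
    return max_lift_kg / mass_kg


def payload_margin_kg(max_lift_kg: float, mass_kg: float,
                      min_ratio: float = 1.0) -> float:
    """Extra mass that can be carried while keeping thrust/weight >= min_ratio."""
    return max_lift_kg / min_ratio - mass_kg


ratio = thrust_to_weight(9.6, 4.3)             # about 2.2
hover_only = payload_margin_kg(9.6, 4.3)       # 5.3 kg: lift just equals weight
with_reserve = payload_margin_kg(9.6, 4.3, 2)  # 0.5 kg keeps a 2:1 ratio
```

The 5.3 kg figure is only a theoretical ceiling, since a vehicle at a 1:1 ratio cannot manoeuvre. A reserve of about 2:1 is an often cited rule of thumb for multirotors, which here leaves roughly 0.5 kg for payload.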

FIGURE 7.

The Eurecat coaxial‐quadrotor with the camera payload (source: ICARUS consortium).

The UAS autopilot architecture is similar to that of the AtlantikSolar UAS. A Pixhawk autopilot is combined with a netbook processor running Linux and ROS for communication in the ICARUS mesh and for running the vision algorithms. The UAS is equipped with state‐of‐the‐art proprioceptive sensors (accelerometers, gyroscopes and altimeter), which are used to stabilise the attitude of the UAS within the autopilot explained in Ref. Using the GPS measurements, the position of the UAS can be controlled. Additionally, the UAS is equipped with exteroceptive sensors (the VI sensor and range sensors) for enhanced state estimation. The platform can fly autonomously or be piloted remotely. It has an advanced set of sensors to carry out different tasks in the context of SAR operations, such as first aid kit delivery, outdoor victim search, terrain mapping and reconnaissance. It has several communication links. A radio control link allows direct control of the UAS; this link has an override mechanism to take over the UAS in case of emergency. The primary communication link is used to send basic information between the GCS and the UAS so that the UAS can be monitored constantly. Further, the ICARUS communication link is incorporated inside the payload to connect to the ICARUS mesh over Wi‐Fi. The UAS can be operated in modes ranging from manual to fully autonomous, following a desired path defined in the GCS or the RC2.
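The override behaviour described above can be thought of as priority‐based arbitration between command sources. The sketch below assumes three sources (RC link, GCS, RC2), a hypothetical priority order with the safety pilot on top, and a timeout after which a silent source is ignored; none of these names or values come from the LIFT software.

```python
from dataclasses import dataclass
from enum import IntEnum
from typing import Dict, Optional


class Source(IntEnum):
    """Command sources; a larger value means higher priority (assumed order)."""
    RC2 = 1        # remote command and control system
    GCS = 2        # ground control station
    RC_PILOT = 3   # radio control link used as safety override


@dataclass
class Command:
    source: Source
    timestamp_s: float
    payload: str


def arbitrate(latest: Dict[Source, Command], now_s: float,
              timeout_s: float = 1.0) -> Optional[Command]:
    """Return the highest-priority command that is not older than timeout_s.

    RC_PILOT commands are assumed to be forwarded only while the pilot's
    override switch is engaged, so normally the GCS or RC2 is in control.
    """
    fresh = [c for c in latest.values() if now_s - c.timestamp_s <= timeout_s]
    if not fresh:
        return None  # no valid input: a real autopilot would enter a failsafe mode
    return max(fresh, key=lambda c: c.source)
```

Keeping the arbitration stateless and timestamp‐based means a lost link simply ages out, and control falls back to the next fresh source without explicit hand‐over messages.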

Using the VI sensor with the thermal camera as payload, LIFT is able to map small areas and buildings from the outside. Compared to the fixed‐wing UAS, it can fly close to buildings to gather detailed visual information, which can be used to assess their structural integrity. Using the live video stream, the operator can precisely guide the UAS to reach and lock onto specific locations or targets for further investigation. The mapping capability was demonstrated at the Marche‐en‐Famenne trial site, where two buildings were entirely mapped, as shown in Figure 8.

FIGURE 8.

Map of two buildings from the Marche‐en‐Famenne trial site created by the Eurecat quadrotor (source: ICARUS consortium).

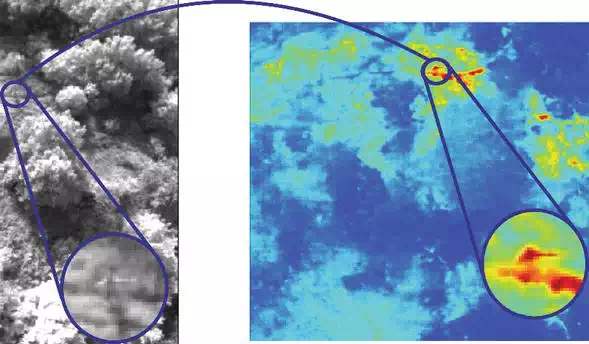

The visual and thermal cameras can also be used for victim detection. Detected victim positions can be sent to and displayed at the RC2. The aerial capability of the UAS is beneficial for detecting humans in a forest or rubble field, where humans or robots on the ground can only move slowly. Compared to the fixed‐wing UAS, LIFT has the advantage of flying at lower altitudes, hovering at a certain point and moving omnidirectionally. It can therefore cover an area in much more detail and from different specific viewpoints, increasing the probability of finding victims. One such scenario is shown in Figure 9, where the UAS detects a human in the forest partially covered by a tree. This also shows the advantage of the thermal camera over the visual camera, in which the victim is barely visible. The people detector uses histograms of oriented gradients (HOGs) with a sliding window approach on the images. One of the main reasons for using the HOG human detector is that it uses a ‘global’ feature to describe a person rather than a collection of ‘local’ features; that is, the entire person is represented by a single feature vector. Another important advantage is that this classifier is already trained. However, the training data set is limited to certain postures and camera orientations. One drawback of the HOG detector is that people need to appear relatively large in the image (around 64 × 128 pixels), so it fails when a person occupies only a small part of the image (a common situation in aerial images). For this reason, a region‐growing algorithm based on temperature blobs is implemented to search for victims.
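The region‐growing step can be sketched as a flood fill seeded at hot pixels: a neighbouring pixel joins the blob if its temperature is within a tolerance of the seed temperature. This is a generic seeded region‐growing sketch under assumed parameters (seed threshold, tolerance, minimum blob size), not the exact algorithm used on LIFT.

```python
from collections import deque
from typing import List, Set, Tuple

Pixel = Tuple[int, int]


def grow_regions(frame: List[List[float]], seed_min_c: float = 30.0,
                 tol_c: float = 3.0, min_pixels: int = 2) -> List[Set[Pixel]]:
    """Seeded region growing on a thermal image (temperatures in deg C).

    Every unvisited pixel at or above seed_min_c starts a region; 4-connected
    neighbours are added while they stay within tol_c of the seed temperature.
    Regions smaller than min_pixels are discarded as noise.
    """
    rows, cols = len(frame), len(frame[0])
    visited = [[False] * cols for _ in range(rows)]
    regions: List[Set[Pixel]] = []
    for r0 in range(rows):
        for c0 in range(cols):
            if visited[r0][c0] or frame[r0][c0] < seed_min_c:
                continue
            seed_t = frame[r0][c0]
            region: Set[Pixel] = set()
            queue = deque([(r0, c0)])
            visited[r0][c0] = True
            while queue:  # breadth-first flood fill from the seed
                r, c = queue.popleft()
                region.add((r, c))
                for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                    nr, nc = r + dr, c + dc
                    if (0 <= nr < rows and 0 <= nc < cols
                            and not visited[nr][nc]
                            and abs(frame[nr][nc] - seed_t) <= tol_c):
                        visited[nr][nc] = True
                        queue.append((nr, nc))
            if len(region) >= min_pixels:
                regions.append(region)
    return regions
```

Unlike the 64 × 128 pixel HOG window, such blobs can be only a few pixels in size, which suits people seen from altitude; the price is more false positives from other warm objects.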

FIGURE 9.

Victim detection using the Eurecat quadrotor. The victim lying in the forest and partially covered by a tree is found using the thermal images (source: ICARUS consortium).

Finally, the UAS payload can be exchanged for a delivery mechanism, which uses an electromagnet to release the goods. By attaching a magnet to the delivery kit, the kit can easily be connected to the UAS. As soon as the UAS reaches the destination point, the operator can disable the electromagnet and deploy the kit, as shown in Figure 10. The mechanism is used to help injured but responsive victims by providing them with necessary supplies and treatment until the ground rescue teams arrive.
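The release step can be expressed as a small guard: the electromagnet is only de‐energised when the UAS is at the destination and the operator confirms. The sketch below assumes a horizontal position tolerance and a ground‐speed limit as the "steady hover" condition; these thresholds and all names are hypothetical, since the source only states that the operator disables the electromagnet on arrival.

```python
import math
from dataclasses import dataclass
from typing import Tuple


@dataclass
class DeliveryState:
    magnet_on: bool = True   # kit is held while the electromagnet is energised
    delivered: bool = False


def try_release(state: DeliveryState, position: Tuple[float, float],
                target: Tuple[float, float], ground_speed_ms: float,
                operator_confirms: bool, tolerance_m: float = 2.0,
                max_speed_ms: float = 0.5) -> bool:
    """De-energise the electromagnet if all release conditions hold.

    Returns True only on the call that actually releases the kit.
    """
    if state.delivered or not operator_confirms:
        return False
    if math.dist(position, target) > tolerance_m or ground_speed_ms > max_speed_ms:
        return False  # too far from the target or not yet hovering steadily
    state.magnet_on = False
    state.delivered = True
    return True
```

Requiring both the operator's confirmation and an automatic position check keeps the human in the loop while guarding against accidental drops in transit.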

FIGURE 10.

Eurecat quadrotor delivering a first aid kit to an injured victim (source: ICARUS consortium).

SKYBOTIX MULTICOPTER

The Skybotix multicopter is a hexacopter with a small footprint. Like the LIFT, it is capable of VTOL flight and hovering. However, it is mainly used to inspect the inside of buildings. With its small footprint, it is able to enter buildings through small openings such as open windows or doors. The platform was chosen because it hovers robustly, with low sensitivity to wind and other disturbances, while still being able to fly through narrow passages. Since it can fly indoors, it can help analyse the structural integrity of the building from the inside and search for people trapped inside.

The Skybotix multicopter, shown in Figure 11, is a modified version of the AscTec Firefly with a weight of 1.4 kg, including the additional sensor payload of 420 g. It has six propellers in a hexagonal configuration, with a radius of 0.66 m and a height of 0.17 m. Soft propellers are used to avoid harming people. The UAS has some built‐in fault tolerance, since it can still fly with only five propellers. The propellers are connected to the centre of the main body by carbon‐fibre tubes. A battery with a capacity of 4.9 Ah at a voltage of 12 V powers the UAS, giving a maximum flight time of 15 minutes before it needs to be replaced. The upper part of the main body encapsulates the proprioceptive sensors and the microprocessor for control, whereas the VI sensor, the communication devices and an additional CPU for mapping and integration into the ICARUS mesh are mounted below.
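The battery and flight‐time figures imply an average power draw, and the mass figures give the share of the take‐off mass taken by the sensor payload. The short calculation below assumes the full nominal capacity is used in flight; the helper names are illustrative.

```python
def average_power_w(capacity_ah: float, voltage_v: float, flight_min: float) -> float:
    """Average electrical power if the full battery is used in flight_min minutes."""
    energy_wh = capacity_ah * voltage_v
    return energy_wh / (flight_min / 60.0)


def payload_fraction(payload_g: float, total_g: float) -> float:
    """Share of the take-off mass taken by the payload."""
    return payload_g / total_g


power = average_power_w(4.9, 12.0, 15.0)    # 58.8 Wh over 0.25 h, about 235 W
fraction = payload_fraction(420.0, 1400.0)  # 0.3 of take-off mass is sensor payload
```

Since usable capacity is below nominal, the real average draw is somewhat lower. Even so, the roughly 235 W compared with the 60 W cruise power of the much larger AtlantikSolar illustrates the energetic cost of hovering.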

FIGURE 11.

Skybotix multicopter flying through a window for inspecting a building from the inside (source: ICARUS consortium).

The UAS can be flown manually over the dedicated remote control link or over the RC2. It has several modes of operation, ranging from an attitude‐stabilised mode to a full position‐assist mode. Since the indoor environment is not known a priori and could be cluttered, an operator has to fly the UAS constantly by providing translational velocity commands in the position‐assist mode. A continuous video stream provides feedback to the operator. The basic idea of the obstacle avoidance is to constrain the commanded velocity vector of the UAS by overlaying a repelling velocity. This is done using a potential field around the obstacles that generates a repelling velocity whose magnitude increases as the distance between the UAS and the obstacle decreases. The tracked velocity command is thus the average of the user set point and the repelling velocities of all obstacles in the vicinity. Although the user commands are given as velocity set points, the UAS itself is controlled in position, which prevents position drift when there is no user input, even in the presence of external disturbances such as wind gusts. A detailed explanation of the control can be found in Ref.
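The velocity‐shaping scheme described above can be sketched directly: each nearby obstacle contributes a repelling velocity pointing away from it, and the tracked command is the average of the user set point and these repelling terms. The magnitude law gain·(1/d − 1/d₀) inside an influence radius d₀, the parameter values and the function names are assumptions for illustration; the actual control law is given in Ref.

```python
import math
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def repelling_velocity(uav: Vec3, obstacle: Vec3, gain: float = 1.0,
                       influence_m: float = 2.0) -> Vec3:
    """Velocity pointing away from an obstacle, growing as the distance shrinks.

    Assumed magnitude law: gain * (1/d - 1/influence_m) inside the influence
    radius and zero outside it (and, degenerately, at d == 0).
    """
    diff = tuple(u - o for u, o in zip(uav, obstacle))
    d = math.sqrt(sum(x * x for x in diff))
    if d >= influence_m or d == 0.0:
        return (0.0, 0.0, 0.0)
    magnitude = gain * (1.0 / d - 1.0 / influence_m)
    return tuple(magnitude * x / d for x in diff)


def avoid(user_cmd: Vec3, uav: Vec3, obstacles: Sequence[Vec3],
          gain: float = 1.0, influence_m: float = 2.0) -> Vec3:
    """Average the user set point with the repelling velocities of nearby obstacles."""
    terms: List[Vec3] = [user_cmd]
    for obs in obstacles:
        rep = repelling_velocity(uav, obs, gain, influence_m)
        if any(rep):  # only obstacles in the vicinity contribute a term
            terms.append(rep)
    n = len(terms)
    return tuple(sum(t[i] for t in terms) / n for i in range(3))
```

With these values, an obstacle 1 m straight ahead slows a 1 m/s forward command to 0.25 m/s, while obstacles outside the 2 m radius leave the command unchanged. Many potential‐field implementations sum the terms instead of averaging them; the average is used here because the text describes it.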

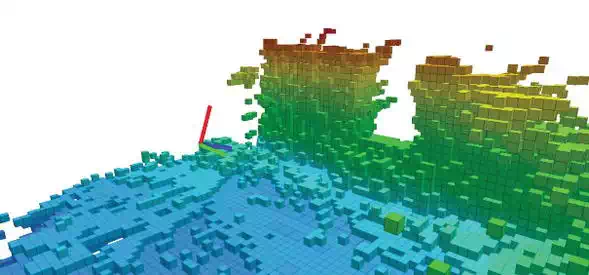

In order to perform obstacle avoidance and navigation, the UAS needs a representation of its surroundings. To this end, the VI sensor is used to generate depth images, which are subsequently incorporated into a 3D voxel grid. Each depth image is estimated from a time‐synchronised stereo image pair from the VI sensor. A block‐matching algorithm finds corresponding points in both image frames, which can then be triangulated using the known baseline between the two cameras. To avoid short‐term drift in localisation and map generation and to reduce the computational burden, depth images are only estimated once the UAS has moved far enough from the known terrain that a new pose keyframe must be generated. Using the pose of the keyframe, the depth image can be transformed into a 3D point cloud. Since such point clouds quickly become intractable for large areas, the information must be compressed into an efficient grid‐based map. Within this research, the OctoMap mapping framework is used, which results in the environmental representation shown in Figure 12. This representation uses an octree‐based grid with variable resolution, in which each node stores its probability of being occupied. All node probabilities are updated by incorporating each new point cloud, taking into account the uncertainty given by its noise model.
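The two steps described above, finding correspondences by block matching and triangulating them with the known baseline, can be illustrated on a single rectified scanline. This minimal sketch assumes rectified images, a sum‐of‐absolute‐differences (SAD) cost and integer disparities. The focal length and baseline in the example are hypothetical, not the VI sensor's calibration.

```python
from typing import List, Optional


def match_disparity(left_row: List[float], right_row: List[float], x: int,
                    half_window: int = 2, max_disparity: int = 16) -> Optional[int]:
    """Disparity of pixel x in the left row via sum-of-absolute-differences.

    Assumes rectified images, so correspondences lie on the same row and the
    matching pixel in the right image is at x - d.
    """
    n = len(left_row)
    if x - half_window < 0 or x + half_window >= n:
        return None  # the matching window would leave the image
    best_d, best_cost = None, float("inf")
    for d in range(max_disparity + 1):
        if x - d - half_window < 0:
            break  # candidate window would leave the right image
        cost = sum(abs(left_row[x + k] - right_row[x - d + k])
                   for k in range(-half_window, half_window + 1))
        if cost < best_cost:
            best_d, best_cost = d, cost
    return best_d


def depth_from_disparity(disparity_px: int, focal_px: float, baseline_m: float) -> float:
    """Triangulated depth Z = f * B / d for a rectified stereo pair."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a finite depth")
    return focal_px * baseline_m / disparity_px
```

For example, with a hypothetical focal length of 450 px and a 0.1 m baseline, a disparity of 3 px corresponds to a depth of 15 m. Because Z = fB/d, the depth error per pixel of disparity grows with Z²/(fB), which is one reason stereo depth is noisy for distant surfaces.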

FIGURE 12.

OctoMap representation of the interior of a building generated by the Skybotix UAS (source: ICARUS consortium).
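The probabilistic node update described in the text is commonly done in log‐odds form, which turns the Bayesian fusion of independent measurements into an addition. OctoMap additionally clamps the values so that cells remain able to change when the environment changes. The sketch below shows this for a single cell; the sensor‐model probabilities and clamping bounds are illustrative assumptions, not the parameters used on the Skybotix UAS.

```python
import math


def logit(p: float) -> float:
    """Log-odds of probability p."""
    return math.log(p / (1.0 - p))


def probability(l: float) -> float:
    """Inverse of logit."""
    return 1.0 / (1.0 + math.exp(-l))


class OccupancyCell:
    """One map cell storing its occupancy as clamped log-odds."""

    L_HIT = logit(0.7)    # assumed P(occupied) when a beam ends in the cell
    L_MISS = logit(0.4)   # assumed P(occupied) when a beam passes through it
    L_MIN, L_MAX = logit(0.12), logit(0.97)  # assumed clamping bounds

    def __init__(self) -> None:
        self.log_odds = 0.0  # prior p = 0.5: unknown

    def update(self, hit: bool) -> None:
        """Fuse one measurement by adding its log-odds, then clamp."""
        self.log_odds += self.L_HIT if hit else self.L_MISS
        self.log_odds = min(max(self.log_odds, self.L_MIN), self.L_MAX)

    @property
    def p_occupied(self) -> float:
        return probability(self.log_odds)
```

Without clamping, a cell observed as occupied many times would need just as many contrary observations before it could be cleared; with clamping, a single miss already lowers a saturated cell noticeably, which matters in disaster sites where debris may still shift.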

Conclusions

This chapter discussed the ICARUS unmanned aerial systems, which were developed to assist human search and rescue workers by providing them with aerial systems that can fulfil multiple roles: act as an eye in the sky, perform large‐area or small‐area mapping and surveillance operations, detect remaining survivors outside, perform detailed 3D reconstructions for damage assessment and structural inspection, act as wireless repeaters, search for victims inside semi‐demolished buildings, drop rescue kits and floatation devices, … As it is impossible to fulfil all these roles with a single aircraft, three main platforms were developed: a fixed‐wing endurance aircraft, which can stay airborne for multiple days and which even set a world record (over 81 hours) in this respect; an outdoor rotorcraft, which performs more targeted operations at lower altitudes; and an indoor rotorcraft, which is small, agile and intelligent enough to navigate in cluttered indoor environments.