Where To Next? The Future of Robotics

Self-Reconfigurable Robotics

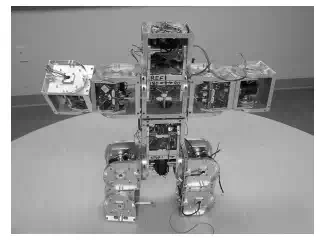

In theory at least, a swarm of tiny robots can get together to create any shape. In reality, robots are constructed with rigid parts and do not shape-shift easily. But an area of robotics research called reconfigurable robotics has been designing robots that have modules or components that can come together in various ways, creating not only different shapes but differently shaped robot bodies that can move around. Such robots have been demonstrated to shape-shift from being like six-legged insects to being like slithering snakes. We say “like” because six-legged insect robots and snakelike robots have been built before, and were much more agile and elegant than these reconfigurable robots. However, they could not shape-shift.

The mechanics of physical reconfiguration are complex, involving wires and connectors and physics, among other issues to consider and overcome. Individual modules require a certain amount of intelligence to know their state and role at various times and in various configurations. In some cases, the modules are all the same (homogeneous), while in others, they are not (heterogeneous), which makes the coordination problem in some ways related to the multi-robot control we learned about in Chapter 20. Reconfigurable robots that can autonomously decide when and how to change their shapes are called self-reconfigurable. Such systems are under active research for navigation in hard-to-reach places, and beyond.

A reconfigurable robot striking (just one) pose

Humanoid Robotics

Some bodies change shape, and others are so complicated that one shape is plenty hard to manage. Humanoid robots fit into that category. As we learned in Chapter 4, parts of the human body have numerous degrees of freedom (DOF), and control of high-DOF systems is very difficult, especially in light of the unavoidable uncertainty found in all robotics. Humanoid control brings together nearly all the challenges we have discussed so far: all aspects of navigation and all aspects of manipulation, along with balance and, as you will see shortly, the intricacies of human-robot interaction.

There is a reason why it takes people so long to learn to walk and talk and be productive, in spite of our large brains; it is very, very hard. Human beings take numerous days, months, and years to acquire skills and knowledge, while robots typically do not have that luxury. Through the development of sophisticated humanoid robots with complex biomimetic sensors and actuators, the field of robotics finally has the opportunity to study humanoid control, and is gaining even more respect for the biological counterpart in the process.

Sarcos humanoid robot

Social Robotics And Human-Robot Interaction

Robotics is moving away from the factory and laboratory, and ever closer to human everyday environments and uses, demanding the development of robots capable of interacting socially with people in a natural way. The rather new field of human-robot interaction (HRI) is faced with a slew of challenges, which include perceiving and understanding human behaviour in real time (Who is that talking to me? What is she saying? Is she happy or sad or giddy or mad? Is she getting closer or moving away?), responding in real time (What should I say? What should I do?), and doing so in a socially appropriate and natural way that engages the human participant.

Humans are naturally social creatures, and robots that interact with us will need to be appropriately social as well if we are to accept them into our lives. When you think about social interaction, you probably immediately think about two types of signals: facial expressions and language. Based on what you know about perception in robotics, you may be wondering how robots will ever become social, given how hard it is for a moving robot to find, much less read, a moving face in real time (recall Chapter 9), or how hard it is for a moving robot to hear and understand speech from a moving source in an environment with ambient noise.

Fortunately, faces and language are not the only ways of interacting socially. Perception for human-robot interaction must be broad enough to include human information processing, which consists of a variety of complex signals. Consider speech, for example: it contains important nuances in its rate, volume level, pitch, and other indicators of personality and feeling. All that is extremely important and useful even before the robot (or person) starts to understand what words are being said, namely before language processing.
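To make the idea of pre-language speech cues concrete, here is a minimal sketch of how a robot might measure two of them, volume and pitch, from raw audio samples. Everything in it is an assumption made for illustration: the function names are hypothetical, the "recordings" are synthetic tones rather than real speech, and counting zero crossings is only a crude stand-in for the pitch-tracking methods a real speech system would use.

```python
import math


def rms_volume(samples):
    """Root-mean-square amplitude: a simple proxy for loudness."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def estimate_pitch_hz(samples, sample_rate):
    """Crude pitch estimate: upward zero crossings per second.

    Only reasonable for clean, nearly periodic signals (an assumption);
    noisy real speech needs a more robust method.
    """
    upward = sum(1 for a, b in zip(samples, samples[1:]) if a < 0 <= b)
    return upward * sample_rate / len(samples)


def make_tone(freq_hz, amplitude, sample_rate, seconds=1.0):
    """Synthetic stand-in for a microphone recording (hypothetical input)."""
    n = int(sample_rate * seconds)
    return [amplitude * math.sin(2 * math.pi * freq_hz * i / sample_rate)
            for i in range(n)]


SAMPLE_RATE = 8000  # samples per second, chosen arbitrarily for the sketch

# Two made-up "utterances": one quiet and low, one loud and high.
calm = make_tone(120, 0.2, SAMPLE_RATE)
excited = make_tone(240, 0.6, SAMPLE_RATE)

for label, clip in (("calm", calm), ("excited", excited)):
    volume = rms_volume(clip)
    pitch = estimate_pitch_hz(clip, SAMPLE_RATE)
    print(f"{label}: volume={volume:.3f}, pitch={pitch:.0f} Hz")
```

Neither number says anything about which words were spoken, yet the louder, higher-pitched clip is easy to tell apart from the quieter, lower one. That is the sense in which such cues are available before language processing begins.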

Another important social indicator is the social use of space, called proxemics: how close one stands, along with body posture and movement (amount, size, direction, etc.), are rich with information about the social interaction. In some cases, the robot may have sensors that provide physiological responses, such as heart rate, blood pressure, body temperature, and galvanic skin response (the conductivity of the skin), which also give very useful cues about the interaction.
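As a rough illustration of how a robot might use proxemics, the sketch below turns a measured robot-to-person distance into a social zone and checks whether the person is getting closer or moving away. The zone boundaries are approximate values loosely based on Edward Hall's proxemic zones and are an assumption, not something specified in this chapter; appropriate distances vary with culture and context. The function names and the tolerance value are likewise hypothetical.

```python
import math

# Upper bounds of each zone in metres (approximate, assumed values).
ZONES = [(0.45, "intimate"), (1.2, "personal"), (3.6, "social")]


def distance(robot_xy, person_xy):
    """Straight-line distance between two (x, y) positions in metres."""
    return math.dist(robot_xy, person_xy)


def proxemic_zone(d):
    """Map a distance in metres to a named social zone."""
    for upper_bound, name in ZONES:
        if d < upper_bound:
            return name
    return "public"


def approach_trend(previous_d, current_d, tolerance=0.05):
    """Classify the change in distance between two consecutive readings.

    Changes smaller than the tolerance (metres) are treated as sensor
    noise, an assumption made for this sketch.
    """
    delta = current_d - previous_d
    if delta < -tolerance:
        return "approaching"
    if delta > tolerance:
        return "retreating"
    return "steady"


# Hypothetical readings: the person walks toward a robot at the origin.
robot = (0.0, 0.0)
person_track = [(3.0, 4.0), (1.5, 2.0), (0.6, 0.8)]

previous = None
for position in person_track:
    d = distance(robot, position)
    trend = "unknown" if previous is None else approach_trend(previous, d)
    print(f"{d:.2f} m -> zone: {proxemic_zone(d)}, trend: {trend}")
    previous = d
```

A real system would also have to estimate the distance in the first place, from vision or range sensors, and would combine it with posture and movement cues rather than rely on distance alone.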

But while measuring the changes in heart rate and body temperature is very useful, the robot still needs to be aware of the person’s face in order to “look” at it. Social gaze, the ability to make eye contact, is critical for normal social interpersonal interaction, so even if the robot can’t understand the facial expression, it needs at least to point its head or camera to the face. Therefore, vision research is doing a great deal of work toward creating robots (and other machines) capable of finding and understanding faces as efficiently and correctly as possible.

And then there is language. The field of natural language processing (NLP) is one of the early components of artificial intelligence (AI), which split off early and has been making great progress. Unfortunately for robotics, the vast majority of success in NLP has been in written language, which is why search engines and data mining are very powerful already, but robots and computers still can’t understand what you are saying unless you say very few words they already know, very slowly and without an accent. Speech processing is a challenging field independent from NLP; the two sometimes work well together, and sometimes not, much the same as has been the case for robotics and AI. Great strides have been made in human speech processing, but largely for speech that can be recorded next to the mouth of the speaker. This works well for telephone systems, which can now detect when you are getting frustrated by endless menus (“If you’d like to learn about our special useless discount, please press 9” and the all-time favourite “Please listen to all the options, as our menu has changed”), by analysing the qualities of emotion in speech we mentioned above. However, it would be better if robot users did not have to wear a microphone to be understood by a robot.

The above just scratches the surface of the many interesting problems in HRI. This brand-new field, which brings together experts with backgrounds not only in robotics but also in psychology, cognitive science, communications, social science, and neuroscience, among others, will be great to watch as it develops.