THERMODYNAMICS

There are four laws of thermodynamics, and they are some of the most important laws in all of physics. The laws are as follows:

· Zeroth law of thermodynamics – If two thermodynamic systems are each in thermal equilibrium with a third, then they are in thermal equilibrium with each other.

· First law of thermodynamics – Energy can neither be created nor destroyed. It can only change forms. In any process, the total energy of the universe remains the same. For a thermodynamic cycle the net heat supplied to the system equals the net work done by the system.

· Second law of thermodynamics – The entropy of an isolated system not in equilibrium will tend to increase over time, approaching a maximum value at equilibrium.

· Third law of thermodynamics – As temperature approaches absolute zero, the entropy of a system approaches a constant minimum.

Before I go over these laws in more detail, it will be easier if I first introduce Entropy.

Entropy is one of the most important ideas in thermodynamics. It’s the core idea behind the second and third laws and shows up all over the place. Essentially, entropy is a measure of the disorder and randomness in a system. Here are 2 examples:

· Let’s say you have a container of gas molecules. If all the molecules are in one corner then this would be a low entropy state (highly organised). As the particles move out and fill up the rest of the container, the entropy (disorder) increases.

· If you have a ball flying through the air then it will start off with its energy organised, i.e. as the kinetic energy of motion. As it moves through the air, however, some of the kinetic energy is distributed to the air particles, so the total entropy of the system has increased (the total energy is still conserved, due to the first law).

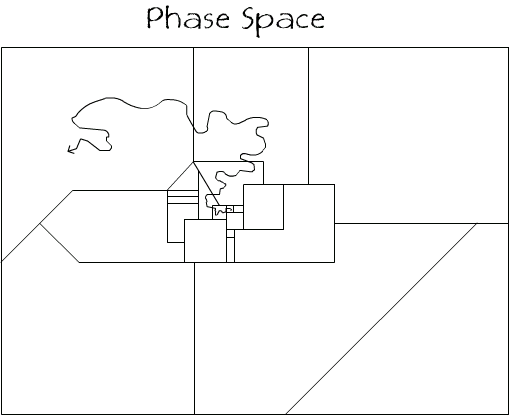

To get a more detailed picture of entropy we need to look at the concept of Phase Space. Some of the concepts for this may be a bit confusing but bear with me; once you’ve got your head round it, it’s not that bad.

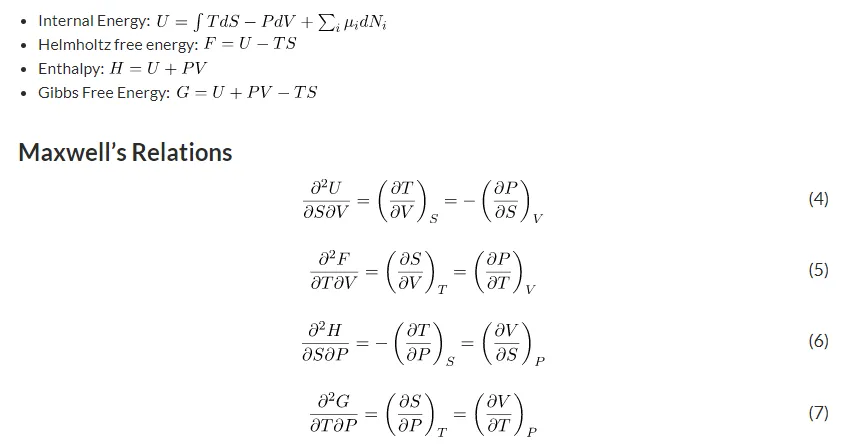

A phase space is just like a graph, but a point on this graph represents the whole state of a system. Let’s use an example. Imagine I have a box with 4 gas particles inside. Each point in the phase space for this system tells you where all 4 particles are located in the box.

In our example we are only interested in the positions of the 4 particles, so each point in phase space must contain an x, y, and z co-ordinate for each particle. Our phase space is therefore 3N dimensional, where N is the number of particles in the system. In our case the phase space is 12 dimensional, so that each point can describe the location of all 4 particles.
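As a rough sketch of this idea, the snippet below packs the positions of 4 particles into a single phase-space point. The function name `phase_space_point` and the example coordinates are made up purely for illustration.

```python
def phase_space_point(positions):
    """Flatten a list of (x, y, z) positions into one phase-space point."""
    point = []
    for x, y, z in positions:
        point.extend([x, y, z])
    return tuple(point)


# Hypothetical positions of 4 particles inside a box (arbitrary units).
particles = [
    (0.1, 0.2, 0.1),
    (0.2, 0.1, 0.1),
    (0.1, 0.1, 0.2),
    (0.2, 0.2, 0.2),
]

point = phase_space_point(particles)
print(len(point))  # 3N coordinates, so 12 for N = 4
```

Moving any one particle changes the tuple, which is the same as moving to a different point in phase space.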

In all the diagrams I will depict the phase space as 2D to make it easier to convey what it actually represents. For our purposes we will not need to consider the dimensions.

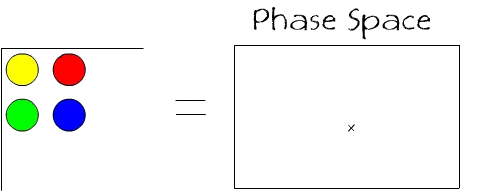

Let’s imagine that each of the particles is a different colour so we can keep track of their positions more easily. If all of the particles are located in one corner of the container then we have the situation

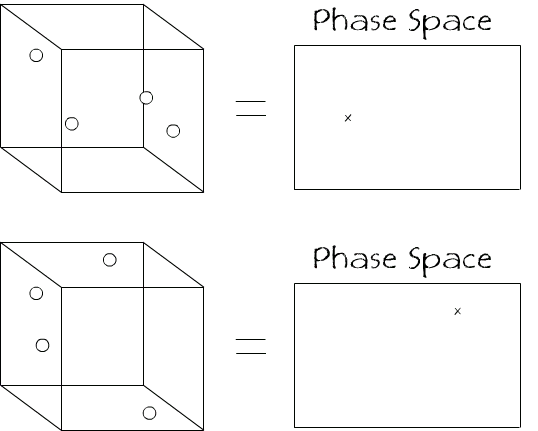

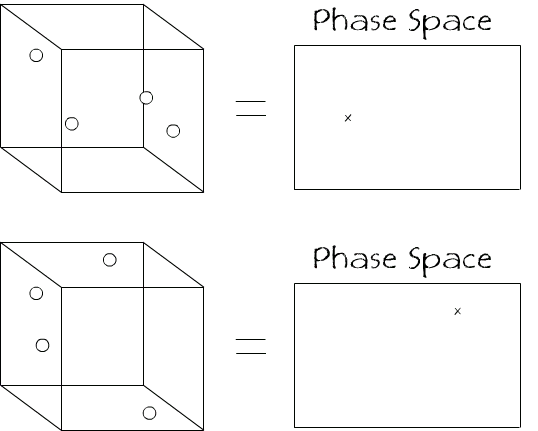

In terms of the system, there are multiple other combinations of the 4 particles that will be as organised as the above state

and so on. Each of these set-ups will correspond to a different position in phase space as they are all different layouts of the system of the 4 particles. If we add these to the phase space along with the original we get something like

These 5 layouts of the 4 particles, along with the 11 other combinations, make up a set of states that are (apart from the colours) indistinguishable. So in the phase space we could put a box around the 16 states that defines all the states inside it as being macroscopically indistinguishable.

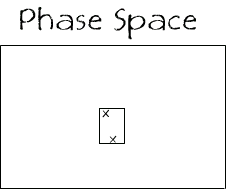

The total phase space of a system will have many regions all of different shapes and sizes and could look like the following

But how is all this abstract representation linked to entropy? Entropy, given in equations as the symbol S, is defined as

$$S = k \ln V$$

where k is Boltzmann’s constant (1.38 × 10⁻²³ J K⁻¹) and V is the volume of the box in phase space. All the points in a region of phase space have the same entropy, and the value of the entropy is related to the logarithm of the volume (originally Boltzmann never put the constant k in the formula as he wasn’t concerned with the units. The insertion of the k seems to have come first from Planck).
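To get a feel for the logarithm in S = k ln V, here is a minimal sketch in Python. The region volumes are hypothetical numbers in arbitrary units, and the names `K_B` and `entropy` are just chosen for this example.

```python
import math

K_B = 1.380649e-23  # Boltzmann's constant in J/K


def entropy(volume):
    """Boltzmann entropy S = k ln V for a phase-space region of the given volume."""
    return K_B * math.log(volume)


# Hypothetical phase-space region volumes (arbitrary units).
small_region = 16.0
large_region = 32.0

# Doubling the volume adds k ln 2 to the entropy, whatever the starting volume.
increase = entropy(large_region) - entropy(small_region)
print(increase)
```

Because of the logarithm, a region has to be enormously bigger before its entropy is noticeably larger.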

Entropy can also be defined through the change that occurs when energy is transferred at a constant temperature:

$$\Delta S = \frac{Q}{T}$$

where ΔS is the change in entropy, Q is the energy or heat, and T is the constant temperature.
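As a quick illustration of ΔS = Q/T, the sketch below works out the entropy change for a made-up case: 1000 J of heat transferred at a constant 300 K. The function name and the numbers are assumptions for the example only.

```python
def entropy_change(heat_joules, temperature_kelvin):
    """Entropy change (J/K) when heat is transferred at constant temperature."""
    if temperature_kelvin <= 0:
        raise ValueError("temperature must be above absolute zero")
    return heat_joules / temperature_kelvin


# Hypothetical values: 1000 J transferred at a constant 300 K.
delta_s = entropy_change(1000.0, 300.0)
print(round(delta_s, 2))  # 3.33 (in J/K)
```

The same amount of heat transferred at a lower temperature gives a bigger entropy change, since T is on the bottom of the fraction.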

The Zeroth law is so named as it came after the other 3. Laws 1, 2, and 3 had been around for a while before the importance of this law had been fully understood. It turned out that this law was so important and fundamental that it had to go before the other 3, and instead of renaming the already well known 3 laws they called the new one the Zeroth law and stuck it at the front of the list.

But what does it actually mean? The law states

“If two thermodynamic systems are each in thermal equilibrium with a third, then they are in thermal equilibrium with each other.”

Basically, if A=B and C=B then A=C. This may seem so obvious that it doesn’t need stating, but without this law we couldn’t define temperature and we couldn’t build thermometers.

The first law of thermodynamics basically states that energy is conserved; it can neither be created nor destroyed, just changed from one form to another:

“The total amount of energy in an isolated system is conserved.”

The energy in a system can be converted to heat or work or other things, but you always have the same total that you started with.

As an analogy, think of energy as indestructible blocks. If you have 30 blocks, then whatever you do to or with the blocks you will always have 30 of them at the end. You can’t destroy them, only move them around or divide them up, but there will always be 30. Sometimes you may lose track of one or more, but they still have to be accounted for because Energy is Conserved.

From the first law we can write that the change in the internal energy, U, of a system is equal to the heat supplied to the system, Q, minus any work done by the system, W:

$$\Delta U = Q - W$$

From the definition of entropy above we can replace Q = TΔS, and we can also make the replacement W = PΔV (the work done by the system when it expands by a volume ΔV against a pressure P), giving us

$$\Delta U = T\,\Delta S - P\,\Delta V$$
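The bookkeeping in the first law, ΔU = Q − W with W = PΔV for an expanding gas, can be sketched in a few lines of Python. The function names and the numbers (500 J of heat, an expansion of 0.001 m³ against 100 000 Pa) are hypothetical and only there to show the signs.

```python
def expansion_work(pressure_pa, volume_change_m3):
    """Work done BY the system when it expands at constant pressure, W = P * dV."""
    return pressure_pa * volume_change_m3


def internal_energy_change(heat_supplied, work_done_by_system):
    """First law: dU = Q - W."""
    return heat_supplied - work_done_by_system


# Hypothetical gas: absorbs 500 J while expanding by 0.001 m^3 against 100 000 Pa.
work = expansion_work(100_000.0, 0.001)        # about 100 J
delta_u = internal_energy_change(500.0, work)  # about 400 J
print(delta_u)
```

Of the 500 J that went in as heat, roughly 100 J left again as work, so the internal energy only went up by about 400 J.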

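Now if we have a system of particles that are different then we may get chemical reactions occurring, so we need to add one more term to take this into account:

$$\Delta U = T\,\Delta S - P\,\Delta V + \sum_i \mu_i\,\Delta N_i$$

where μᵢ is the chemical potential of particle type i and Nᵢ is the number of particles of that type.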

The second law is possibly the most famous (among scientists at least) and most important law in all of science. It states:

“The entropy of the universe tends to a maximum.”

In other words, entropy either stays the same or gets bigger; the entropy of the universe can never go down.

The problem is, this isn’t always true. If you take our example of 4 atoms in a box, then all of them being in one corner is a highly ordered state and so has a low entropy. Over time they’ll move around, becoming more disordered and increasing the entropy. But there is nothing stopping them all randomly moving back to the corner. It’s incredibly unlikely, but not actually impossible.

If you look at the problem in terms of phase space you can see that over time it’s more likely you’ll move into a bigger box, which means higher entropy, but there’s no actual barrier stopping you moving back into a smaller box.
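To put a rough number on "incredibly unlikely", here is a simplified sketch. It assumes each particle is equally likely to be anywhere in the box, independently of the others, and asks for the chance that all of them happen to be in one chosen half of the box at the same moment (a half rather than a corner, to keep the sums easy). The function name is made up for this example.

```python
def probability_all_in_one_half(n_particles):
    """Chance that n independent, uniformly placed particles all sit in one chosen half."""
    return 0.5 ** n_particles


for n in (4, 20, 100):
    print(n, probability_all_in_one_half(n))
```

With 4 particles the chance is 1 in 16, which would happen all the time. With 100 particles it is already less than 1 in 10³⁰, and a real gas has vastly more particles than that, which is why we never see the second law "broken" in everyday life.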

The third law provides an absolute reference point for measuring entropy, saying that

“As the temperature of a system approaches absolute zero (−273.15°C, 0 K), then the value of the entropy approaches a minimum.”

The value of the entropy is usually 0 at 0K, however there are some cases where there is still a small amount of residual entropy in the system.

When you heat something, depending on what it’s made of, it takes a different amount of time to heat up. Assuming that power remains constant, this must mean that some materials require more energy than others to raise their temperature by 1 K (a change of 1 K is the same as a change of 1°C; the two scales just start at different places). If you think about it, this makes sense. A wooden spoon takes a lot longer to heat up than a metal one. We say that metal is a good thermal conductor and wood a poor thermal conductor. The energy required to raise the temperature of 1 kg of a substance by 1 K is called its specific heat capacity. The formula we use to find how much energy is required to raise the temperature of a mass m of a substance by ΔT is:

$$Q = mc\,\Delta T$$

where Q = energy, m = mass, c = specific heat capacity and ΔT = change in temperature.

1a. Laura is cooking her breakfast before work on a Sunday morning (please send your sympathy messages that I had to work on a Sunday here). She doesn’t want to have to do any more washing up than is absolutely necessary, so decides to stir the spaghetti she is cooking with her fork, rather than have to wash a wooden spoon. She leaves the fork in the pan whilst she spreads her toast with margarine and grates some cheese. The stove provides 1000 J of energy to the fork in the time she leaves it unattended. What would be the temperature increase in the fork, assuming half the energy provided is lost to the surroundings, the initial temperature of the fork was 20°C, the mass of the fork is 50 g, and it is made of a material with a specific heat capacity of 460 J kg⁻¹ K⁻¹?

Although I’m fairly sure I read somewhere that trying to work out energy changes in forks first thing in the morning was a symptom of insanity, it’s something I find myself doing from time to time. For this question, we’re going to need the equation Q=mcΔT. This is an equation you’ll probably need a lot, so it’s worth trying to memorize it. It springs up in chemistry too. First things first, we need to rearrange the equation to make ΔT the subject. Once you’ve rearranged it you should get ΔT=Q/(mc). Half of the 1000 J is lost to the surroundings, so only Q = 500 J actually goes into heating the fork. Substituting the values given to us in the question you get:

ΔT = 500/(50 × 10⁻³ × 460)

ΔT ≈ 22 K

So since the initial temperature of the fork was 20°C, the final temperature of the fork would be about 42°C.
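If you want to check this kind of calculation, or try different numbers, the rearranged equation ΔT=Q/(mc) is easy to put into Python. This is only a sketch using the values from the question, with the half of the energy lost to the surroundings taken off before dividing; the variable and function names are my own.

```python
def temperature_rise(energy_joules, mass_kg, specific_heat):
    """Rearranged Q = m c dT, giving dT = Q / (m c)."""
    return energy_joules / (mass_kg * specific_heat)


# Values from the question.
energy_supplied = 1000.0  # J provided by the stove
fraction_lost = 0.5       # half is lost to the surroundings
mass = 50e-3              # 50 g in kg
specific_heat = 460.0     # J per kg per K
initial_temp_c = 20.0     # degrees C

energy_absorbed = energy_supplied * (1 - fraction_lost)  # 500 J reaches the fork
delta_t = temperature_rise(energy_absorbed, mass, specific_heat)
final_temp_c = initial_temp_c + delta_t

print(round(delta_t), round(final_temp_c))  # 22 42
```

Setting `fraction_lost` to 0 shows what would happen if all 1000 J went into the fork: the temperature rise doubles to about 43 K.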