A New Start for Optics; the Rise of Optical Computing (1945–1980)

Information optics has been a recognized branch of optics since the fifties. Historically, however, the origin of optical information processing can be traced back to the knife-edge test introduced by Foucault in 1859. Other early contributors include Abbe, who developed the theory of image formation in the microscope in 1873, and Zernike, who presented the phase contrast filter in 1934. In 1946, Duffieux made a major contribution with the publication of a book on the use of Fourier methods in optics. This book was written in French and later translated into English by Arsenault. The work by Maréchal is another major contribution; in 1953, he demonstrated spatial frequency filtering under coherent illumination.

Optical computing is based on a new way of analyzing optical problems; indeed, the concepts of communications and information theory constitute the basis of optical information processing. In 1952, Elias proposed to analyze optical systems with the tools of communication theory. In a historical paper, Lohmann, the inventor of computer-generated holography, wrote: “In my view, Gabor’s papers were examples of physical optics, and the tools he used in his unsuccessful attempt to kill the twin image were physical tools, such as beam splitters. By contrast, Emmett (Leith) and I considered holography to be an enterprise in optical information processing. In our work, we considered images as information, and we applied notions about carriers from communications and information theory to separate the twin image from the desired one. In other words, our approach represented a paradigm shift from physical optics to optical information processing.”

Holography can be included in the domain of information optics since the two fields are closely related and progressed together. As with holography, invented by Gabor in 1948, the development of optical image processing remained limited until the invention of a coherent source of light, the laser, in 1960.

The history of the early years of optical computing has been published by major actors of the field, for example, by Vander Lugt in 1974 and by Leith in 2000. In his paper, Vander Lugt presents a complete state of the art of coherent optical processing with 151 references. Several books also give a good overview of the state of the art at the time of their publication.

Two periods can be distinguished. Until the early seventies, there was a lot of enthusiasm for this new field of optics and information processing: all the basic inventions were made during this period, and the potential of optics for real-time data processing looked very promising. However, real-life optical processors were rare due to technological problems, particularly with the SLMs. During the seventies, the research became more realistic, with several attempts to build optical processors for real applications, and the competition with computers also became much harder due to their progress.

The Founding Inventions

As soon as the laser was invented in 1960, optical information processing expanded rapidly, and all the major inventions of the field were made before 1970. In the Gabor hologram, the different terms of the reconstruction were all on the same axis, which was a major drawback for display. In 1962, Leith and Upatnieks introduced the off-axis hologram, which allowed the different terms of the reconstruction to be separated and gave remarkable 3D reconstructions. This was the start of the adventure of practical holography.

In 1963, Vander Lugt proposed and demonstrated a technique for synthesizing the complex filter of a coherent optical processor using a Fourier hologram technique. This invention gave the 4-f correlator, with the matched filter or other types of filter, all its power and triggered the research in the domain. In 1966, Weaver and Goodman presented the Joint Transform Correlator (JTC) architecture, which would be widely used as an alternative to the 4-f correlator for pattern recognition.
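The principle of matched filtering in a 4-f correlator can be illustrated numerically. The sketch below is only a one-dimensional digital analogue, not a model of any historical processor: a naive discrete Fourier transform stands in for the Fourier-transforming lenses, the matched filter is taken as the complex conjugate of the reference spectrum, and the function names (`dft`, `matched_filter_correlate`, `locate_peak`) as well as the test signals are assumptions introduced for illustration.

```python
import cmath


def dft(signal, inverse=False):
    """Naive O(N^2) discrete Fourier transform (stand-in for a Fourier lens)."""
    n = len(signal)
    sign = 1 if inverse else -1
    output = []
    for k in range(n):
        total = 0j
        for m, value in enumerate(signal):
            total += value * cmath.exp(sign * 2j * cmath.pi * k * m / n)
        # The inverse transform carries the 1/N normalisation.
        output.append(total / n if inverse else total)
    return output


def matched_filter_correlate(scene, reference):
    """Circular cross-correlation of scene and reference via the Fourier plane."""
    if len(scene) != len(reference):
        raise ValueError("scene and reference must have the same length")
    scene_spectrum = dft(scene)
    # Matched filter: complex conjugate of the reference spectrum.
    matched_filter = [value.conjugate() for value in dft(reference)]
    # Filter plane: multiply the scene spectrum by the filter.
    filtered = [s * h for s, h in zip(scene_spectrum, matched_filter)]
    # Second transform brings the result back to the output plane.
    return [abs(value) for value in dft(filtered, inverse=True)]


def locate_peak(correlation):
    """Index of the brightest point in the correlation plane."""
    return max(range(len(correlation)), key=correlation.__getitem__)


# Hypothetical test signals: a three-sample pattern hidden at offset 5.
reference = [1.0, 2.0, 3.0] + [0.0] * 13
scene = [0.0] * 16
scene[5:8] = [1.0, 2.0, 3.0]

correlation = matched_filter_correlate(scene, reference)
peak_position = locate_peak(correlation)
print("correlation peak at offset", peak_position)
```

The position of the correlation peak gives the location of the pattern in the scene, which is the property that made the matched filter attractive for pattern recognition.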

In 1966, Lohmann revolutionized holography by introducing the first computer-generated hologram (CGH), using a cell-oriented encoding adapted to the limited power of the computers available at that time. This was the start of a new field, and different cell-oriented encodings were developed in the seventies. In 1969, a pure phase-encoded CGH, the kinoform, was also proposed, opening the way to modern diffractive optical elements with a high diffraction efficiency. A review of the state of the art of CGHs in 1978 was written by Lee. Until 1980, CGH encoding methods were limited by the power of the computers.
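The difference between a cell-oriented encoding and a kinoform can be sketched for a single complex sample of the wavefront to be encoded. The code below is a simplified illustration under stated assumptions, not a reproduction of the original algorithms: the cell-oriented variant follows the usual detour-phase idea (aperture size proportional to the amplitude, lateral shift proportional to the phase), the kinoform variant keeps only the phase, and the function names and the optional quantization parameter `levels` are hypothetical.

```python
import cmath
import math


def detour_phase_cell(value, max_amplitude):
    """Encode one complex sample as a binary cell (simplified detour-phase idea).

    Returns (aperture_height, lateral_shift), both as fractions of the cell
    size: the height encodes the amplitude, the shift encodes the phase.
    """
    if max_amplitude <= 0:
        raise ValueError("max_amplitude must be positive")
    height = abs(value) / max_amplitude
    phase = cmath.phase(value) % (2 * math.pi)  # wrap the phase to [0, 2*pi)
    shift = phase / (2 * math.pi)
    return height, shift


def kinoform_phase(value, levels=None):
    """Keep only the phase of the sample; the amplitude is discarded.

    If `levels` is given, the phase is rounded to that many equally spaced
    values, a hypothetical stand-in for a limited number of etch depths.
    """
    phase = cmath.phase(value) % (2 * math.pi)
    if levels is None:
        return phase
    step = 2 * math.pi / levels
    return (round(phase / step) % levels) * step


# Hypothetical sample: amplitude 2, phase of a quarter turn.
sample = 2j
cell = detour_phase_cell(sample, max_amplitude=4.0)
pure_phase = kinoform_phase(sample, levels=4)
print("cell (height, shift):", cell)
print("kinoform phase in radians:", pure_phase)
```

Since the kinoform modulates only the phase, no light is absorbed by the element itself, which is consistent with the high diffraction efficiency mentioned above; the price is that the amplitude information is lost.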

Character recognition by incoherent spatial filtering was introduced in 1965 by Armitage and Lohmann, and by Lohmann. A review of incoherent optical processing was written by Rhodes and Sawchuk in 1982.

The Early Spatial Light Modulators

As already emphasized, a practical real-time optical processor can be constructed only if the SLM makes it possible to control the amplitude and phase modulation of the input plane or of the filter plane (in the case of a 4-f correlator). A very large number of SLMs have been investigated over the years, using almost all possible physical properties of matter to modulate light, but only a few of them have survived and are now industrial products. A review of the SLMs available in 1974 can be found in chapter 5 of the book written by Preston.

From the beginning, SLMs have primarily been developed for large-screen display. Some of the early SLMs were based on the Pockels effect, and two devices were very promising. The Phototitus optical converter, with a DKDP crystal, was used for large-screen color display and also for optical pattern recognition; however, the crystal had to work at the Curie point (0°C). The Pockels Readout Optical Modulator (PROM) was used for optical processing, but it was limited as it required blue light for writing and red light for readout. All these devices have disappeared, killed by their limitations.

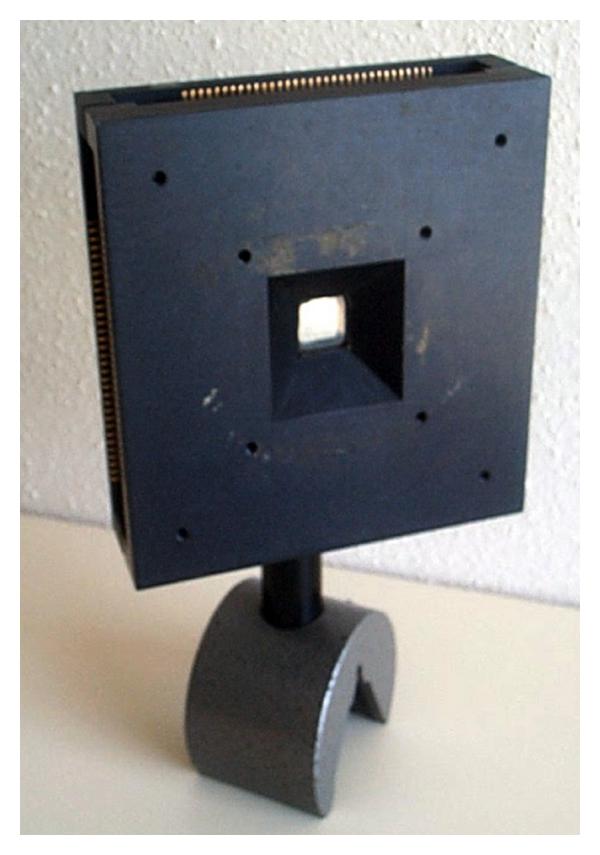

Liquid crystal technology has survived and is today the most widely used technology for SLMs. The first liquid crystal SLMs were developed in the late sixties, for example, an electrically addressed liquid crystal matrix of elements. The matrix size then increased, and in 1975 a matrix-addressed SLM was constructed by LETI in France. This device used a nematic liquid crystal and had a pixel pitch of 300 μm and a cell thickness of 8 μm; the addressing signals were 120 V root mean square (RMS) and 10 V RMS (Figure 4). In 1978, Hughes Corp. developed a very successful optically addressed liquid crystal SLM, or light valve, that was used in optical processors for more than 10 years [48–50]. However, this SLM was very expensive, it had a resolution of 40 line pairs per millimeter, and its speed was limited to the video rate. Moreover, since it was optically addressed, it had to be coupled to a cathode ray tube (CRT), which made it particularly bulky if the input signal was electrical (Figure 5).

Figure 4

Electrically addressed liquid crystal SLM constructed by LETI (France) in 1975.

Figure 5

Optically addressed liquid crystal light valve manufactured by Hughes Corp. in 1978. The photo shows the light valve coupled to a CRT.

Other types of SLMs were developed in the seventies, such as deformable membrane SLMs, which were presented by Knight in a critical review of the SLMs existing in 1980. He pointed out that, due to their limitations at the time, they had only limited use in coherent optical processing applications. However, this SLM technology is still used today for adaptive optics.

In conclusion, at the end of the seventies, despite all the research effort, no SLM really suited for real-time optical information processing was available.

A Successful Application: Radar Signal Optical Processing

The first coherent optical processor was dedicated to the processing of synthetic aperture radar (SAR) data. This first major research effort in coherent optical processing was initiated in 1953 at the University of Michigan under contracts with the US Army and the US Air Force. The processor evolved successfully through several improved versions until the beginning of the seventies. The book by Preston and the reference paper by Leith give the reader an overview of the subject. Since the first processor was designed before the invention of the laser, it was powered by light generated by a mercury lamp. The system did not operate in real time: the input consisted of a film transport carrying a photographic recording of the incoming radar signals, the processing was carried out by a conical lens, and the output was a film. At the beginning of the seventies, digital computers became able to compete successfully with coherent optical computers for SAR applications; they finally won, and this was sadly the end of radar signal optical processing.

The Future of Optical Information Processing as Seen in 1962

In order to understand the evolution of optical computing, it is enlightening to look at the topics discussed in the early sixties. For example, in October 1962, a “Symposium on Optical Processing of Information” was held in Washington DC, cosponsored by the Information System Branch of the Office of Naval Research and the American Optical Company. About 425 scientists attended this meeting, and proceedings were published. The preface of the proceedings shows that the purpose of this symposium was to bring together researchers from the fields of optics and information processing. The authors of the preface recognize that optics can be used for special-purpose optical processors in the fields of pattern recognition, character recognition, and information retrieval, since optical systems offer in these cases the ability to process many items in parallel. The authors continue with the question of a general-purpose computer. They write: “Until recently, however, serious consideration has not been given to the possibility of developing a general-purpose optical computer. With the discovery and application of new optical effects and phenomena such as laser research and fiber optics, it became apparent that optics might contribute significantly to the development of a new class of high-speed general-purpose digital computers”. It is interesting to list the topics of the symposium: optical effects (spatial filtering, laser, fiber optics, modulation and control, detection, electroluminescent, and photoconductive) and data processing (needs, biological systems, bionic systems, photographic, logical systems, optical storage systems, and pattern recognition). It can be noted that one of the speakers, Teager from MIT, pointed out that, in his view, the development of an optical general-purpose computer was highly premature because optical technology was not ready to compete with electronic computers. For him, optical computers would take a different form from electronic computers; they would be more parallel. It is interesting to see that this debate is now almost closed: today, 47 years after this meeting, it is widely accepted that a general-purpose, purely optical processor will not exist, and that the solution is to associate electronics and optics and to use optics only where it can bring something that electronics cannot do.

Optical Memory and the Memory of the Electronic Computers

It is useful to place the research on optical memories in the context of the memories available in the sixties. At that time, the central memory of computers was a core memory, and compact memory cells were available using this technology. However, the possible evolution of this technology was limited, and the memory capacity was low. For example, the Apollo Guidance Computer (AGC), introduced in 1966 and used in the lunar module that landed on the moon, had a memory of 2048 words of RAM (magnetic core memory) and 36864 words of ROM (core rope memory), with 16-bit words. In October 1970, Intel launched the first DRAM (Dynamic Random Access Memory), the Intel 1103 circuit. This chip had a capacity of one Kbit and used P-channel silicon gate MOS technology, with a maximum access time of 300 ns and a minimum write time of 580 ns. This chip killed the core memory.

Compared to the memories that were available, optical memories had two attractive features: a potentially high density and the possibility of parallel access. As early as 1963, van Heerden developed the theory of optical storage in 3D materials. In 1968, Bell Labs constructed the first holographic memory, with a holographic matrix of 32 by 32 pages of 64 by 64 bits each. In 1974, d'Auria et al. from Thomson-CSF constructed the first complete 3D optical memory system, storing the information in a photorefractive crystal with angular multiplexing and achieving the storage of 10 pages of 10^4 bits. Holographic memories using films were also developed in the US. Synthetic holography was applied to the recording and storage of digital data in the Human Read/Machine Read (HRMR) system developed by Harris Corporation in 1973 for the Rome Air Development Center.
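To put these figures side by side, the capacities quoted above can be converted to bits. The short sketch below uses only the numbers given in the text; it assumes that "one Kbit" means 1024 bits and takes the 16-bit word length of the AGC as stated, and the helper name `capacity_bits` is introduced here for illustration.

```python
def capacity_bits(units, bits_per_unit):
    """Total capacity in bits for `units` storage units of `bits_per_unit` bits."""
    return units * bits_per_unit


capacities = {
    # Apollo Guidance Computer, 16-bit words as stated in the text.
    "AGC RAM (magnetic core)": capacity_bits(2048, 16),
    "AGC ROM (core rope)": capacity_bits(36864, 16),
    # Assumption: one Kbit is taken as 1024 bits.
    "Intel 1103 DRAM (1970)": capacity_bits(1, 1024),
    # 32 x 32 pages of 64 x 64 bits each.
    "Bell Labs holographic memory (1968)": capacity_bits(32 * 32, 64 * 64),
}

for name, bits in capacities.items():
    print(f"{name}: {bits} bits ({bits / 8 / 1024:.1f} KiB)")

holographic = capacities["Bell Labs holographic memory (1968)"]
ratio_to_agc_ram = holographic // capacities["AGC RAM (magnetic core)"]
ratio_to_dram_chip = holographic // capacities["Intel 1103 DRAM (1970)"]
print("holographic memory / AGC RAM:", ratio_to_agc_ram)
print("holographic memory / one 1103 chip:", ratio_to_dram_chip)
```

With these assumptions, the 1968 holographic matrix holds about 4 Mbit, that is, 128 times the RAM of the AGC and the equivalent of 4096 of the DRAM chips introduced two years later, which helps explain the interest in optical memories at the time.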

Optical Fourier Transform Processors, Optical Pattern Recognition

Optical pattern recognition was from the beginning a prime choice for optical processing since it fully exploits the parallelism of optics and the Fourier transform properties. The book edited by Stark in 1982 gives a complete overview of the state of the art of the applications of optical Fourier transforms. It shows that coherent or incoherent, space-invariant or space-variant, and linear or nonlinear architectures were used for different applications. Hybrid optical/digital processors emerged as a solution for practical implementation and for solving real problems of data processing and pattern recognition. For example, Casasent, who was very active in this field, wrote a detailed book chapter [58] with a complete review of hybrid processors at the beginning of the eighties. All these processors gave very promising results. However, almost all the proposed processors remained laboratory prototypes and never had a chance to replace electronic processors, even though electronics and computers were much less powerful at that time. There are many reasons for this: the number of applications that could benefit from the speed of optical processors was perhaps not large enough, but the main reason was the absence of powerful, high-speed, high-quality, and affordable SLMs, for the input plane of the processor as well as for the filter plane. Optical processors are also less flexible than digital computers, which allow a larger number of data manipulations to be carried out very easily.