Multimedia Authoring

Multimedia authoring is the creation of multimedia productions, sometimes called "movies" or "presentations". Since we are interested in this subject from a computer science point of view, we are mostly interested in interactive applications. Also, we need to consider still-image editors, such as Adobe Photoshop, and simple video editors, such as Adobe Premiere, because these applications help us create interactive multimedia projects.

How much interaction is necessary or meaningful depends on the application. The spectrum runs from almost no interactivity, as in a slide show, to full-immersion virtual reality.

In a slide show, interactivity generally consists of being able to control the pace (e.g., click to advance to the next slide). The next level of interactivity is being able to control the sequence and choose where to go next. Next is media control: start/stop video, search text, scroll the view, zoom. More control is available if we can control variables, such as changing a database search query.

The level of control is substantially higher if we can control objects — say, moving objects around a screen, playing interactive games, and so on. Finally, we can control an entire simulation: move our perspective in the scene, control scene objects. For some time, people have indeed considered what should go into a multimedia project; references are given at the end of this chapter.

In this section, we shall look at

1. Multimedia authoring metaphors

2. Multimedia production

3. Multimedia presentation

4. Automatic authoring

The final item deals with general authoring issues and with the benefits that automated tools (using, for example, artificial intelligence techniques) can bring to the authoring task. As a first step, we consider programs that carry out automatic linking for legacy documents.

After an introduction to multimedia paradigms, we present some of the practical tools of multimedia content production — software tools that form the arsenal of multimedia production. Here we go through the nuts and bolts of a number of standard programs currently in use.

Multimedia Authoring Metaphors

Authoring is the process of creating multimedia applications. Most authoring programs use one of several authoring metaphors, also known as authoring paradigms: metaphors for easier understanding of the methodology employed to create multimedia applications. Some common authoring metaphors are as follows:

· Scripting language metaphor

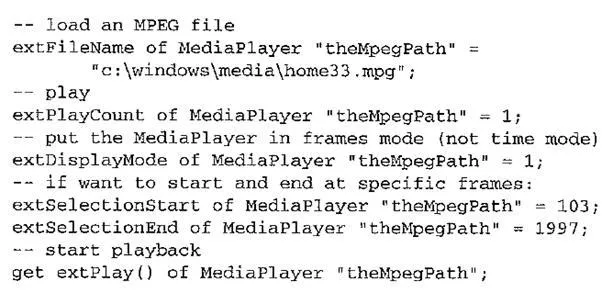

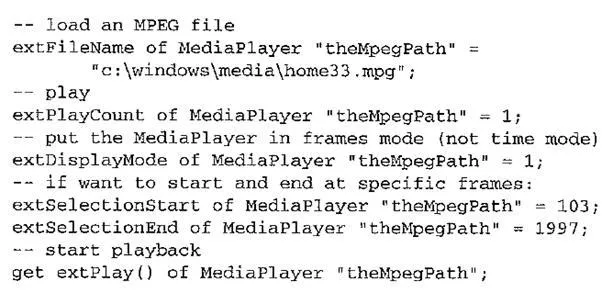

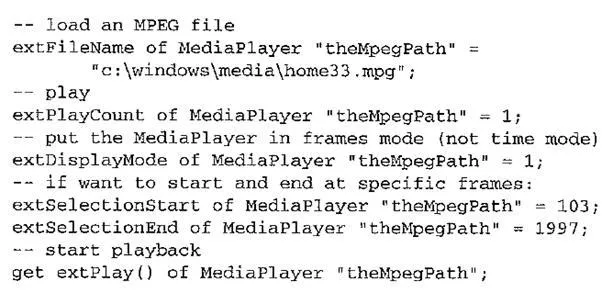

The idea here is to use a special language to enable interactivity (buttons, mouse, etc.) and to allow conditionals, jumps, loops, functions/macros, and so on. An example is the OpenScript language in Asymetrix Learning Systems' Toolbook program. OpenScript looks like a standard object-oriented, event-driven programming language. For example, a small Toolbook program is shown below. Such a language has a learning curve associated with it, as do all authoring tools (even those that use the standard C programming language as their scripting language), because of the object libraries that must be learned.

· Slide show metaphor

Slide shows are by default a linear presentation. Although tools exist to perform jumps in slide shows, few practitioners use them. Example programs are PowerPoint or ImageQ.

· Hierarchical metaphor

Here, user-controllable elements are organized into a tree structure. Such a metaphor is often used in menu-driven applications.

· Iconic/flow-control metaphor

Graphical icons are available in a toolbox, and authoring proceeds by creating a flowchart with icons attached. The standard example of such a metaphor is Authorware, by Macromedia. The following figure shows an example flowchart. As well as simple flowchart elements, such as an IF statement, a CASE statement, and so on, we can group elements using a Map (i.e., a subroutine) icon. With little effort, simple animation is also possible.

Authorware flowchart

· Frames metaphor

As in the iconic/flow-control metaphor, graphical icons are again available in a toolbox, and authoring proceeds by creating a flowchart with icons attached. However, rather than representing the actual flow of the program, links between icons are more conceptual. Therefore, "frames" of icon designs represent more abstraction than in the simpler iconic/flow-control metaphor. An example of such a program is Quest, by Allen Communication.

The flowchart consists of "modules" composed of "frames". Frames are constructed from objects, such as text, graphics, audio, animations, and video, all of which can respond to events. A real benefit is that the scripting language here is the widely used programming language C. The following figure shows a Quest frame.

Quest frame

· Card/scripting metaphor

This metaphor uses a simple index card structure to produce multimedia productions. Since links are available, this is an easy route to producing applications that use hypertext or hypermedia. The original example of this metaphor was HyperCard by Apple. Another example is HyperStudio by Knowledge Adventure. The latter program is now used in many schools.

· Cast/score/scripting metaphor

In this metaphor, time is shown horizontally in a type of spreadsheet fashion, where rows, or tracks, represent instantiations of characters in a multimedia production. Since these tracks control synchronous behavior, this metaphor somewhat parallels a music score. Multimedia elements are drawn from a "cast" of characters, and "scripts" are basically event procedures or procedures triggered by timer events. Usually, you can write your own scripts. In a sense, this is similar to the conventional use of the term "scripting language": one that is concise and invokes lower-level abstractions, since that is just what one's own scripts do. Director, by Macromedia, is the chief example of this metaphor. Director uses the Lingo scripting language, an object-oriented, event-driven language.
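The cast/score/scripting idea can be sketched in a few lines of Python. This is a minimal illustration, assuming a score is simply a list of sprites, each placing one cast member in a numbered channel (track) for a span of frames, with scripts stored as event handlers keyed by event name. The class names, event names, and handler signature are hypothetical; they do not reproduce Director's or Lingo's actual object model.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Sprite:
    """A cast member placed in one channel (track) of the score for a span of frames."""
    channel: int
    cast_member: str
    start_frame: int
    end_frame: int  # inclusive
    # Scripts: event name -> procedure called with the current frame number.
    scripts: Dict[str, Callable[[int], str]] = field(default_factory=dict)

    def active(self, frame: int) -> bool:
        return self.start_frame <= frame <= self.end_frame


class Score:
    """Time runs horizontally (frames); channels are the rows of the 'spreadsheet'."""

    def __init__(self, sprites: List[Sprite]):
        # Keep sprites ordered by channel, like the rows of the score.
        self.sprites = sorted(sprites, key=lambda s: s.channel)

    def frame_contents(self, frame: int) -> List[str]:
        """Cast members on stage at this frame, in channel order."""
        return [s.cast_member for s in self.sprites if s.active(frame)]

    def dispatch(self, event: str, frame: int) -> List[str]:
        """Run the script for `event` on every sprite that is active at this frame."""
        results = []
        for s in self.sprites:
            handler = s.scripts.get(event)
            if handler is not None and s.active(frame):
                results.append(handler(frame))
        return results


score = Score([
    Sprite(1, "savanna backdrop", 1, 30),
    Sprite(2, "giraffe animation", 10, 30),
    Sprite(3, "home button", 1, 30,
           {"mouseUp": lambda f: f"jump to menu (clicked at frame {f})"}),
])
print(score.frame_contents(5))        # ['savanna backdrop', 'home button']
print(score.dispatch("mouseUp", 12))  # ['jump to menu (clicked at frame 12)']
```

Keeping the score (what is on stage when) separate from the scripts (what happens on an event) mirrors the split the metaphor draws between tracks and event procedures.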

Multimedia Production

A multimedia project can involve a host of people with specialized skills. In this book we focus on more technical aspects, but multimedia production can easily involve an art director, graphic designer, production artist, producer, project manager, writer, user interface designer, sound designer, videographer, and 3D and 2D animators, as well as programmers.

Two cards in a HyperStudio stack

The production timeline would likely involve programming only when the project is about 40% complete, with a reasonable target for an alpha version (an early version that does not contain all planned features) being perhaps 65 to 70% complete. Typically, the design phase consists of storyboarding, flowcharting, prototyping, and user testing, as well as a parallel production of media. Programming and debugging phases would be carried out in consultation with marketing, and the distribution phase would follow.

A storyboard depicts the initial ideas of a multimedia concept in a series of sketches. These are like "keyframes" in a video: the story hangs from these "stopping places". A flowchart organizes the storyboards by inserting navigation information, that is, the multimedia concept's structure and user interaction. The most reliable approach for planning navigation is to pick a traditional data structure. A hierarchical system is perhaps one of the simplest organizational strategies.

Multimedia is not really like other presentations, in that careful thought must be given to organization of movement between the "rooms" in the production. For example, suppose we are navigating an African safari, but we also need to bring specimens back to our museum for close examination. Just how do we effect the transition from one locale to the other? A flowchart helps us imagine the solution.
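A flowchart of this kind is essentially a directed graph: rooms are nodes and navigation links are edges, so standard graph checks can catch navigation mistakes before production begins. The Python sketch below assumes the flowchart is stored as a dictionary mapping each node to the nodes a user can jump to; the node names echo the safari example and are purely illustrative. It reports nodes that can never be reached from the start node and nodes with no way out.

```python
from collections import deque
from typing import Dict, List, Set, Tuple


def reachable(flowchart: Dict[str, List[str]], start: str) -> Set[str]:
    """Breadth-first search: every node a user can navigate to from `start`."""
    seen = {start}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for nxt in flowchart.get(node, []):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return seen


def check_navigation(flowchart: Dict[str, List[str]], start: str) -> Tuple[List[str], List[str]]:
    """Return (unreachable nodes, dead-end nodes with no outgoing links)."""
    nodes = set(flowchart) | {n for targets in flowchart.values() for n in targets}
    unreachable = sorted(nodes - reachable(flowchart, start))
    dead_ends = sorted(n for n in nodes if not flowchart.get(n))
    return unreachable, dead_ends


safari = {
    "menu": ["safari", "museum"],
    "safari": ["savanna", "menu"],
    "savanna": ["museum", "safari"],  # carry a specimen back to the museum
    "museum": ["menu"],
    "credits": [],                    # forgotten screen: no links in or out
}
print(check_navigation(safari, "menu"))  # (['credits'], ['credits'])
```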

The flowchart phase is followed by development of a detailed functional specification. This consists of a walk-through of each scenario of the presentation, frame by frame, including all screen action and user interaction. For example, during a mouseover for a character, the character reacts, or a user clicking on a character results in an action.

The final part of the design phase is prototyping and testing. Some multimedia designers use an authoring tool at this stage already, even if the intermediate prototype will not be used in the final product or continued in another tool. User testing is, of course, extremely important before the final development phase.

Multimedia Presentation

In this section, we briefly outline some effects to keep in mind for presenting multimedia content as well as some useful guidelines for content design.

Graphics Styles Careful thought has gone into combinations of color schemes and how lettering is perceived in a presentation. Many presentations are meant for business displays, rather than appearing on a screen. Human visual dynamics are considered in regard to how such presentations must be constructed.

Color Principles and Guidelines Some color schemes and art styles are best combined with a certain theme or style. Color schemes could be, for example, natural and floral for outdoor scenes and solid colors for indoor scenes. Examples of art styles are oil paints, watercolors, colored pencils, and pastels.

A general hint is to not use too many colors, as this can be distracting. It helps to be consistent with the use of color — then color can be used to signal changes in theme.

Fonts For effective visual communication, large fonts (18 to 36 points) are best, with no more than six to eight lines per screen. Sans serif fonts work better than serif fonts (serif fonts are those with short lines stemming from and at an angle to the upper and lower ends of a letter's strokes).

The top figure shows good use of color and fonts. It has a consistent color scheme and uses large, all sans-serif (Arial) fonts. The bottom figure is poor, in that too many colors are used, and they are inconsistent. The red adjacent to the blue is hard to focus on, because the human retina cannot focus on these colors simultaneously. The serif (Times New Roman) font is said to be hard to read in a darkened projection setting. Finally, the lower right panel does not have enough contrast: pretty pastel colors are often usable only if their background is sufficiently different.

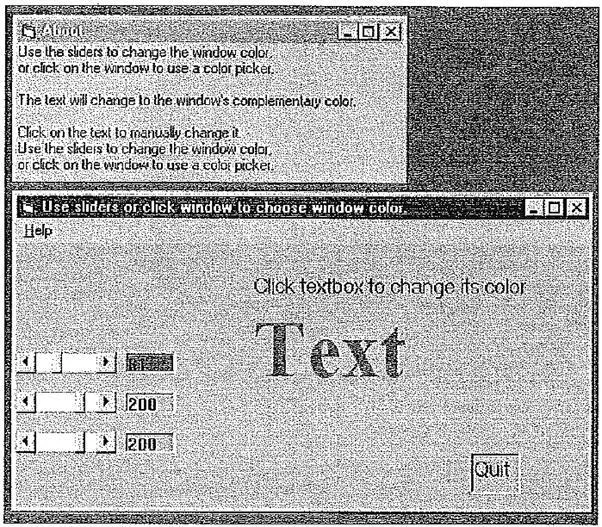

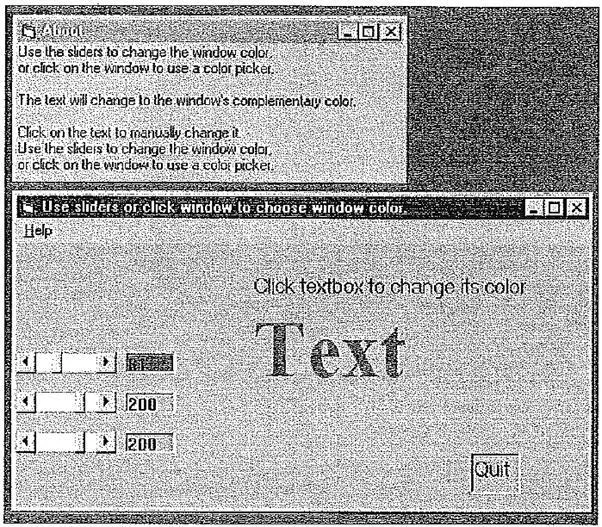

A Color Contrast Program Seeing the results of Vetter et al.'s research, we constructed a small Visual Basic program to investigate how readability of text colors depends on color and the color of the background (see the Further Exploration section at the end of this chapter for a pointer to this program on the text web site. There, both the executable and the program source are given).

The simplest approach to making readable colors on a screen is to use the principal complementary color as the background for text. For color values in the range 0 to 1 (or, effectively, 0 to 255), if the text color is some triple (R, G, B), a legible color for the background is likely given by that color subtracted from the maximum:

$$(R, G, B) \;\Rightarrow\; (1 - R,\ 1 - G,\ 1 - B)$$

That is, not only is the color "opposite" in some sense (not the same sense as artists use), but if the text is bright, the background is dark, and vice versa.
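The principal complementary color is a one-line computation. The Python function below is a sketch of that rule only, not the Visual Basic program mentioned above; it assumes integer components in 0 to 255 by default and accepts a different maximum (such as 1.0) for normalized colors.

```python
from typing import Tuple

RGB = Tuple[float, float, float]


def complementary_background(text_rgb: RGB, max_value: float = 255) -> RGB:
    """Principal complementary color: each component subtracted from the maximum."""
    r, g, b = text_rgb
    return (max_value - r, max_value - g, max_value - b)


print(complementary_background((255, 255, 0)))           # (0, 0, 255): yellow text, blue background
print(complementary_background((0.25, 0.5, 1.0), 1.0))  # (0.75, 0.5, 0.0)
```

Because each component is reflected about the midpoint of its range, bright text maps to a dark background and vice versa. Colors near the midpoint are the weak spot: mid-gray (128, 128, 128) maps to (127, 127, 127), which is nearly identical, so the rule alone does not guarantee legible contrast.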

In the Visual Basic program given, sliders can be used to change the background color. As the background changes, the text changes to equal the principal complementary color. Clicking on the background brings up a color - picker as an alternative to the sliders.

If you feel you can choose a better color combination, click on the text. This brings up a color picker not tied to the background color, so you can experiment. (The text itself can also be edited.) A little experimentation shows that some color combinations are more pleasing than others, for example, a pink background and forest green foreground, or a green background and mauve foreground. An artist's color wheel will not look the same, as it is based on feel rather than on an algorithm. In the traditional artist's wheel, for example, yellow is opposite magenta instead of opposite blue, and blue is instead opposite orange.

Program to investigate colors and readability

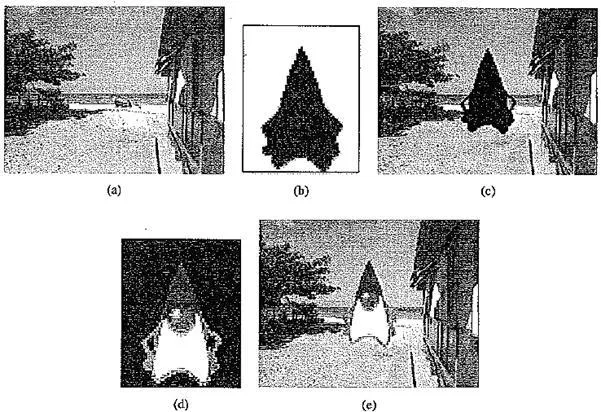

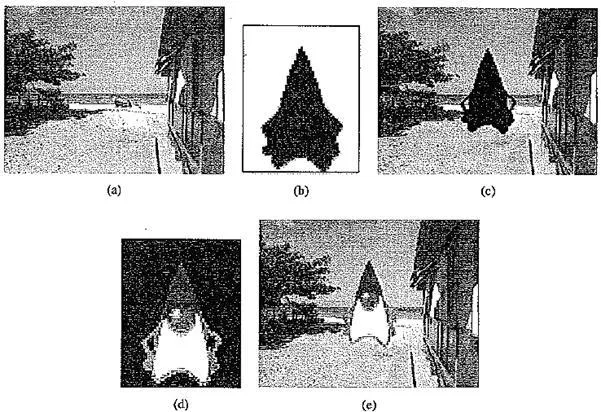

Sprite Animation Sprites are often used in animation. For example, in Macromedia Director, the notion of a sprite is expanded to an instantiation of any resource. However, the basic idea of sprite animation is simple.

Suppose we have a sprite S, drawn on a black background, together with a 1-bit mask M that is black where the sprite is and white everywhere else. Now we can overlay the sprite on a colored background B by first ANDing B and M, then ORing the result with S, giving the final result B AND M OR S. Operations are available to carry out these simple compositing manipulations at frame rate and so produce a simple 2D animation that moves the sprite around the frame but does not change the way it looks.
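The compositing step can be sketched with bitwise operations on pixel values. This Python version assumes 24-bit RGB colors packed into integers (0xRRGGBB), a mask M that is all ones (white) outside the sprite and zero (black) inside it, and a sprite frame S that is zero everywhere except the sprite itself. The helper `place` and the use of None for transparent sprite pixels are hypothetical conventions chosen for the illustration.

```python
from typing import List, Optional

Frame = List[List[int]]  # rows of packed 24-bit RGB values, 0xRRGGBB

WHITE = 0xFFFFFF  # mask value that keeps the background pixel (all bits set)
BLACK = 0x000000  # mask value that clears the background; also an "empty" sprite pixel


def composite(background: Frame, mask: Frame, sprite: Frame) -> Frame:
    """(B AND M) OR S, pixel by pixel."""
    return [
        [(b & m) | s for b, m, s in zip(b_row, m_row, s_row)]
        for b_row, m_row, s_row in zip(background, mask, sprite)
    ]


def place(shape: List[List[Optional[int]]], width: int, height: int, x0: int, y0: int):
    """Build a full-frame mask M and sprite S with the shape's top-left corner at (x0, y0).

    `shape` holds the sprite's pixel colors; None marks a transparent pixel.
    """
    mask = [[WHITE] * width for _ in range(height)]
    sprite = [[BLACK] * width for _ in range(height)]
    for j, row in enumerate(shape):
        for i, color in enumerate(row):
            x, y = x0 + i, y0 + j
            if color is not None and 0 <= x < width and 0 <= y < height:
                mask[y][x] = BLACK    # punch a hole in the background
                sprite[y][x] = color  # fill the hole with the sprite's color
    return mask, sprite


background = [[0x336699] * 4 for _ in range(2)]  # 4 x 2 frame of one blue-gray color
shape = [[0xFF0000, None]]                        # one red pixel, one transparent pixel
frames = []
for x0 in range(3):                               # move the sprite one column per frame
    mask, sprite = place(shape, 4, 2, x0, 0)
    frames.append(composite(background, mask, sprite))
print([hex(p) for p in frames[1][0]])  # ['0x336699', '0xff0000', '0x336699', '0x336699']
```

Rebuilding M and S at a new position for each frame, as in the loop, is what moves the sprite without changing its appearance; the transparent pixel leaves the background untouched because its mask entry stays white.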

Color wheel. (This figure also appears in the color insert section.)

Video Transitions Video transitions can be an effective way to indicate a change to the next section. Video transitions are syntactic means to signal "scene changes" and often carry semantic meaning. Many different types of transitions exist; the main types are cuts, wipes, dissolves, fade-ins, and fade-outs.

A cut, as the name suggests, carries out an abrupt change of image contents in two consecutive video frames from their respective clips. It is the simplest and most frequently used video transition.

A wipe is a replacement of the pixels in a region of the viewport with those from another video. If the boundary line between the two videos moves slowly across the screen, the second video gradually replaces the first. Wipes can be left-to-right, right-to-left, vertical, horizontal, like an iris opening, swept out like the hands of a clock, and so on.
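A left-to-right wipe can be sketched per frame in Python, assuming each frame is a list of rows of pixel values and that the boundary moves linearly from the left edge at t = 0 to the right edge at t = t_max. The function name and the linear timing are illustrative choices. Other wipe shapes (vertical, iris, clock) differ only in the test that decides whether a pixel lies on the new video's side of the boundary.

```python
from typing import List, Sequence, TypeVar

P = TypeVar("P")


def wipe_frame(frame_a: Sequence[Sequence[P]], frame_b: Sequence[Sequence[P]],
               t: float, t_max: float) -> List[List[P]]:
    """Left-to-right wipe: columns left of the boundary show video B, the rest video A."""
    width = len(frame_a[0])
    # Boundary column moves linearly from 0 (t = 0) to width (t = t_max).
    boundary = round(width * t / t_max)
    return [
        [pb if x < boundary else pa for x, (pa, pb) in enumerate(zip(row_a, row_b))]
        for row_a, row_b in zip(frame_a, frame_b)
    ]


a = [["a"] * 4 for _ in range(2)]
b = [["b"] * 4 for _ in range(2)]
print(wipe_frame(a, b, 1, 4)[0])  # ['b', 'a', 'a', 'a']
```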

Sprite animation: (a) Background B (b) Mask M; (c) B AND M (d) Sprite S; (e) B AND M OR S

A dissolve replaces every pixel with a mixture over time of the two videos, gradually changing the first to the second. A fade-out is the replacement of a video by black (or white), and fade-in is its reverse. Most dissolves can be classified into two types, corresponding, for example, to cross dissolve and dither dissolve in Adobe Premiere video editing software.

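In Type I (cross dissolve), every pixel is affected gradually. It can be defined by

$$\mathbf{D} = (1 - \alpha(t))\,\mathbf{A} + \alpha(t)\,\mathbf{B}$$

where $\mathbf{A}$ and $\mathbf{B}$ are the color 3-vectors for video A and video B, and $\mathbf{D}$ is the resulting color 3-vector in the viewport. Here, $\alpha(t)$ is a transition function, which is often linear with time $t$:

$$\alpha(t) = k\,t, \quad \text{with } k\,t_{\max} \equiv 1$$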
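The Type I formula translates directly into code. The Python sketch below assumes colors are RGB 3-tuples and uses the linear transition function, clamped to [0, 1] so that times outside the transition are handled gracefully (the clamping is an added assumption). A fade-out is the special case in which video B is black.

```python
from typing import Tuple

Color = Tuple[float, float, float]


def alpha_linear(t: float, t_max: float) -> float:
    """Linear transition function alpha(t) = k*t with k*t_max = 1, clamped to [0, 1]."""
    return min(max(t / t_max, 0.0), 1.0)


def cross_dissolve_pixel(a: Color, b: Color, alpha: float) -> Color:
    """D = (1 - alpha) * A + alpha * B, applied to each of the three components."""
    return tuple((1 - alpha) * ca + alpha * cb for ca, cb in zip(a, b))


def cross_dissolve_frame(frame_a, frame_b, t, t_max):
    """Type I dissolve: every pixel moves gradually from video A toward video B."""
    alpha = alpha_linear(t, t_max)
    return [[cross_dissolve_pixel(pa, pb, alpha) for pa, pb in zip(row_a, row_b)]
            for row_a, row_b in zip(frame_a, frame_b)]


BLACK = (0.0, 0.0, 0.0)
# Fade-out: video B is black, so a quarter of the way through the pixel dims by 25%.
print(cross_dissolve_pixel((200, 200, 200), BLACK, alpha_linear(1, 4)))  # (150.0, 150.0, 150.0)
```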

Type II (dither dissolve) is entirely different. Determined by $\alpha(t)$, more and more pixels in video A will abruptly (instead of gradually, as in Type I) change to video B. The positions of the pixels subjected to the change can be random or sometimes follow a particular pattern.
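A Type II dissolve needs one extra ingredient: an order in which pixels switch. In this Python sketch the order is a random permutation of pixel positions, fixed once per transition with a seeded generator (an assumption made so that a pixel, once switched, stays switched); at transition fraction alpha, the first alpha × N positions show video B. Supplying a geometric order instead, such as positions sorted by column, turns the same function into essentially a left-to-right wipe.

```python
import random
from typing import List, Tuple


def dither_order(width: int, height: int, seed: int = 0) -> List[Tuple[int, int]]:
    """A random ordering of all (x, y) pixel positions, fixed for the whole transition."""
    coords = [(x, y) for y in range(height) for x in range(width)]
    random.Random(seed).shuffle(coords)
    return coords


def dither_dissolve_frame(frame_a, frame_b, alpha: float, order: List[Tuple[int, int]]):
    """Type II dissolve: the first alpha * N positions in `order` show video B, the rest video A."""
    out = [list(row) for row in frame_a]
    n_switched = int(alpha * len(order))
    for x, y in order[:n_switched]:
        out[y][x] = frame_b[y][x]  # abrupt switch, no blending
    return out


a = [["A"] * 4 for _ in range(3)]
b = [["B"] * 4 for _ in range(3)]
order = dither_order(4, 3)
half = dither_dissolve_frame(a, b, 0.5, order)
print(sum(row.count("B") for row in half))  # 6
```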

Obviously, fade-in and fade-out are special types of a Type I dissolve, in which video A or B is black (or white). Wipes are special forms of a Type II dissolve, in which changing pixels follow a particular geometric pattern.

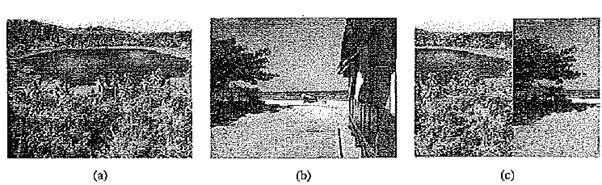

(a) VideoL; (b) VideoR; (c) VideoL sliding into place and pushing out VideoR

Despite the fact that many digital video editors include a preset number of video transitions, we may also be interested in building our own. For example, suppose we wish to build a special type of wipe that slides one video out while another video slides in to replace it. The usual type of wipe does not do this. Instead, each video stays in place, and the transition line moves across each "stationary" video, so that the left part of the viewport shows pixels from the left video, and the right part shows pixels from the right video (for a wipe moving horizontally from left to right).

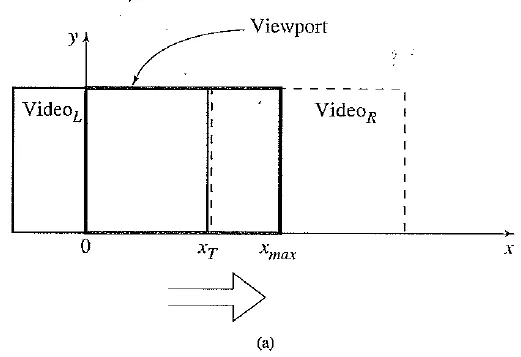

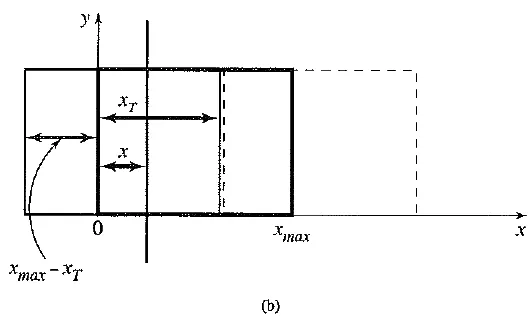

Suppose we would like to have each video frame not held in place, but instead move progressively farther into (out of) the viewport: we wish to slide VideoL in from the left and push out VideoR. The above figure shows this process. Each of VideoL and VideoR has its own values of R, G, and B. Note that R is a function of position in the frame, (x, y), as well as of time t. Since this is video and not a collection of images of various sizes, each of the two videos has the same maximum extent, $x_{\max}$. (Premiere actually makes all videos the same size, the one chosen in the preset selection, so there is no cause to worry about different sizes.)

As time goes by, the horizontal location $x_T$ of the transition boundary moves across the viewport from $x_T = 0$ at $t = 0$ to $x_T = x_{\max}$ at $t = t_{\max}$. Therefore, for a transition that is linear in time,

$$x_T = \frac{t}{t_{\max}}\, x_{\max}$$

So for any time t, the situation is as shown in the following figure. The viewport, in which we shall be writing pixels, has its own coordinate system, with the x-axis from 0 to $x_{\max}$. For each x (and y) we must determine (a) from which video we take RGB values, and (b) from what x position in the unmoving video we take pixel values, that is, from what position x from the left video, say, in its own coordinate system. It is a video, so of course the image in the left video frame is changing in time.

Let's assume that dependence on y is implicit. In any event, we use the same y as in the source video. Then for the red channel (and similarly for the green and blue), $R = R(x, t)$. Suppose we have determined that pixels should come from VideoL. Then the x-position $x_L$ in the unmoving video should be

$$x_L = x + (x_{\max} - x_T)$$

where $x$ is the position we are trying to fill in the viewport, $x_T$ is the position in the viewport that the transition boundary has reached, and $x_{\max}$ is the maximum pixel position for any frame.

(a) Geometry of VideoL pushing out VideoR; (b) Calculating position in VideoL from where pixels are copied to the viewport

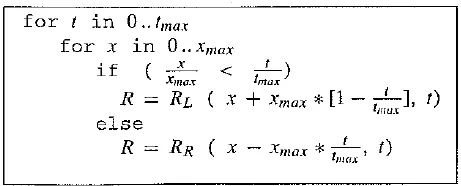

Substituting the fact that the transition moves linearly with time, $x_T = x_{\max}(t/t_{\max})$, we can set up a pseudocode solution as in the following figure. In this figure, the slight change in formula if pixels are actually coming from VideoR instead of from VideoL is easy to derive.
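The following Python function sketches the per-pixel logic for one output frame; it is an illustration under stated assumptions rather than the pseudocode in the figure. It assumes frames are lists of rows of equal width, takes $x_{\max}$ to be the frame width W in pixels (so column indices run from 0 to W − 1), and rounds $x_T$ to a whole column. For pixels right of the boundary it uses $x_R = x - x_T$, the VideoR counterpart of the formula for $x_L$.

```python
from typing import List, Sequence, TypeVar

P = TypeVar("P")


def slide_transition_frame(frame_l: Sequence[Sequence[P]], frame_r: Sequence[Sequence[P]],
                           t: float, t_max: float) -> List[List[P]]:
    """One viewport frame of VideoL sliding in from the left and pushing out VideoR.

    frame_l and frame_r are the frames of the two videos at time t (same size).
    x_max is taken to be the frame width W, so column indices run from 0 to W - 1.
    """
    width = len(frame_l[0])
    # Transition boundary x_T = (t / t_max) * x_max, rounded to a whole column.
    x_t = round(width * t / t_max)
    out = []
    for row_l, row_r in zip(frame_l, frame_r):  # y is implicit: same row as the source
        new_row = []
        for x in range(width):
            if x < x_t:
                # VideoL: x_L = x + (x_max - x_T), so its right edge sits at the boundary.
                new_row.append(row_l[x + (width - x_t)])
            else:
                # VideoR: x_R = x - x_T, so its left edge sits at the boundary.
                new_row.append(row_r[x - x_t])
        out.append(new_row)
    return out


left = [["L0", "L1", "L2", "L3"]]
right = [["R0", "R1", "R2", "R3"]]
for t in range(5):  # t_max = 4; real videos would supply new frames at each t
    print(slide_transition_frame(left, right, t, 4)[0])
# ['R0', 'R1', 'R2', 'R3']
# ['L3', 'R0', 'R1', 'R2']
# ['L2', 'L3', 'R0', 'R1']
# ['L1', 'L2', 'L3', 'R0']
# ['L0', 'L1', 'L2', 'L3']
```

Unlike the wipe, the content of each video shifts with the boundary: at t = 1 the viewport shows the rightmost column of VideoL, not its leftmost.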

Some Technical Design Issues Technical parameters that affect the design and delivery of multimedia applications include computer platform, video format and resolution, memory and disk space, and delivery methods.

· Computer Platform. Usually we deal with machines that are either some type of UNIX box (such as a Sun) or else a PC or Macintosh. While a good deal of software is ostensibly "portable", much cross-platform software relies on runtime modules that may not work well across systems.

Pseudocode for slide video transition

· Video Format and Resolution. The most popular video formats are NTSC, PAL, and SECAM. They are not compatible, so conversion is required to play a video in a different format.

The graphics card, which displays pixels on the screen, is sometimes referred to as a "video card". In fact, some cards are able to perform "frame grabbing", to change analog signals to digital for video. This kind of card is called a "video capture card".

The graphics card's capacity depends on its price. An old standard for the capacity of a card is S-VGA, which allows for a resolution of 1,280 x 1,024 pixels in a displayed image and as many as 65,536 colors using 16-bit pixels or 16.7 million colors using 24-bit pixels. Nowadays, graphics cards that support higher resolution, such as 1,600 x 1,200, and 32-bit pixels or more are common.

· Memory and Disk Space Requirement. Rapid progress in hardware alleviates the problem, but multimedia software is generally greedy. Nowadays, at least 128 megabytes of RAM and 20 gigabytes of hard disk space should be available for acceptable performance and storage for multimedia programs.

· Delivery Methods. Once coding and all other work is finished, how shall we present our clever work? Since we have presumably purchased a large disk, so that performance is good and storage is not an issue, we could simply bring along our machine and show the work that way. However, we likely wish to distribute the work as a product. Presently, rewritable DVD drives are not the norm, and CD-ROMs may lack sufficient storage capacity to hold the presentation. Also, access time for CD-ROM drives is longer than for hard disk drives.

Electronic delivery is an option, but this depends on network bandwidth at the user side (and at our server). A streaming option may be available, depending on the presentation.

No perfect mechanism currently exists to distribute large multimedia projects. Nevertheless, using such tools as PowerPoint or Director, it is possible to create acceptable presentations that fit on a single CD-ROM.
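The figures in the items above follow from simple arithmetic, which also shows why memory, disk space, and delivery are real constraints. The Python sketch below checks the color counts for 16-bit and 24-bit pixels and the memory for one uncompressed frame, then estimates how much raw video fits on a CD-ROM. The 640 x 480, 24-bit, 30 frames/s video and the 650 MB CD-ROM capacity are assumptions chosen for illustration; actual productions compress their video.

```python
def colors_for_depth(bits_per_pixel: int) -> int:
    """Number of distinct colors a pixel of this depth can represent."""
    return 2 ** bits_per_pixel


def frame_bytes(width: int, height: int, bits_per_pixel: int) -> int:
    """Memory for one uncompressed frame (assumes the total is a whole number of bytes)."""
    return width * height * bits_per_pixel // 8


def video_bytes_per_second(width: int, height: int, bits_per_pixel: int, fps: int) -> int:
    """Data rate of uncompressed video."""
    return frame_bytes(width, height, bits_per_pixel) * fps


print(colors_for_depth(16), colors_for_depth(24))  # 65536 16777216
print(frame_bytes(1280, 1024, 24))                 # 3932160
print(frame_bytes(1600, 1200, 32))                 # 7680000

# Assumed example: 640 x 480, 24-bit, 30 frames/s, and a 650 MB CD-ROM.
rate = video_bytes_per_second(640, 480, 24, 30)    # 27648000 bytes per second
cd_bytes = 650 * 1024 * 1024
print(round(cd_bytes / rate, 1))                   # 24.7 seconds of raw video
```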

Automatic Authoring

Thus far, we have considered notions developed for authoring new multimedia. Nevertheless, a tremendous amount of legacy multimedia documents exists, and researchers have been interested in methods to facilitate automatic authoring. By this term is meant either an advanced helper for creating new multimedia presentations or a mechanism to facilitate automatic creation of more useful multimedia documents from existing sources.

Hypermedia Documents: Hypermedia documents are computer-based documents that contain text, graphics, audio, and video on pages that are connected by navigational links. The navigational links, often referred to as hyperlinks, permit nonsequential or nonlinear traversal of the document by readers. A well-known source of hypermedia documents is the so-called World Wide Web (WWW), or simply the Web.

Hypermedia documents allow multiple views of information and efficient, nonlinear exploration of it, neither of which is possible with traditional books. On the other hand, the absence of an obvious linear structure and of a physical medium makes it very easy to get lost in hyperspace.

Hypermedia documents may be printed. Nevertheless, the hyperlinking functionality is typically lost in the printed copy. Most hypermedia documents, especially those on the Web, are intended for viewing on the screen and designed to exploit the hyperlinking functionality. As a result, readability also suffers with the loss of the hyperlinks. For instance, removing the hyperlink to the definition of an unfamiliar term may make a description unclear to readers.

Exteriorization versus Linearization

Now, using Microsoft Word, say, it is trivial to create a hypertext version of one's document, as Word simply follows the layout already set up in chapters, headings, and so on. But problems arise when we wish to extract semantic content and find links and anchors, even considering just text and not images.

Once a dataset becomes large, we should employ database methods. The issues become focused on scalability (to a large dataset), maintainability, addition of material, and reusability. The database information must be set up in such a way that the "publishing" stage, presentation to the user, can be carried out just-in-time, presenting information in a user-defined view from an intermediate information structure.

Semiautomatic Migration of Hypertext The structure of hyperlinks for text information is simple: "nodes" represent semantic information and are anchors for links to other pages.

For text, the first step for migrating paper-based information to hypertext is to automatically convert the format used to HTML. Then, sections and chapters can be placed in a database. Simple versions of data mining techniques, such as word stemming, can easily be used to parse titles and captions for keywords, for example, by frequency counting. Keywords found can be added to the database being built. Then a helper program can automatically generate additional hyperlinks between related concepts.

A semiautomatic version of such a program is most likely to be successful, making suggestions that can be accepted or rejected and manually added to. A database management system can maintain the integrity of links when new nodes are inserted. For the publishing stage, since it may be impractical to recreate the underlying information structures, it is best to delay imposing a viewpoint on the data as long as possible.
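The keyword-and-link step can be sketched in Python. This is a minimal illustration, assuming each section is represented by its title and captions as plain text; it uses a deliberately crude suffix-stripping function in place of a real stemmer, counts term frequencies, and treats any keyword shared by two sections as grounds for suggesting a link. The stopword list, the `min_count` threshold, and all function names are hypothetical. In keeping with the semiautomatic approach, the output is a list of suggestions for an author to accept or reject, not links inserted automatically.

```python
import re
from collections import Counter
from itertools import combinations
from typing import Dict, List, Set, Tuple

STOPWORDS = {"a", "an", "and", "the", "of", "to", "in", "for", "on", "with", "is"}


def crude_stem(word: str) -> str:
    """Very rough suffix stripping; a stand-in for a real stemmer such as Porter's."""
    if word.endswith("ing") and len(word) > 5:
        return word[:-3]
    if word.endswith("s") and not word.endswith("ss") and len(word) > 3:
        return word[:-1]
    return word


def keywords(text: str, min_count: int = 2) -> Set[str]:
    """Stemmed, non-stopword terms that occur at least `min_count` times."""
    words = [crude_stem(w) for w in re.findall(r"[a-z]+", text.lower()) if w not in STOPWORDS]
    return {w for w, n in Counter(words).items() if n >= min_count}


def suggest_links(sections: Dict[str, str], min_count: int = 2) -> List[Tuple[str, str, List[str]]]:
    """Propose a hyperlink between every pair of sections that share a keyword."""
    kw = {name: keywords(text, min_count) for name, text in sections.items()}
    suggestions = []
    for a, b in combinations(sorted(sections), 2):
        shared = sorted(kw[a] & kw[b])
        if shared:
            suggestions.append((a, b, shared))
    return suggestions


sections = {
    "ch3": "Color models in images. Color spaces and images.",
    "ch4": "Color in video. Video color.",
    "ch7": "Lossless compression algorithms. Compression.",
}
print(suggest_links(sections))  # [('ch3', 'ch4', ['color'])]
```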

Hyperimages Matters are not nearly so straightforward when considering image or other multimedia data. To treat an image in the same way as text, we would wish to consider an image to be a node that contains objects and other anchors, for which we need to determine image entities and rules. What we desire is an automated method to help us produce true hypermedia.

It is possible to manually delineate syntactic image elements by masking image areas. These can be tagged with text, so that previous text-based methods can be brought into play. Naturally, we are also interested in what tools from database systems, data mining, artificial intelligence, and so on can be brought to bear to assist production of full-blown multimedia systems, not just hypermedia systems. The above discussion shows that we are indeed at the start of such work.
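The manual masking and tagging step can be sketched as an image map. This Python illustration simplifies each mask to an axis-aligned rectangle (real masks can have arbitrary shapes) and attaches a text tag and a link target to it; the class and function names and the link targets are hypothetical. Once regions carry text tags, an ordinary text lookup can find them, which is how the text-based methods come into play.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class TaggedRegion:
    """A manually masked image area, tagged with text and acting as a link anchor."""
    tag: str
    x0: int
    y0: int
    x1: int      # exclusive
    y1: int      # exclusive
    target: str  # hypothetical link destination

    def contains(self, x: int, y: int) -> bool:
        return self.x0 <= x < self.x1 and self.y0 <= y < self.y1


def anchor_at(regions: List[TaggedRegion], x: int, y: int) -> Optional[TaggedRegion]:
    """The region (anchor) under a mouse click, if any; earlier regions win on overlap."""
    for region in regions:
        if region.contains(x, y):
            return region
    return None


def targets_for_word(regions: List[TaggedRegion], word: str) -> List[str]:
    """Text-based lookup: link targets of regions whose tag contains `word`."""
    return [r.target for r in regions if word.lower() in r.tag.lower().split()]


photo = [
    TaggedRegion("giraffe", 0, 0, 120, 200, "animals.html#giraffe"),
    TaggedRegion("acacia tree", 120, 40, 300, 200, "plants.html#acacia"),
]
hit = anchor_at(photo, 150, 100)
print(hit.target if hit else None)      # plants.html#acacia
print(targets_for_word(photo, "Tree"))  # ['plants.html#acacia']
```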