Continuous communication, unlike discrete communication, deals with signals that have potentially an infinite number of different values. Continuous communication is closely related to discrete communication (in the sense that any continuous signal can be approximated by a discrete signal), although the relationship is sometimes obscured by the more sophisticated mathematics involved.

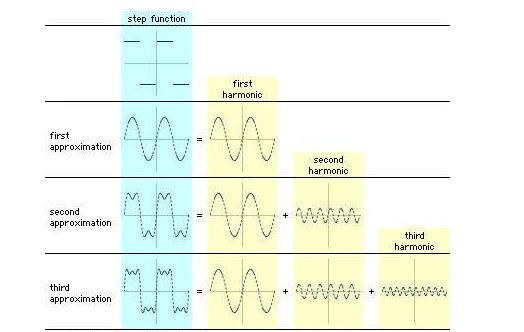

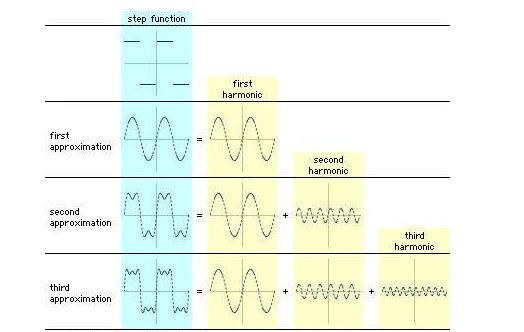

The most important mathematical tool in the analysis of continuous signals is Fourier analysis, which can be used to model a signal as a sum of simpler sine waves. The figure indicates how the first few stages might appear. It shows a square wave, which has points of discontinuity (“jumps”), being modeled by a sum of sine waves. The curves to the right of the square wave show what are called the harmonics of the square wave. Above the line of harmonics are curves obtained by the addition of each successive harmonic; these curves can be seen to resemble the square wave more closely with each addition. If the entire infinite set of harmonics were added together, the square wave would be reconstructed almost exactly. Fourier analysis is useful because most communication circuits are linear, which essentially means that the whole is equal to the sum of the parts: a circuit’s response to a sum of signals is the sum of its responses to each signal separately. Thus, a signal can be studied by separating, or decomposing, it into its simpler harmonics.

An example of Fourier analysis. Using Fourier analysis, a step function is modeled, or decomposed, as the sum of various sine functions. This striking example demonstrates how even an obviously discontinuous and piecewise linear graph (a step function) can be reproduced to any desired level of accuracy by combining enough sine functions, each of which is continuous and nonlinear.
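The decomposition of a square wave into sine harmonics can be sketched numerically. The following Python snippet is a minimal illustration, assuming a square wave of amplitude 1 and period 2π, whose Fourier series contains only odd sine harmonics with coefficients 4/(πn). The function names are hypothetical, chosen for this sketch.

```python
import math

def square_wave(t):
    """Ideal square wave of period 2*pi: +1 on (0, pi), -1 on (pi, 2*pi)."""
    return 1.0 if math.sin(t) >= 0 else -1.0

def partial_sum(t, n_harmonics):
    """Sum of the first n_harmonics odd sine harmonics of the square wave."""
    total = 0.0
    for k in range(1, n_harmonics + 1):
        n = 2 * k - 1  # only odd harmonics appear: 1, 3, 5, ...
        total += math.sin(n * t) / n
    return 4.0 / math.pi * total

def rms_error(n_harmonics, samples=1000):
    """Root-mean-square gap between the square wave and its partial sum,
    estimated at the midpoints of `samples` equal slices of one period."""
    step = 2 * math.pi / samples
    total = 0.0
    for i in range(samples):
        t = (i + 0.5) * step
        diff = square_wave(t) - partial_sum(t, n_harmonics)
        total += diff * diff
    return math.sqrt(total / samples)

# The error shrinks as more harmonics are added.
for n in (1, 3, 10, 50):
    print(n, round(rms_error(n), 4))
```

The error keeps falling as harmonics are added, although the partial sums continue to overshoot close to each jump, which is why the reconstruction is described as almost exact rather than exact.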

A signal is said to be band-limited or bandwidth-limited if it can be represented by a finite number of harmonics. Engineers limit the bandwidth of signals to enable multiple signals to share the same channel with minimal interference. A key result that pertains to bandwidth-limited signals is Nyquist’s sampling theorem, which states that a signal of bandwidth B can be reconstructed from 2B samples taken every second. In 1924, Harry Nyquist derived the following formula for the maximum data rate that can be achieved in a noiseless channel:

Maximum Data Rate = 2B log₂ V bits per second,

where B is the bandwidth of the channel (in hertz) and V is the number of discrete signal levels used in the channel. For example, to send only zeros and ones requires two signal levels. It is possible to envision any number of signal levels, but in practice the difference between signal levels must get smaller, for a fixed bandwidth, as the number of levels increases. And as the differences between signal levels decrease, the effect of noise in the channel becomes more pronounced.
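As a small worked illustration of Nyquist’s formula, the Python sketch below evaluates the maximum data rate for a few numbers of signal levels. The 3,000 Hz bandwidth is an assumed value chosen only for the example, and the function name is hypothetical.

```python
import math

def nyquist_max_rate(bandwidth_hz, levels):
    """Maximum data rate (bits/s) of a noiseless channel: 2 * B * log2(V)."""
    if bandwidth_hz <= 0 or levels < 2:
        raise ValueError("need positive bandwidth and at least two signal levels")
    return 2 * bandwidth_hz * math.log2(levels)

# Assumed 3,000 Hz channel (an illustrative value, not taken from the text).
for levels in (2, 4, 16):
    print(levels, nyquist_max_rate(3000, levels))
```

Each doubling of the number of levels adds the same fixed amount, 2B bits per second, to the rate, so gains from extra levels become relatively smaller even before noise is considered.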

Every channel has some sort of noise, which can be thought of as a random signal that contends with the message signal. If the noise is too great, it can obscure the message. Part of Shannon’s seminal contribution to information theory was showing how noise affects the message capacity of a channel. In particular, Shannon derived the following formula:

Maximum Data Rate = B log₂(1 + S/N) bits per second,

where B is the bandwidth of the channel (in hertz), and the quantity S/N is the signal-to-noise ratio, which is often given in decibels (dB). The formula itself takes S/N as a plain power ratio, so a value quoted in decibels must be converted first; 30 dB, for instance, corresponds to S/N = 1,000. Observe that the larger the signal-to-noise ratio, the greater the data rate. Another point worth observing, though, is that the log₂ function grows quite slowly. For example, if S/N is 1,000, then log₂ 1,001 ≈ 9.97. If S/N is doubled to 2,000, then log₂ 2,001 ≈ 10.97. Thus, doubling S/N produces only a 10 percent gain in the maximum data rate. Doubling S/N again would produce an even smaller percentage gain.
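The arithmetic in this example can be checked with a short Python sketch. It assumes a unit bandwidth of 1 Hz, so the result is the capacity per hertz, and it includes a helper for converting a decibel figure to the plain power ratio that the formula expects. The function names are hypothetical.

```python
import math

def shannon_capacity(bandwidth_hz, snr):
    """Maximum data rate (bits/s): B * log2(1 + S/N), with S/N as a plain power ratio."""
    return bandwidth_hz * math.log2(1 + snr)

def db_to_ratio(snr_db):
    """Convert a signal-to-noise ratio in decibels to a plain power ratio."""
    return 10 ** (snr_db / 10)

bandwidth_hz = 1.0  # assumed unit bandwidth, so rates are per hertz
c1 = shannon_capacity(bandwidth_hz, 1000)
c2 = shannon_capacity(bandwidth_hz, 2000)
c4 = shannon_capacity(bandwidth_hz, 4000)

print(round(c1, 2), round(c2, 2))  # 9.97 10.97
# Percentage gain from each successive doubling of S/N.
print(round(100 * (c2 / c1 - 1), 1), round(100 * (c4 / c2 - 1), 1))
```

Each doubling of S/N adds roughly one bit per second per hertz, so the percentage gain keeps shrinking as the base rate grows.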