What Is LiDAR and How Can I Use It?

LiDAR is the practice of using light or non-visible (e.g., infrared) electromagnetic radiation to detect and measure distance to objects. LiDAR stands for LIght Detection And Ranging.

You've probably heard of LiDAR before, but you may not really know much about it. My goal in this article is to explain on a conceptual level how LiDAR works.

Applications of LiDAR

LiDAR shows up in documentaries and news articles fairly often because it plays a role in so many scientific fields.

LiDAR is used in everything from agriculture to meteorology, biology to robotics, and law enforcement to solar photovoltaics deployment. You might see LiDAR referenced in reports about astronomy and spaceflight, or you might hear about its use in mining operations.

LiDAR can image small things like historic relics for archaeology or skeletons for biology. On the other end of the spectrum, LiDAR can also image very large things like landscapes for agriculture and geology.

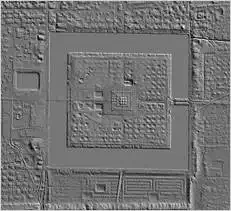

Aerial LiDAR image of Angkor Wat. Image courtesy of Ancient Explorers.

Then you have LiDAR systems that image moving objects or that may themselves be moving. These non-static systems may become the most common form of LiDAR, as they are used for machine vision in military systems, survey aircraft, and prototype autonomous cars.

Yet other forms of LiDAR are not used to image solid surfaces at all: NASA uses LiDAR in atmospheric research. Still other LiDAR systems are designed to operate underwater and image submerged surfaces.

Clearly, this technology is quite flexible.

Similarities to SONAR and RADAR

So if LiDAR is LIght Detection And Ranging, is it similar to SONAR (SOund Navigation And Ranging) and RADAR (RAdio Detection And Ranging)? Yes, sort of. To understand how the three are alike, let us start by talking about how SONAR (echolocation) and RADAR work.

SONAR emits a powerful pulsed sound wave of known frequency. Then, by timing how long it takes the pulse to return, you can measure a distance. Doing this repeatedly, in different directions, builds up a good picture of your surroundings.

SONAR imaging used to locate a sunken vessel at sea. Image courtesy of the NOAA Office of Exploration and Research.

The key element of SONAR is the sound wave, but there are many different types of waves. If we use the same principle and change the type of wave from a sound wave to an electromagnetic wave (radio), we get RADAR (RAdio Detection And Ranging).

There are many different types of RADAR that use different frequencies of the EM spectrum. Light is just a special range of wavelengths detectable by the human eye, which is one of the things that differentiates LiDAR from RADAR. There are other differences between the two, but I'm going to focus on spatial resolution as the key difference.

RADAR uses a large wavefront and long wavelengths, giving comparatively poor spatial resolution. By comparison, LiDAR uses lasers (a tight wavefront) and much shorter wavelengths. The wavelength directly dictates the resolving power of an imaging system: shorter wavelengths (corresponding to higher frequencies) increase the resolving power.
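To put rough numbers on that, here is a minimal sketch using the Rayleigh criterion, which estimates the smallest angle a circular aperture can resolve as 1.22 times the wavelength divided by the aperture diameter. The wavelengths and the shared aperture size below are illustrative assumptions of mine, not figures for any particular RADAR or LiDAR unit, and real systems are limited by many other factors too.

```python
def rayleigh_angle_rad(wavelength_m, aperture_m):
    """Smallest resolvable angle (radians) for a circular aperture (Rayleigh criterion)."""
    return 1.22 * wavelength_m / aperture_m

# Illustrative values only (assumptions, not taken from a specific system):
RADAR_WAVELENGTH_M = 0.03     # 3 cm, roughly a 10 GHz radio wave
LIDAR_WAVELENGTH_M = 905e-9   # 905 nm, a near-infrared laser wavelength
APERTURE_M = 1.0              # same aperture for both, so only wavelength differs

radar_angle = rayleigh_angle_rad(RADAR_WAVELENGTH_M, APERTURE_M)
lidar_angle = rayleigh_angle_rad(LIDAR_WAVELENGTH_M, APERTURE_M)

print(f"RADAR: {radar_angle:.3e} rad")
print(f"LiDAR: {lidar_angle:.3e} rad")
print(f"Ratio: about {radar_angle / lidar_angle:,.0f}x finer for the shorter wavelength")
```

With the aperture held equal, the ratio of the two angles is simply the ratio of the two wavelengths, which is the point of the comparison.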

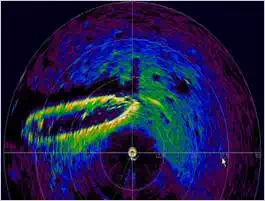

Weather RADAR imaging picking up the movement of weather systems and migrating birds. Image courtesy of NASA.

And if your wavefront is huge, you have to wonder: what distance are you measuring?

Imagine trying to use a flashlight range finder for your next home improvement project. Is that flashlight measuring the distance to a nearby beam or to the wall behind it? This is too imprecise to be practical. Laser range finders, however, are much more accurate because their tight beam lands on a single small spot.

Measuring with Pixels

LiDAR uses a laser to measure distance, but so far that is no different from a laser range finder: you point it at something and you measure a distance. But what if that one distance measurement were interpreted as a single pixel? You could then take numerous distance measurements and lay them out in a grid; the result would be an image that conveys depth, analogous to a black-and-white photograph in which the pixels convey light intensity. Sounds pretty cool, and that could be pretty useful, right?

But we still have several questions to answer before we can fully understand how this system works.

How many pixels do we need?

If we compare LiDAR to early digital cameras, we would need one or two megapixels (that is, one to two million pixels). So let's say we need two million lasers. Then we would need to measure the distance indicated by every one of those lasers, so another two million sensors, plus circuits to do the calculations.

Maybe a whole lot of lasers at once is not the best method. What if, instead of trying to take a whole "picture" at once, we did what scanners do? Namely, could we take part of the picture and then move the pixel and take the picture again? This sounds like a much simpler device that could be implemented with only one laser and one detector. However, it also means that we cannot take an instant "picture" the way we can with a camera.

How do we move our pixel?

In a scanner, the physical pixel is moved along the image. But that would not be practical in many situations, so we probably need a different method.

With LiDAR, we are trying to take a "picture in depth", or you could say we are trying to take a "3D model".

If you were paying attention in your math classes, you may recall the Cartesian coordinate system (X, Y, Z) and the spherical coordinate system (r, theta, phi). I bring this up because, if we assume that the LiDAR is at the origin in a spherical system, then we just need to know the horizontal angle (theta), the vertical angle (phi), and the distance (r) to build our 3D model.

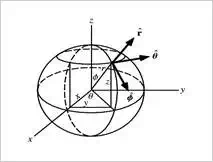

Representation of a spherical coordinate system. Image courtesy of Wolfram MathWorld.
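As a rough illustration of how one (r, theta, phi) reading becomes a point in a 3D model, here is a small sketch. I am assuming theta is measured in the horizontal plane from the +X axis and phi is the elevation above that plane; if your vertical angle is instead measured down from the vertical axis, the sine and cosine of phi swap roles. The function name and the sample readings are made up for the example.

```python
import math

def spherical_to_cartesian(r, theta, phi):
    """Convert one LiDAR reading to (x, y, z).

    r     -- measured distance
    theta -- horizontal angle in radians, from the +X axis (assumed convention)
    phi   -- vertical angle in radians, as elevation above the XY plane (assumed)
    """
    horizontal = r * math.cos(phi)       # length of the projection onto the XY plane
    x = horizontal * math.cos(theta)
    y = horizontal * math.sin(theta)
    z = r * math.sin(phi)
    return (x, y, z)

# A few made-up readings: (distance in meters, theta, phi)
readings = [
    (2.0, 0.0, 0.0),
    (2.0, math.pi / 2, 0.0),
    (3.0, 0.0, math.pi / 6),
]
point_cloud = [spherical_to_cartesian(r, t, p) for r, t, p in readings]
for point in point_cloud:
    print(tuple(round(c, 3) for c in point))
```

Every reading from the scanner goes through the same conversion, and the resulting list of points is the raw material for the 3D model.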

With only one source, the 3D model would be a single surface, because the laser only sees the side of an object that faces it. But if we combine multiple surfaces mapped together, we can get a full 3D model. So we need one laser and one sensor to make our image using the spherical coordinate system.

Some LiDAR systems are mobile and use GPS or other positioning systems to map all the readings together into a single image. These mobile systems still use the same principles, but they may be applied in different ways.

How do we actually change our laser's angles quickly?

We have two million "pixels" to measure. How can we possibly adjust our laser to measure them all?

There are only 86,400 seconds in a day. At one millisecond per pixel, two million pixels would take 2,000 seconds, or more than half an hour, for a single image. So each measure-and-adjust step needs to take far less than a thousandth of a second to be practical.
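The arithmetic behind that time budget is easy to check. This is only a back-of-the-envelope sketch: the two-million-pixel figure comes from the discussion above, while the per-pixel times are values I picked for illustration.

```python
SECONDS_PER_DAY = 86_400
PIXEL_COUNT = 2_000_000   # the two-million-pixel target from the text

def scan_time_seconds(pixel_count, seconds_per_pixel):
    """Total time to measure every pixel one after another."""
    return pixel_count * seconds_per_pixel

# Per-pixel times chosen for illustration only
for label, seconds_per_pixel in [("1 ms", 1e-3), ("10 us", 1e-5), ("1 us", 1e-6)]:
    total = scan_time_seconds(PIXEL_COUNT, seconds_per_pixel)
    print(f"{label:>5} per pixel -> {total:8.1f} s per image, "
          f"{SECONDS_PER_DAY / total:10.1f} images per day")
```

The takeaway is that the per-pixel time has to drop to microseconds before a single-laser scanner can produce an image in a few seconds.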

So how do LiDAR systems adjust the pixel so fast? The key is that they never stop adjusting it: a mirror or prism spins at a very precise and well-known speed. The laser is reflected off that mirror or prism so that its direction is constantly changing, but at a known rate. This makes it very fast and easy to adjust one of our angles, either phi or theta, to scan across our image. For scanning the other angle, we could use a much slower system such as a precision stepper motor.
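Because the rotation rate is known, the scan angle at any instant can be computed from a timestamp instead of being measured. Here is a minimal sketch of that idea. The function and parameter names are hypothetical, and I am assuming the beam sweeps one full turn per mirror revolution by default; the real relationship depends on the optics (a flat mirror, for instance, deflects the beam by twice its own rotation), which is what the `beam_turns_per_mirror_turn` factor stands in for.

```python
import math

def scan_angle_rad(t_seconds, mirror_rev_per_s, beam_turns_per_mirror_turn=1.0):
    """Beam angle in [0, 2*pi) at time t for a mirror spinning at a constant, known rate."""
    turns = mirror_rev_per_s * t_seconds * beam_turns_per_mirror_turn
    return (2.0 * math.pi * turns) % (2.0 * math.pi)

# Example: a mirror at 10 revolutions per second (an assumed value),
# with a distance sample taken every 5 milliseconds.
MIRROR_REV_PER_S = 10.0
for i in range(5):
    t = i * 0.005
    angle_deg = math.degrees(scan_angle_rad(t, MIRROR_REV_PER_S))
    print(f"t = {t * 1000:4.1f} ms -> angle = {angle_deg:6.1f} deg")
```

Each distance sample then only needs a precise timestamp to be assigned its fast-axis angle, while the slow axis can be read directly from the stepper position.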

But we still have one last major problem to solve.

How do we measure the distance?

There are multiple methods of measuring distance with a laser, depending on what the system is trying to achieve. And a single system may be using multiple methods simultaneously to increase accuracy. All methods require very precise equipment.

The simplest to understand is time of flight (ToF), which is also the method most often listed for measuring distance with a laser: you time how long a pulse takes to reach the target and come back. For 1 mm resolution, you need to resolve the time it takes light to travel 2 mm (1 mm out and 1 mm back), which works out to about 6.67 picoseconds. That requires some very precise equipment to measure, but it can be done for a price.
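The 6.67 picosecond figure is straightforward to reproduce. The sketch below assumes the pulse travels at the vacuum speed of light, which is close enough for air at this level of detail; the function names are my own.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0  # vacuum value; air is very slightly slower

def round_trip_time_s(distance_m):
    """Time for a pulse to reach a target at distance_m and return."""
    return 2.0 * distance_m / SPEED_OF_LIGHT_M_PER_S

def distance_from_round_trip_m(elapsed_s):
    """Distance to the target given the measured round-trip time."""
    return SPEED_OF_LIGHT_M_PER_S * elapsed_s / 2.0

# Timing resolution needed to tell apart targets 1 mm apart
resolution_s = round_trip_time_s(0.001)
print(f"1 mm of range corresponds to {resolution_s * 1e12:.2f} ps of round-trip time")

# A target 10 m away
print(f"10 m round trip: {round_trip_time_s(10.0) * 1e9:.2f} ns")
```

Note the factor of two in both directions: the timer sees the trip out and the trip back, so the distance is half of what the elapsed time alone would suggest.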

Another method, triangulation, uses a second spinning mirror to redirect the receiver signal; by measuring the change in the angle, you can get a distance. Triangulation may not be applicable for long distances, and the use of the rotating mirror may complicate the system.
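To see why an angle can stand in for a distance, here is a generic triangulation sketch based on the law of sines. It is not a model of any specific mirror arrangement: I am assuming the emitter and detector sit a known baseline apart and that each angle is measured from that baseline. All names and numbers are hypothetical.

```python
import math

def range_by_triangulation(baseline_m, emitter_angle_rad, detector_angle_rad):
    """Distance from the emitter to the laser spot, via the law of sines.

    Both angles are measured from the baseline joining emitter and detector.
    """
    apex = math.pi - emitter_angle_rad - detector_angle_rad  # angle at the target
    if apex <= 0:
        raise ValueError("lines of sight do not converge")
    return baseline_m * math.sin(detector_angle_rad) / math.sin(apex)

# Assumed setup: 10 cm baseline, laser fired perpendicular to the baseline.
BASELINE_M = 0.10
for true_range_m in (1.0, 10.0, 100.0):
    detector_angle = math.atan(true_range_m / BASELINE_M)
    recovered = range_by_triangulation(BASELINE_M, math.pi / 2, detector_angle)
    print(f"range {true_range_m:6.1f} m -> detector angle "
          f"{math.degrees(detector_angle):8.4f} deg, recovered {recovered:6.1f} m")
```

The printed angles crowd toward 90 degrees as the range grows, so a small error in the measured angle turns into a large error in distance. That is one way to understand why triangulation struggles at long range.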

Finally, by modulating the laser, it is possible to measure a phase shift between the emitted and the returned modulation. Because the modulation is periodic, the phase shift cannot by itself be used to measure the distance. Rather, it produces a list of possible distances that can be used in addition to another method to increase the accuracy.
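Here is a sketch of what that "list of possible distances" looks like and how a coarse estimate from another method can pick the right entry. I am assuming a single sinusoidal modulation frequency, so the phase repeats every half modulation wavelength of range (half because the light travels out and back). The function names, the 10 MHz frequency, and the sample values are all illustrative assumptions.

```python
import math

SPEED_OF_LIGHT_M_PER_S = 299_792_458.0

def candidate_distances(phase_rad, mod_freq_hz, max_range_m):
    """All distances up to max_range_m that would produce the same measured phase."""
    ambiguity_m = SPEED_OF_LIGHT_M_PER_S / (2.0 * mod_freq_hz)  # range per full phase cycle
    first = (phase_rad / (2.0 * math.pi)) * ambiguity_m
    candidates = []
    n = 0
    while first + n * ambiguity_m <= max_range_m:
        candidates.append(first + n * ambiguity_m)
        n += 1
    return candidates

def resolve_with_coarse_estimate(candidates, coarse_estimate_m):
    """Pick the candidate closest to a rough distance from another method (e.g. ToF)."""
    return min(candidates, key=lambda d: abs(d - coarse_estimate_m))

# Assumed example: 10 MHz modulation, measured phase of pi, 50 m maximum range
options = candidate_distances(math.pi, 10e6, 50.0)
print([round(d, 3) for d in options])
print(round(resolve_with_coarse_estimate(options, 23.0), 3))
```

The phase measurement supplies the fine detail within one cycle, and the coarse estimate only has to be good enough to say which cycle the target is in.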

LiDAR rendering of a group of people. Image courtesy of NASA.

The LiDAR Laser

One last thing to talk about: the laser itself. Despite the name, most LiDAR systems employ infrared rather than visible radiation.

Because electromagnetic radiation's interaction with matter is governed by wavelength, some wavelengths work better for certain applications. Someday microwave lasers (masers) or X-ray lasers ("xasers") could be used to build LiDAR systems that can image through a wide variety of materials, greatly enhancing their usefulness.