Memory Management

In a computer, multiple processes may execute at the same time, and every process that executes requires a certain amount of memory. Memory management is one of the tasks handled by the operating system: memory management schemes handle the allocation of memory to different processes. When a process completes execution, its memory is de-allocated and made available to other processes. Additionally, processes that have been allocated memory must not interfere with each other’s memory space, which requires a memory protection and sharing mechanism. We now discuss memory allocation, de-allocation, reallocation of free memory, and memory protection and sharing.

Memory Allocation

In a single-user, single-tasking operating system such as MS-DOS, only one process can execute at a time. When the process terminates, its memory is freed and made available to any other process.

In a multiprogramming system, memory management involves more than allocation and de-allocation: the operating system must also keep track of which processes have been allocated memory and provide memory protection and sharing.

Different schemes can be used to allocate memory to the processes that reside in memory at the same time. These memory allocation schemes are as follows:

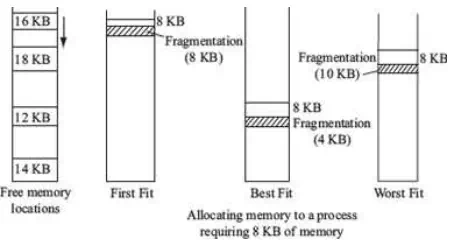

Multiple Partition Allocation—The operating system keeps track of which blocks of memory are free and which are in use. A contiguous block of available memory is called a hole. When a process requires memory, it is allocated a hole large enough for it. As processes release memory, the released blocks are added to the set of holes. To allocate memory, the operating system searches the set of holes to decide which hole to allocate, using one of three hole-allocation strategies: (1) first-fit (allocate the first hole that is big enough for the process), (2) best-fit (allocate the smallest hole that is big enough for the process), and (3) worst-fit (allocate the largest available hole). All three strategies result in fragmentation: as processes are allocated memory and later removed from it, the free memory is broken into small pieces that may each be too small to satisfy a new request, so they lie unused. The paging scheme is used to overcome this fragmentation. The figure shows the allocation of memory to a process requiring 8 KB of memory using the first-fit, best-fit, and worst-fit strategies.
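The three hole-selection strategies can be sketched as follows. This is a minimal illustration, assuming the set of holes is just a list of hole sizes in KB; the hole sizes and the helper names (`first_fit`, `best_fit`, `worst_fit`, `allocate`) are hypothetical and are not the sizes used in the figure.

```python
from typing import Callable, List, Optional

# A strategy takes the list of hole sizes and a request size and returns
# the index of the chosen hole, or None if no hole is large enough.
Strategy = Callable[[List[int], int], Optional[int]]


def first_fit(holes: List[int], request: int) -> Optional[int]:
    """Index of the first hole that is big enough."""
    for i, size in enumerate(holes):
        if size >= request:
            return i
    return None


def best_fit(holes: List[int], request: int) -> Optional[int]:
    """Index of the smallest hole that is big enough."""
    fitting = [i for i, size in enumerate(holes) if size >= request]
    return min(fitting, key=lambda i: holes[i]) if fitting else None


def worst_fit(holes: List[int], request: int) -> Optional[int]:
    """Index of the largest hole, provided it is big enough."""
    fitting = [i for i, size in enumerate(holes) if size >= request]
    return max(fitting, key=lambda i: holes[i]) if fitting else None


def allocate(holes: List[int], request: int, strategy: Strategy) -> Optional[int]:
    """Carve the request out of the chosen hole; the remainder stays free."""
    i = strategy(holes, request)
    if i is not None:
        holes[i] -= request  # the leftover piece remains a (smaller) hole
    return i


if __name__ == "__main__":
    holes_kb = [10, 4, 20, 9, 18]  # hypothetical hole sizes in KB
    for strategy in (first_fit, best_fit, worst_fit):
        print(strategy.__name__, "->", strategy(holes_kb, 8))
```

With these hypothetical holes and an 8 KB request, first-fit picks the 10 KB hole (index 0), best-fit picks the 9 KB hole (index 3) and leaves a 1 KB fragment, and worst-fit picks the 20 KB hole (index 2) and leaves 12 KB free. Those leftover pieces are the fragments described above.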

Multiple partition memory allocation

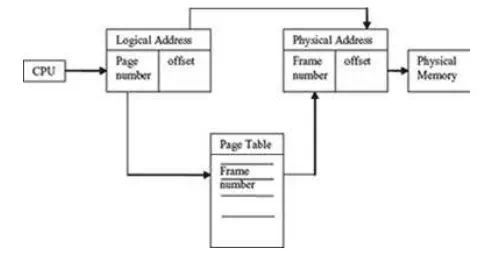

Paging—In paging, physical memory is divided into fixed-size blocks called frames; these frames are the primary memory allocated to processes. Logical memory is divided into blocks of the same size called pages. Page sizes are powers of two, generally ranging from 1 KB to 8 KB. When a process is executed, its pages are loaded into available frames.

An address generated by the CPU has two parts: a page number and a page offset. The operating system maintains a page table that maps each page number to a frame number. The page number is used as an index into the page table to obtain the frame number. The page offset is then added to the base address of that frame (frame number × page size) to get the physical memory address.
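The translation steps above can be sketched in a few lines. This assumes a 1 KB page size and a hypothetical page table stored as a dictionary from page number to frame number; real hardware performs this lookup in the memory management unit, not in software.

```python
from typing import Dict

PAGE_SIZE = 1024  # assumed page size: 1 KB (a power of two)

# Hypothetical page table for one process: page number -> frame number.
page_table: Dict[int, int] = {0: 5, 1: 2, 2: 7}


def translate(logical_address: int, table: Dict[int, int]) -> int:
    """Translate a logical address into a physical address."""
    page_number, offset = divmod(logical_address, PAGE_SIZE)
    # With PAGE_SIZE = 1024 this is the same as
    # logical_address >> 10 and logical_address & (PAGE_SIZE - 1).
    frame_number = table[page_number]  # KeyError if the page is unmapped
    return frame_number * PAGE_SIZE + offset


if __name__ == "__main__":
    for addr in (100, 1500):
        print(addr, "->", translate(addr, page_table))
```

For example, logical address 1500 is page 1, offset 476; page 1 maps to frame 2, so the physical address is 2 × 1024 + 476 = 2524. Because the page size is a power of two, the split into page number and offset is simply a split of the address bits.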

Paging

Paging avoids the external fragmentation problem of multiple partition allocation. The frames allocated to a process need not be contiguous and can reside anywhere in memory, so a process can be given free frames scattered across memory, including small free blocks that contiguous allocation would have left unused.
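A minimal sketch of loading a process into non-contiguous frames, assuming a 1 KB page size and a hypothetical list of free frame numbers. The function `load_process` is illustrative only: it takes free frames in list order, wherever they lie in memory, and builds the page table.

```python
from typing import Dict, List

PAGE_SIZE = 1024  # assumed page size: 1 KB


def load_process(process_size: int, free_frames: List[int]) -> Dict[int, int]:
    """Place each page of a process in any free frame and return its page table.

    Frames are taken from the free list in order, wherever they are in
    memory; they do not have to be adjacent.
    """
    num_pages = (process_size + PAGE_SIZE - 1) // PAGE_SIZE  # round up
    if num_pages > len(free_frames):
        raise MemoryError("not enough free frames")
    page_table = {}
    for page in range(num_pages):
        page_table[page] = free_frames.pop(0)
    return page_table


if __name__ == "__main__":
    free = [3, 9, 4, 12, 7]  # hypothetical, scattered free frame numbers
    print(load_process(3000, free))  # 3000 bytes need 3 pages
    print(free)                      # frames that are still free
```

Here the 3000-byte process is spread over frames 3, 9, and 4, which are not adjacent. Note that the last page holds only 952 bytes, so 72 bytes of its frame go unused; paging can still waste part of a process's last frame.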

Virtual Memory

· In the memory management schemes discussed in the previous section, the whole process must be in memory before execution starts. However, some applications require more memory than is available, so the whole program cannot be loaded into memory.

· Virtual memory allows the execution of those processes that are not completely in memory.

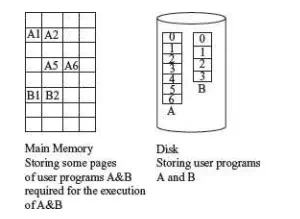

· Virtual memory is commonly implemented by demand paging, which is similar to paging with swapping. Swapping is the transfer of blocks of data between online secondary storage, such as a hard disk, and memory, in either direction.

· In demand paging, processes reside in online secondary storage. When a process executes and needs a page that is not in memory, that page is swapped into memory (Figure). This allows large programs to execute without being loaded completely into memory.

Virtual memory
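To make demand paging concrete, here is a small simulation that counts page faults as a process references its pages; a page is swapped in only when it is needed and not already in memory. The reference string, the number of frames, and the FIFO rule used to choose which page to swap out when all frames are full are assumptions for illustration; the text does not specify a replacement policy.

```python
from collections import deque
from typing import Deque, List, Set


def count_page_faults(references: List[int], num_frames: int) -> int:
    """Simulate demand paging and return the number of page faults.

    A page is swapped in from secondary storage only when it is referenced
    and not already in memory. When all frames are full, the page that was
    loaded earliest is swapped out (FIFO, an assumed replacement policy).
    """
    if num_frames <= 0:
        raise ValueError("need at least one frame")
    resident: Set[int] = set()
    load_order: Deque[int] = deque()
    faults = 0
    for page in references:
        if page in resident:
            continue  # page already in memory: no swap needed
        faults += 1  # page fault: the page must be swapped in
        if len(resident) == num_frames:
            victim = load_order.popleft()  # swap out the oldest page
            resident.remove(victim)
        resident.add(page)
        load_order.append(page)
    return faults


if __name__ == "__main__":
    refs = [0, 1, 2, 0, 3, 0, 1]  # hypothetical page reference string
    print(count_page_faults(refs, 3))  # 4 distinct pages, only 3 frames
    print(count_page_faults(refs, 4))
```

The process touches four distinct pages yet still runs with only three frames, at the cost of 6 page faults instead of 4. This is the trade-off virtual memory makes: a program larger than the available memory can execute, but pages that are swapped out must be swapped in again when they are referenced.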