Process Management

· A process is a program in execution. It is a unit of work for the operating system. A process can be created, executed, and stopped. In contrast, a program is static and has no execution state of its own. A single program may have two or more processes running at the same time. A process and a program are, thus, two different entities.

· To accomplish a task, a process needs access to system resources such as the CPU, memory, and I/O devices. The process management function of an operating system allocates these resources to processes efficiently. Resources are allocated to a process both when it is created and while it executes.

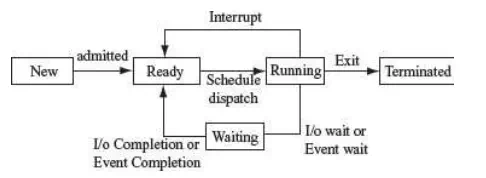

· A process changes its state as it executes. The states a process can pass through during execution are as follows:

Process states

a. New: the process is in the new state while it is being created.

b. Ready: the process is in the ready state when it is waiting to be assigned a processor.

c. Running: the process is in the running state while the processor is executing its instructions.

d. Waiting: the process is in the waiting state when it is waiting for some event to occur (such as the completion of an I/O operation).

e. Terminated: a process that has finished execution is in the terminated state.
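The five states can be modelled as a small state machine. This is a minimal Python sketch, not taken from the text. It assumes the transitions are the conventional textbook ones: admit, dispatch, pre-empt, wait for an event, event completion, and exit. The names `State`, `TRANSITIONS` and `Process` are hypothetical.

```python
from enum import Enum, auto


class State(Enum):
    NEW = auto()
    READY = auto()
    RUNNING = auto()
    WAITING = auto()
    TERMINATED = auto()


# Allowed transitions (assumed: the conventional five-state model).
TRANSITIONS = {
    State.NEW: {State.READY},                 # admitted by the OS
    State.READY: {State.RUNNING},             # dispatched by the scheduler
    State.RUNNING: {State.READY,              # pre-empted, e.g. quantum expired
                    State.WAITING,            # waits for I/O or another event
                    State.TERMINATED},        # finished execution
    State.WAITING: {State.READY},             # the awaited event occurred
    State.TERMINATED: set(),                  # no transitions out
}


class Process:
    def __init__(self, pid: int) -> None:
        self.pid = pid
        self.state = State.NEW  # every process starts in the new state

    def move_to(self, new_state: State) -> None:
        # Reject any change of state the model does not allow.
        if new_state not in TRANSITIONS[self.state]:
            raise ValueError(f"illegal transition {self.state.name} -> {new_state.name}")
        self.state = new_state


p = Process(pid=1)
for s in (State.READY, State.RUNNING, State.WAITING,
          State.READY, State.RUNNING, State.TERMINATED):
    p.move_to(s)
print(p.state.name)  # TERMINATED
```

Note that a process never moves directly from waiting to running. When its event occurs it returns to the ready state and waits for the scheduler again.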

· A system consists of a collection of processes: (1) system processes, which execute system code, and (2) user processes, which execute user code. The OS mainly handles the execution of user code, though it may also handle various system processes.

Concurrent execution of processes requires process synchronization and CPU scheduling. CPU scheduling, process synchronization, communication, and deadlock are described in the following subsections.

CPU Scheduling

· The CPU, or processor, is one of the primary computer resources. All computer resources, such as I/O devices, memory, and the CPU, are scheduled for use.

· CPU scheduling is important for the operating system. In multiprogramming and time-sharing systems, the processor executes multiple processes by switching the CPU among them, so that no user has to wait long for a program to execute. To run several processes concurrently, the operating system has to distribute processor time among all of them efficiently.

· The scheduler is the component of the operating system responsible for scheduling the transitions of processes between states. On a single processor, only one process can be in the running state at any one time; the rest are in the ready or waiting state. The scheduler assigns the processor to processes in such a way that no process is kept waiting for long.

· Scheduling can be non-pre-emptive or pre-emptive. In non-pre-emptive scheduling, a process that is given the processor keeps it until it terminates or voluntarily gives it up, for example to wait for I/O. The scheduler cannot interrupt it. As a result, a long process can hold the processor while others wait, and system resources are not used efficiently. In pre-emptive scheduling, the operating system can interrupt a running process so that another process can execute. Pre-emption allows the operating system to suspend the executing task and handle an important task that requires immediate action, so system resources are used more efficiently.

· Many different CPU scheduling algorithms are used to schedule processes. Some of the common ones are as follows:

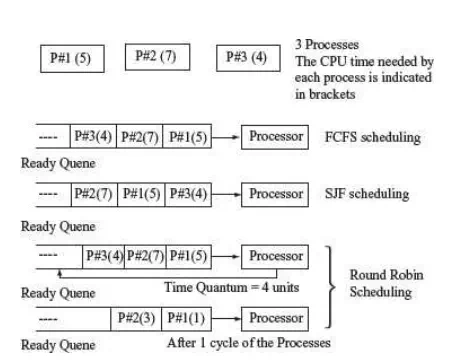

· First Come First Served (FCFS) Scheduling: As the name says, the process that requests the CPU first gets the CPU first. A queue is maintained for the processes requesting the CPU, and the process at the head of the queue is allocated the CPU first. FCFS scheduling is non-pre-emptive. Its drawback is that the process assigned to the CPU may take a long time to complete, keeping all other processes waiting in the queue even if they require less CPU time.

· Shortest Job First (SJF) Scheduling: The process that requires the least CPU time is allocated the CPU first. In the form described here, SJF scheduling is non-pre-emptive. Its drawback is that a process requiring more CPU time may have to wait a long time, since processes requiring less CPU time are always assigned the CPU first.

· Round Robin (RR) Scheduling: RR scheduling is designed for time-sharing systems and is pre-emptive. A small unit of time, called the time quantum, is defined (typically 10 to 100 ms). Each process in the queue is assigned the CPU for one quantum at a time, in circular order. New processes are added at the tail of the queue. A process that has not finished when its quantum expires is pre-empted and moved to the tail, and a process that has finished execution is removed from the queue. RR scheduling overcomes the disadvantage of FCFS and SJF scheduling: a process does not have to wait long for its turn, even if it is not first in the queue or requires the CPU for a long period of time.
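The three algorithms above can be compared with a small simulation. This is a minimal Python sketch, not the source's method, and it rests on several assumptions. All processes arrive at time 0 in the queue order P#1, P#2, P#3 (written `P1`, `P2`, `P3` in the code), with CPU bursts of 5, 7 and 4 units as in this section's example. RR uses a quantum of 2 units, a hypothetical value since the text states quanta only in milliseconds. The function names are illustrative.

```python
from collections import deque

# Ready queue from the example: (name, CPU burst in time units).
# Assumption: all three processes arrive at time 0 in this order.
PROCESSES = [("P1", 5), ("P2", 7), ("P3", 4)]


def run_to_completion(order):
    """Non-pre-emptive: run each process to completion in the given order.
    Returns (timeline, waiting): timeline holds (name, start, end) slices,
    waiting maps each name to its time spent in the ready queue."""
    timeline, waiting, clock = [], {}, 0
    for name, burst in order:
        waiting[name] = clock  # arrived at 0, so it waits until it starts
        timeline.append((name, clock, clock + burst))
        clock += burst
    return timeline, waiting


def fcfs(procs):
    return run_to_completion(procs)  # keep the arrival (queue) order


def sjf(procs):
    return run_to_completion(sorted(procs, key=lambda p: p[1]))  # shortest burst first


def round_robin(procs, quantum):
    """Pre-emptive: each process runs for at most one quantum per turn."""
    queue = deque(procs)
    bursts = dict(procs)
    timeline, finish, clock = [], {}, 0
    while queue:
        name, remaining = queue.popleft()
        run = min(quantum, remaining)
        timeline.append((name, clock, clock + run))
        clock += run
        if remaining > run:
            queue.append((name, remaining - run))  # quantum expired: back to the tail
        else:
            finish[name] = clock  # finished: leaves the queue
    # All arrived at 0, so waiting time = completion time - burst time.
    waiting = {name: finish[name] - bursts[name] for name in finish}
    return timeline, waiting


for label, (timeline, waiting) in [
    ("FCFS", fcfs(PROCESSES)),
    ("SJF", sjf(PROCESSES)),
    ("RR, q=2", round_robin(PROCESSES, quantum=2)),
]:
    avg = sum(waiting.values()) / len(waiting)
    print(label, waiting, f"average wait = {avg:.2f}")
# FCFS {'P1': 0, 'P2': 5, 'P3': 12} average wait = 5.67
# SJF {'P3': 0, 'P1': 4, 'P2': 9} average wait = 4.33
# RR, q=2 {'P3': 8, 'P1': 8, 'P2': 9} average wait = 8.33
```

With these inputs SJF gives the lowest average waiting time. RR gives the highest average wait, but every process gets its first turn on the CPU by time 4. Under FCFS, by contrast, P#3 does not start until time 12. This shorter wait for a first turn is what RR buys with its extra switching.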

Illustrating CPU scheduling algorithms with an example

The figure shows a ready queue containing P#1, which requires 5 units of CPU time, P#2, which requires 7 units, and P#3, which requires 4 units, scheduled using the different CPU scheduling algorithms.

Process Synchronization

In a computer, multiple processes execute at the same time. Processes that share resources must communicate with one another so that one process does not disrupt another.

· When two or more processes execute at the same time, independent of each other, they are called concurrent processes.

· A race condition is a situation in which multiple processes access and manipulate the same data concurrently, and the final result depends on the order in which the processes execute. Handling such situations requires synchronization and coordination of the processes.
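A race condition can be reproduced deterministically by replaying a chosen interleaving by hand. The minimal Python sketch below does this first. It then shows the same increment protected by a lock. Threads stand in for processes here, and the `run` helper and its step encoding are hypothetical, for illustration only.

```python
import threading


def run(schedule):
    """Replay a chosen interleaving of two 'processes', A and B, that each
    increment a shared counter in three steps: read, add one, write back.
    Each character in schedule advances that process by one step."""
    shared = {"counter": 0}
    local = {"A": 0, "B": 0}  # each process's private copy of the value
    step = {"A": 0, "B": 0}
    for who in schedule:
        if step[who] == 0:
            local[who] = shared["counter"]  # read
        elif step[who] == 1:
            local[who] += 1                 # add one
        else:
            shared["counter"] = local[who]  # write back
        step[who] += 1
    return shared["counter"]


# A finishes before B starts: the result is 2, as intended.
print(run("AAABBB"))  # 2
# Both read 0 before either writes: one update is lost.
print(run("ABABAB"))  # 1

# Synchronization: a lock makes the read-modify-write indivisible.
counter = 0
lock = threading.Lock()


def worker(n):
    global counter
    for _ in range(n):
        with lock:  # only one thread at a time executes the increment
            counter += 1


threads = [threading.Thread(target=worker, args=(10_000,)) for _ in range(4)]
for t in threads:
    t.start()
for t in threads:
    t.join()
print(counter)  # 40000
```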

Deadlock

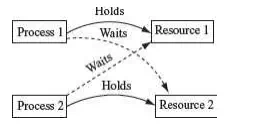

· In a multiprogramming environment, multiple processes may try to access the same resource. A deadlock is a situation in which a process waits indefinitely for a resource held by another process, which is itself waiting for some other resource.

· A deadlock can arise only when the following four necessary conditions hold simultaneously in a system:

· Mutual Exclusion: Only one process at a time can use the resource. Any other process requesting the resource has to wait until the resource is released.

· No Pre-emption: A resource can be released only voluntarily by the process holding it; another process cannot forcibly take it away.

· Hold and Wait: A process holds at least one resource while requesting another resource, which may be currently held by another process.

· Circular Wait: There is a set of waiting processes P1, P2, ..., Pn in which P1 waits for a resource held by P2, P2 waits for a resource held by P3, and so on, with Pn waiting for a resource held by P1. In the simplest case, P1 waits for a resource held by P2 while P2 waits for a resource held by P1.
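The circular-wait condition can be checked mechanically on a snapshot of the system. The minimal Python sketch below is an illustration, not a method given in the text. It assumes each resource has a single instance and each blocked process waits for exactly one resource. It maps each blocked process to the process holding what it wants, then follows that mapping looking for a loop. The names `holder`, `waits_for` and `find_circular_wait` are hypothetical.

```python
def find_circular_wait(holder, waits_for):
    """Return the processes in a circular wait, or None if there is none.

    holder:    resource -> process currently holding it
    waits_for: blocked process -> resource it is waiting for
    Assumes single-instance resources and one pending request per process.
    """
    # Wait-for mapping: each blocked process -> process holding what it wants.
    edge = {p: holder[r] for p, r in waits_for.items() if r in holder}
    for start in edge:
        path, p = [], start
        # Follow the chain of "is waiting for" until it ends or repeats.
        while p in edge and p not in path:
            path.append(p)
            p = edge[p]
        if p in path:  # the chain looped back: a circular wait
            return path[path.index(p):]
    return None


# Three processes, each holding one resource and waiting for the next one's.
holder = {"R1": "P1", "R2": "P2", "R3": "P3"}
waits_for = {"P1": "R2", "P2": "R3", "P3": "R1"}
print(find_circular_wait(holder, waits_for))  # ['P1', 'P2', 'P3']

# Remove P3's request and the cycle is broken: P3 can finish and release R3.
del waits_for["P3"]
print(find_circular_wait(holder, waits_for))  # None
```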

Deadlocks can be handled by deadlock prevention or by deadlock avoidance.

· Deadlock Prevention is a set of methods that ensure that at least one of the four necessary conditions for deadlock listed above cannot hold.

· Deadlock Avoidance requires that the operating system be given information in advance about the resources a process will request and use. The operating system uses this information to decide, for each request, whether it can be granted safely, so that resources are allocated only in ways that cannot lead to a deadlock.
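As an illustration of deadlock prevention, one common technique breaks the circular-wait condition (the text does not prescribe a particular one). Every resource gets a fixed rank, and every process acquires the resources it needs in increasing rank order. The minimal Python sketch below uses threads and `threading.Lock` as stand-ins for processes and resources. The `Resource` class, the ranks, and the resource names are hypothetical.

```python
import threading


class Resource:
    """A resource guarded by a lock, with a fixed rank in a global ordering."""

    def __init__(self, name, rank):
        self.name = name
        self.rank = rank
        self.lock = threading.Lock()


def use_both(first, second, log):
    # Whatever order the caller names the resources in, lock them in
    # ascending rank. Every thread then waits only "upwards" in the ordering,
    # so a cycle of waiting threads cannot form.
    low, high = sorted((first, second), key=lambda r: r.rank)
    with low.lock:
        with high.lock:
            log.append((first.name, second.name))


def worker(first, second, log, rounds):
    for _ in range(rounds):
        use_both(first, second, log)


printer = Resource("printer", rank=1)
disk = Resource("disk", rank=2)
log = []

# The two threads name the resources in opposite orders; if each simply
# locked 'first' and then 'second', this pattern could deadlock.
t1 = threading.Thread(target=worker, args=(printer, disk, log, 1000))
t2 = threading.Thread(target=worker, args=(disk, printer, log, 1000))
t1.start()
t2.start()
t1.join()
t2.join()
print(len(log))  # 2000
```

Ordering only removes circular wait. Mutual exclusion, hold and wait, and no pre-emption still hold, but since one of the four conditions is gone, deadlock cannot occur.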
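One well-known deadlock avoidance algorithm is Dijkstra's Banker's algorithm, which the text does not name. Each process declares its maximum claim in advance. A request is granted only if, afterwards, there is still some order in which every process could obtain its remaining need and finish; such a state is called a safe state. The minimal Python sketch below implements this check. The function names and the example snapshot are hypothetical.

```python
def is_safe(available, max_claim, allocation):
    """Banker's-algorithm safety check.

    available:  free units of each resource type
    max_claim:  for each process, the maximum units it declared in advance
    allocation: for each process, the units it currently holds
    Returns (safe, order), where order lists the processes that could finish.
    """
    n = len(max_claim)
    # What each process may still request, according to its declared maximum.
    need = [[m - a for m, a in zip(max_claim[i], allocation[i])] for i in range(n)]
    work = list(available)
    finished = [False] * n
    order = []
    progress = True
    while progress:
        progress = False
        for i in range(n):
            if not finished[i] and all(nd <= w for nd, w in zip(need[i], work)):
                # Process i can obtain everything it still needs, run to
                # completion, and then return everything it holds.
                work = [w + a for w, a in zip(work, allocation[i])]
                finished[i] = True
                order.append(i)
                progress = True
    return all(finished), order


def try_request(pid, request, available, max_claim, allocation):
    """Return True if granting the request leaves the system in a safe state."""
    need = [m - a for m, a in zip(max_claim[pid], allocation[pid])]
    if any(r > nd for r, nd in zip(request, need)):
        raise ValueError("request exceeds the process's declared maximum")
    if any(r > av for r, av in zip(request, available)):
        return False  # not enough free units right now: the process must wait
    # Tentatively grant the request on copies, then test the resulting state.
    new_available = [av - r for av, r in zip(available, request)]
    new_allocation = [row[:] for row in allocation]
    new_allocation[pid] = [a + r for a, r in zip(allocation[pid], request)]
    safe, _ = is_safe(new_available, max_claim, new_allocation)
    return safe  # False means the caller should make the process wait


# Hypothetical snapshot: 5 processes, 3 resource types.
AVAILABLE = [3, 3, 2]
MAX_CLAIM = [[7, 5, 3], [3, 2, 2], [9, 0, 2], [2, 2, 2], [4, 3, 3]]
ALLOCATION = [[0, 1, 0], [2, 0, 0], [3, 0, 2], [2, 1, 1], [0, 0, 2]]

print(is_safe(AVAILABLE, MAX_CLAIM, ALLOCATION))                    # (True, [1, 3, 4, 0, 2])
print(try_request(1, [1, 0, 2], AVAILABLE, MAX_CLAIM, ALLOCATION))  # True
# One resource type, two processes each holding 2 of 5 units and claiming 4:
print(is_safe([1], [[4], [4]], [[2], [2]]))                         # (False, [])
```

The unsafe example is not yet a deadlock. However, if both processes then requested their remaining 2 units, neither request could ever be met, which is why avoidance refuses to enter such a state.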