Composing Jazz Music with Deep Learning

Deep learning is on the rise, with applications ranging from computer vision and natural language processing to healthcare, speech recognition, art generation, adding sound to silent movies, machine translation, advertising, and self-driving cars. In this blog, we will extend the power of deep learning to the domain of music production and talk about how we can use it to generate new pieces of music.

Technological advancements have transformed the way we produce, listen to, and work with music. With the advent of deep learning, it is now possible to generate music without needing instruments that artists may not have access to or the skills to play. This gives artists more creative freedom and the ability to explore different domains of music.

Recurrent Neural Networks

Since music is a sequence of notes and chords, it doesn’t have a fixed dimensionality. Standard feed-forward deep neural networks are a poor fit for generating music because they assume that the inputs and targets/outputs have a fixed dimensionality and that the outputs are independent of each other. A domain-independent method that learns to map sequences to sequences is therefore useful.

Recurrent neural networks (RNNs) are a class of artificial neural networks that make use of sequential information present in the data.

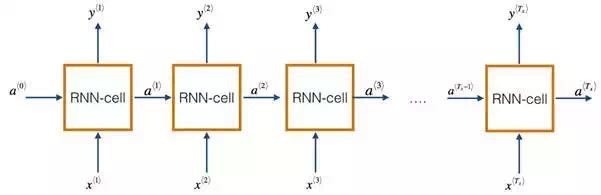

Fig. 1 A basic RNN unit.

A recurrent neural network has looped, or recurrent, connections which allow the network to hold information across inputs. These connections can be thought of as memory cells. In other words, RNNs can make use of information learned in previous time steps. As seen in Fig. 1, the output of the hidden/activation layer at one time step is fed back into the hidden layer at the next time step, together with the next input. Such an architecture is well suited to learning from sequence-based data.
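To make the recurrence concrete, here is a minimal sketch of a forward pass through a single-layer RNN in plain Python. The function name `rnn_forward`, the tanh activation, and the toy weights are assumptions chosen for illustration; they are not the model used later in this blog.

```python
import math


def rnn_forward(inputs, w_xh, w_hh, b_h):
    """Run a single-layer RNN over a sequence and return every hidden state.

    inputs: list of input vectors, one per time step
    w_xh:   input-to-hidden weights, indexed [hidden][input]
    w_hh:   hidden-to-hidden (recurrent) weights, indexed [hidden][hidden]
    b_h:    hidden bias, one value per hidden unit
    """
    hidden_size = len(b_h)
    h = [0.0] * hidden_size  # initial hidden state: nothing remembered yet
    states = []
    for x in inputs:
        new_h = []
        for i in range(hidden_size):
            total = b_h[i]
            # Contribution of the current input.
            total += sum(w_xh[i][j] * x[j] for j in range(len(x)))
            # Contribution of the previous hidden state (the "memory").
            total += sum(w_hh[i][k] * h[k] for k in range(hidden_size))
            new_h.append(math.tanh(total))
        h = new_h
        states.append(h)
    return states


# Toy weights (hypothetical values): 1 input feature, 2 hidden units.
W_XH = [[0.5], [-0.3]]
W_HH = [[0.1, 0.2], [0.0, 0.4]]
B_H = [0.0, 0.0]

# The same weights handle sequences of different lengths.
short_states = rnn_forward([[1.0], [0.0]], W_XH, W_HH, B_H)
longer_states = rnn_forward([[1.0], [0.0], [0.0], [1.0]], W_XH, W_HH, B_H)
```

Note that the second input in `short_states` is zero, yet the second hidden state is not: the information from the first time step is carried forward through the recurrent weights.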

In this blog, we will be using the Long Short-Term Memory (LSTM) architecture. LSTM is a type of recurrent neural network (proposed by Hochreiter and Schmidhuber, 1997) that can remember a piece of information and retain it over many time steps.

Dataset

Our dataset includes piano tunes stored in the MIDI format. MIDI (Musical Instrument Digital Interface) is a protocol which allows electronic instruments and other digital musical tools to communicate with each other. Since a MIDI file stores only performance instructions, i.e., a series of messages like ‘note on’ and ‘note off’, rather than recorded audio, it is compact, easy to modify, and can be adapted to any instrument.
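As a rough illustration of what such messages carry, the sketch below pairs ‘note on’ and ‘note off’ events into notes with a start time and a duration. The tuple layout `(kind, pitch, time)` and the helper `pair_note_events` are simplifying assumptions: real MIDI messages also carry a channel and a velocity, and MIDI files store times as deltas in ticks rather than as absolute values.

```python
def pair_note_events(events):
    """Convert (kind, pitch, time) events into (pitch, start, duration) notes.

    kind is either "note_on" or "note_off"; pitch is a MIDI note number;
    time is an absolute time in beats (a simplification for this sketch).
    """
    active = {}  # pitch -> start time of the note that is currently sounding
    notes = []
    for kind, pitch, time in events:
        if kind == "note_on":
            active[pitch] = time
        elif kind == "note_off" and pitch in active:
            start = active.pop(pitch)
            notes.append((pitch, start, time - start))
    return notes


# Hypothetical event stream: two notes held together, then a shorter third note.
events = [
    ("note_on", 60, 0.0),
    ("note_on", 64, 0.0),
    ("note_off", 60, 1.0),
    ("note_off", 64, 1.0),
    ("note_on", 67, 1.0),
    ("note_off", 67, 1.5),
]
notes = pair_note_events(events)
```

Because the file only says which notes start and stop and when, the same event stream can be played back on any instrument.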

Before we move forward, let us understand some music-related terminology:

· Note: A note is either a single sound or its representation in notation. Each note consists of a pitch, an octave, and an offset.

· Pitch: Pitch refers to the frequency of the sound.

· Octave: An octave is the interval between one musical pitch and another with half or double its frequency.

· Offset: The offset refers to the position of the note in time within the piece.

· Chord: Playing multiple notes at the same time constitutes a chord.
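The relationship between these terms can be sketched in a few lines of Python. The example assumes twelve-tone equal temperament with A4 tuned to 440 Hz and the common convention that MIDI note number 60 is C4; the helper names `midi_to_frequency` and `midi_to_name` are made up for this illustration.

```python
NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]


def midi_to_frequency(midi_number, a4_hz=440.0):
    """Frequency in Hz of a MIDI note number (assumes A4 = MIDI 69 = 440 Hz)."""
    return a4_hz * 2 ** ((midi_number - 69) / 12)


def midi_to_name(midi_number):
    """Pitch name plus octave, using the convention that MIDI 60 is C4."""
    octave = midi_number // 12 - 1
    return f"{NOTE_NAMES[midi_number % 12]}{octave}"


# Moving up one octave (12 semitones) doubles the frequency.
a4 = midi_to_frequency(69)
a5 = midi_to_frequency(81)

# A chord is several notes sharing the same offset (start position).
c_major_chord = [60, 64, 67]
chord_names = [midi_to_name(n) for n in c_major_chord]
chord_with_offset = [(name, 0.0) for name in chord_names]
```

Here `a5` is twice `a4`, which is exactly the octave relationship described above, and the three notes of the chord differ in pitch but share one offset.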