Object detection for self-driving cars – Part 3

Model Architecture

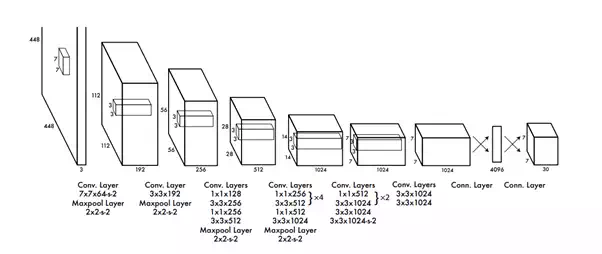

The YOLO model has the following architecture (see Fig 3). The network has 24 convolutional layers followed by two fully connected layers. Alternating 1 × 1 convolutional layers reduce the features space from preceding layers. The convolutional layers are pretrained on the ImageNet classification task at half the resolution (224 × 224 input image) and then double the resolution for detection.

Fig 3. The YOLO Archtecture (Image taken from the official YOLO paper)

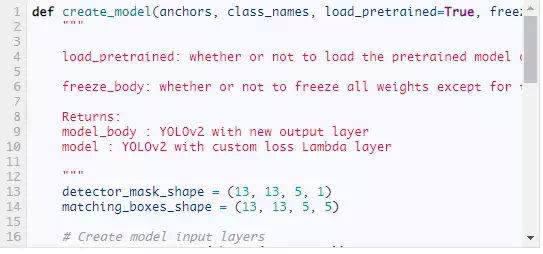

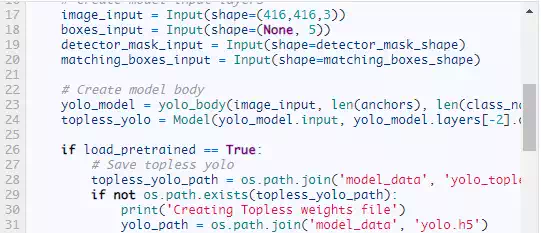

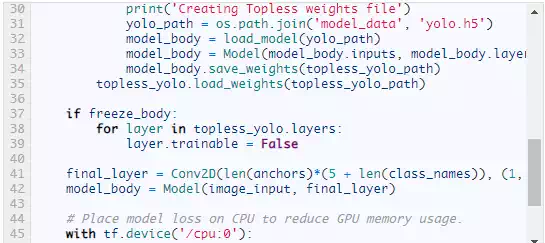

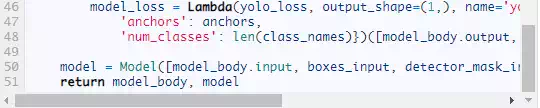

We will be using pre trained YOLOv2 model, which has been trained on the COCO image dataset with classes similar to the Berkeley Driving Dataset. So, we will use the YOLOv2 pretrained network as a feature extractor. We will load the pretrained weights of the YOLOv2 model and will freeze all the weights except for the last layer during training of the model. We will remove the last convolutional layer of the YOLOv2 model and replace it with a new convolutional layer indicating the number of classes (8 classes as defined earlier) to be predicted. This is implemented in the following code.

Due to limited computational power, we used only the first 1000 images present in the training dataset to train the model. Finally, we trained the model for 20 epochs and saved the model weights with the lowest loss.