Object detection for self-driving cars – Part 2

Defining the Model

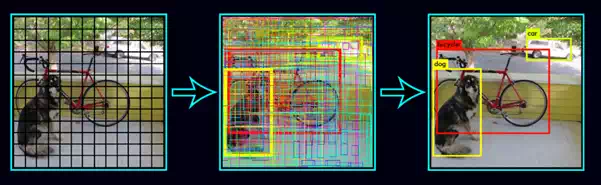

Instead of building the model from scratch, we will use a pre-trained network and apply transfer learning to create our final model. You Only Look Once (YOLO) is a state-of-the-art, real-time object detection system; its second version, YOLOv2, reports a mAP of 78.6% on VOC 2007 and 48.1% on COCO test-dev. YOLO applies a single neural network to the full image. The network divides the image into regions and predicts bounding boxes and probabilities for each region, and the bounding boxes are weighted by the predicted probabilities.
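To make the grid description concrete, here is a small sketch of the output-size arithmetic for the original YOLO (v1) layout. In that layout, each of the S × S grid cells predicts B boxes of five values (x, y, w, h, confidence) plus C class probabilities; S = 7, B = 2 and C = 20 are the PASCAL VOC settings from the YOLOv1 paper. The second helper illustrates the weighting step: a box's confidence is multiplied by the cell's conditional class probabilities to give class-specific scores. The function names are hypothetical, and later versions (YOLOv2 onward) use anchor boxes with a different output layout.

```python
def yolo_output_size(S, B, C):
    """Number of values predicted by a YOLOv1-style head:
    S x S cells, each with B boxes of (x, y, w, h, confidence) and C class probabilities."""
    return S * S * (B * 5 + C)


def class_specific_scores(box_confidence, class_probs):
    """Weight one box's confidence by each conditional class probability of its cell."""
    return [box_confidence * p for p in class_probs]


print(yolo_output_size(7, 2, 20))  # 7 * 7 * 30 = 1470
```
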

One of YOLO's advantages is that it looks at the whole image at test time, so its predictions are informed by global context in the image. Unlike R-CNN, which requires thousands of network evaluations for a single image, YOLO makes its predictions with a single network evaluation. This makes the algorithm extremely fast: more than 1000x faster than R-CNN and 100x faster than Fast R-CNN.

Loss Function

If the target variable y is defined as

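$$y = \begin{bmatrix} p_c & b_x & b_y & b_h & b_w & c_1 & c_2 & \cdots & c_8 \end{bmatrix}^T = \begin{bmatrix} y_1 & y_2 & y_3 & y_4 & y_5 & y_6 & y_7 & \cdots & y_{13} \end{bmatrix}^T \tag{3}$$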

the loss function for object localization is defined as

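$$\mathcal{L}(\hat{y}, y) = \begin{cases} (\hat{y}_1 - y_1)^2 + (\hat{y}_2 - y_2)^2 + \cdots + (\hat{y}_{13} - y_{13})^2, & \text{if } y_1 = 1 \\ (\hat{y}_1 - y_1)^2, & \text{if } y_1 = 0 \end{cases} \tag{4}$$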
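Eq. (4) translates almost directly into code. The sketch below assumes plain Python lists of 13 numbers ordered as [p_c, b_x, b_y, b_h, b_w, c_1, ..., c_8]; the function name is hypothetical. When no object is present, only the first entry is compared, because the box and class entries carry no meaning in that case.

```python
def localization_loss(y_hat, y):
    """Squared-error loss of Eq. (4) for 13-element vectors
    [p_c, b_x, b_y, b_h, b_w, c_1, ..., c_8]."""
    if len(y_hat) != 13 or len(y) != 13:
        raise ValueError("expected 13-element vectors")
    if y[0] == 1:
        # Object present: penalize every component of the prediction.
        return sum((a - b) ** 2 for a, b in zip(y_hat, y))
    # No object: only p_c is compared; box and class entries are ignored.
    return (y_hat[0] - y[0]) ** 2


# Hypothetical example: object of class 1 present, with p_c and c_1 slightly off.
y = [1, 0.5, 0.5, 0.2, 0.3, 1, 0, 0, 0, 0, 0, 0, 0]
y_hat = [0.9, 0.5, 0.5, 0.2, 0.3, 0.8, 0, 0, 0, 0, 0, 0, 0]
print(localization_loss(y_hat, y))
```
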

In the YOLO algorithm, the loss is calculated in the following steps:

· Find the predicted bounding boxes with the highest IoU with the true bounding boxes.

· Calculate the confidence loss (based on the probability of an object being present inside the bounding box).

· Calculate the classification loss (based on the probability of each class being present inside the bounding box).

· Calculate the coordinate loss for the matching detected boxes.

· The total loss is the sum of the confidence loss, classification loss, and coordinate loss.
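The first step, matching predictions to the ground truth by IoU, can be sketched as follows. This is a minimal stdlib-only version that assumes boxes are given as corner coordinates (x_min, y_min, x_max, y_max); the helper names `iou` and `best_predictor` are hypothetical.

```python
def iou(box_a, box_b):
    """Intersection over union of two boxes given as (x_min, y_min, x_max, y_max)."""
    # Corners of the overlapping rectangle (may be empty).
    ix_min = max(box_a[0], box_b[0])
    iy_min = max(box_a[1], box_b[1])
    ix_max = min(box_a[2], box_b[2])
    iy_max = min(box_a[3], box_b[3])
    inter = max(0.0, ix_max - ix_min) * max(0.0, iy_max - iy_min)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def best_predictor(pred_boxes, truth_box):
    """Index of the predicted box with the highest IoU with the ground-truth box."""
    return max(range(len(pred_boxes)), key=lambda j: iou(pred_boxes[j], truth_box))


# Hypothetical example: two predictors in one grid cell and one ground-truth box.
print(best_predictor([(1, 1, 3, 3), (0, 0, 2, 1)], (0, 0, 2, 2)))
```

The predictor selected this way is the one treated as "responsible" for the object when the coordinate and confidence losses are computed.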

Using the steps defined above, let’s calculate the loss function for the YOLO algorithm.

In general, the target variable is defined as

$$y = \begin{bmatrix} p_i(c) & x_i & y_i & h_i & w_i & C_i \end{bmatrix}^T \tag{5}$$

where,

$p_i(c)$: conditional probability of class $c$, given that an object is present.

$x_i$, $y_i$: coordinates of the center of the bounding box.

$w_i$: width of the bounding box relative to the image width.

$h_i$: height of the bounding box relative to the image height.

$C_i$: confidence score, i.e. the predicted probability that an object is present in the bounding box.
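Before a predicted box can be compared with a ground-truth box, its encoded coordinates have to be mapped back to the image. The sketch below assumes the YOLOv1 convention that x and y are the center's offsets within its grid cell (between 0 and 1), while w and h are fractions of the image width and height. The function name and its parameters (`row`, `col`, `grid_size`, `img_w`, `img_h`) are illustrative only.

```python
def to_pixel_corners(x, y, w, h, row, col, grid_size, img_w, img_h):
    """Convert a YOLO-style box to pixel corners (x_min, y_min, x_max, y_max).

    Assumes x, y are the center's offsets inside grid cell (row, col), in [0, 1],
    and w, h are fractions of the image width and height.
    """
    cx = (col + x) / grid_size * img_w  # center x in pixels
    cy = (row + y) / grid_size * img_h  # center y in pixels
    bw = w * img_w                      # box width in pixels
    bh = h * img_h                      # box height in pixels
    return (cx - bw / 2, cy - bh / 2, cx + bw / 2, cy + bh / 2)


# Hypothetical example: a 7 x 7 grid on a 448 x 448 image, box centered in cell (3, 3).
print(to_pixel_corners(0.5, 0.5, 0.25, 0.25, 3, 3, 7, 448, 448))
```
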

then the corresponding loss function is calculated as

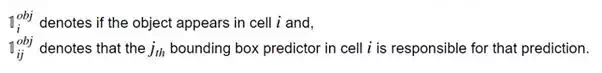

where,

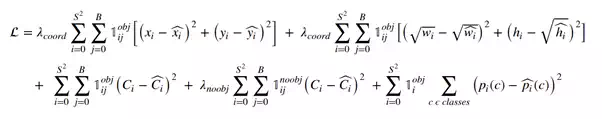

The above equation is the YOLO loss function. It may seem daunting at first, but on closer inspection it is simply the sum of three parts: the coordinate loss, the confidence loss, and the classification loss. Sum of squared errors is used because it is easy to optimize. However, it weights localization error equally with classification error, which may not be ideal. In addition, most grid cells in an image contain no object, and the confidence errors from those cells can overwhelm the gradient from the cells that do. To remedy this, we increase the loss from bounding box coordinate predictions and decrease the loss from confidence predictions for boxes that don’t contain objects. We use two parameters, λcoord and λnoobj, to accomplish this (the original YOLO paper sets λcoord = 5 and λnoobj = 0.5).
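Putting the pieces together, here is a minimal stdlib-only sketch of this loss. It assumes λcoord = 5 and λnoobj = 0.5 (the values from the original YOLO paper), compares square roots of width and height as the paper does, and takes the index of the responsible predictor in each object-containing cell as an input (picked beforehand as the one with the highest IoU). As a simplification, the confidence target of the responsible box is 1; the original paper uses the IoU between the predicted and ground-truth boxes instead. The data layout and all names are hypothetical.

```python
import math

LAMBDA_COORD = 5.0  # weight on coordinate errors (original YOLO paper value)
LAMBDA_NOOBJ = 0.5  # weight on confidence errors of boxes without objects


def yolo_loss(pred_boxes, pred_classes, truth, responsible):
    """Sum-squared YOLO loss over all grid cells.

    pred_boxes[i][j] -> (x, y, w, h, conf) for predictor j in cell i
    pred_classes[i]  -> predicted class probabilities for cell i
    truth[i]         -> None, or (x, y, w, h, class_probs) if an object is centered in cell i
    responsible[i]   -> index j of the predictor responsible for truth[i]
    """
    coord = conf_obj = conf_noobj = cls = 0.0
    for i, boxes in enumerate(pred_boxes):
        gt = truth[i]
        for j, (x, y, w, h, c) in enumerate(boxes):
            if gt is not None and responsible[i] == j:
                gx, gy, gw, gh, _ = gt
                # Coordinate loss: center offsets plus square roots of width and height.
                coord += (x - gx) ** 2 + (y - gy) ** 2
                coord += (math.sqrt(w) - math.sqrt(gw)) ** 2 + (math.sqrt(h) - math.sqrt(gh)) ** 2
                # Confidence loss for the box responsible for an object (target assumed 1).
                conf_obj += (c - 1.0) ** 2
            else:
                # Confidence loss for boxes not responsible for any object (target 0).
                conf_noobj += c ** 2
        if gt is not None:
            # Classification loss, only for cells that contain an object.
            cls += sum((p - t) ** 2 for p, t in zip(pred_classes[i], gt[4]))
    return LAMBDA_COORD * coord + conf_obj + LAMBDA_NOOBJ * conf_noobj + cls


# Hypothetical example: 2 cells, 2 predictors per cell, 2 classes; one object in cell 0.
pred_boxes = [[(0.5, 0.5, 0.25, 0.25, 0.8), (0.4, 0.4, 0.16, 0.16, 0.3)],
              [(0.5, 0.5, 0.09, 0.09, 0.2), (0.5, 0.5, 0.04, 0.04, 0.1)]]
pred_classes = [[0.9, 0.1], [0.5, 0.5]]
truth = [(0.6, 0.5, 0.16, 0.25, [1.0, 0.0]), None]
print(yolo_loss(pred_boxes, pred_classes, truth, {0: 0}))
```

Note how the two weights act in opposite directions: coordinate errors of responsible boxes are amplified fivefold, while the confidence errors of the many empty boxes count only half.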

Note that the loss function only penalizes classification error if an object is present in that grid cell. It also only penalizes bounding box coordinate error if that predictor is responsible for the ground truth box (i.e. it has the highest IoU of any predictor in that grid cell).