Let us first consider what kinds of knowledge might need to be represented in AI systems:

Objects

-- Facts about objects in our world domain, e.g. guitars have strings and trumpets are brass instruments.

Events

-- Actions that occur in our world, e.g. Steve Vai played the guitar in Frank Zappa's band.

Performance

-- A behavior like playing the guitar involves knowledge about how to do things.

Meta-knowledge

-- Knowledge about what we know, e.g. Bobrow's robot that plans a trip: it knows that it can read street signs along the way to find out where it is.

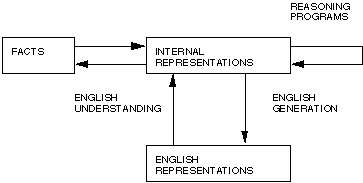

Thus in solving problems in AI we must represent knowledge and there are two entities to deal with:

Facts

-- truths about the real world, which are what we want to represent. This can be regarded as the knowledge level.

Representation of the facts

-- which we manipulate. This can be regarded as the symbol level, since we usually define the representation in terms of symbols that can be manipulated by programs.

We can structure these entities at two levels:

the knowledge level

-- at which facts are described

the symbol level

-- at which representations of objects are defined in terms of symbols that can be manipulated in programs (see Fig. 5)

Fig. 5 Two Entities in Knowledge Representation

English or natural language is an obvious way of representing and handling facts. Logic enables us to represent the fact "Spot is a dog" as:

dog(Spot)

We could then represent the fact that all dogs have tails with:

∀x : dog(x) → hasatail(x)

We can then deduce:

hasatail(Spot)

Using an appropriate backward mapping function, the English sentence "Spot has a tail" can be generated.
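The round trip from fact to symbol and back can be sketched in a few lines of Python. This is only a minimal illustration, not a definitive implementation: the tuple encoding of facts, the `forward_chain` and `to_english` helpers and the `templates` table are all assumptions introduced here.

```python
# Symbol-level sketch: each fact is a (predicate, argument) tuple.
facts = {("dog", "Spot")}

# The rule "for all x: dog(x) -> hasatail(x)", stored as (premise, conclusion).
rules = [("dog", "hasatail")]

# Hypothetical backward-mapping templates from predicates to English.
templates = {"dog": "{} is a dog", "hasatail": "{} has a tail"}


def forward_chain(facts, rules):
    """Apply every rule repeatedly until no new fact can be derived."""
    derived = set(facts)
    changed = True
    while changed:
        changed = False
        for premise, conclusion in rules:
            for predicate, arg in list(derived):
                new_fact = (conclusion, arg)
                if predicate == premise and new_fact not in derived:
                    derived.add(new_fact)
                    changed = True
    return derived


def to_english(fact):
    """Map an internal fact back to an English sentence."""
    predicate, arg = fact
    return templates[predicate].format(arg)


known = forward_chain(facts, rules)
print(to_english(("hasatail", "Spot")))  # Spot has a tail
```

The program only ever manipulates tuples of symbols. The meaning comes from the mapping functions at either end.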

The available mapping functions are not always one to one but rather many to many, which is a characteristic of English representations. The sentences "All dogs have tails" and "Every dog has a tail" both say that each dog has a tail, but the first could also be read as saying that each dog has more than one tail (try substituting teeth for tails). When an AI program manipulates the internal representation of facts, the new representations it produces should also be interpretable as representations of facts.

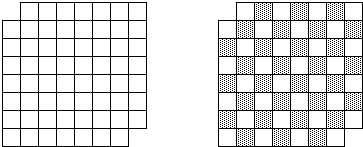

Consider the classic problem of the mutilated chess board. Problem: on a normal chess board, two opposite corner squares have been removed. The task is to cover all the squares on the remaining board with dominoes so that each domino covers exactly two squares and no dominoes overlap. Can it be done? Consider three data structures.

Fig. 3.1 Mutilated Checker

The first two are illustrated in the diagrams above, and the third is simply the number of black squares and the number of white squares. The first diagram loses the colour of the squares, and a solution is not easy to see. The second preserves the colours but offers no easier path. Counting the squares of each colour, however, gives 32 black and 30 white, because the two removed corners have the same colour. This yields an immediate answer of NO: every domino must cover one white square and one black square, so the numbers of black and white squares must be equal for a solution to exist.
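The counting representation is simple enough to check directly. The sketch below is illustrative only. It assumes that square (0, 0) is a white corner, so that the two removed corners are both white, and the function names `colour_counts` and `may_be_tileable` are hypothetical.

```python
def colour_counts(removed, size=8):
    """Count the squares of each colour left after removing the given squares."""
    counts = {"black": 0, "white": 0}
    for row in range(size):
        for col in range(size):
            if (row, col) in removed:
                continue
            # Assumed convention: (0, 0) is white and colours alternate.
            colour = "white" if (row + col) % 2 == 0 else "black"
            counts[colour] += 1
    return counts


def may_be_tileable(counts):
    """Necessary condition only: each domino covers one black and one white square."""
    return counts["black"] == counts["white"]


# Remove two opposite corners, which share a colour.
mutilated = colour_counts({(0, 0), (7, 7)})
print(mutilated)                   # {'black': 32, 'white': 30}
print(may_be_tileable(mutilated))  # False
```

Equal counts are a necessary condition, not a sufficient one, so the check can rule a tiling out but cannot by itself prove that one exists.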

Using Knowledge

We have briefly mentioned where knowledge is used in AI systems. Let us consider in a little more detail the applications in which knowledge is used and how it is used.

Learning

-- acquiring knowledge. This is more than simply adding new facts to a knowledge base. New data may have to be classified prior to storage for easy retrieval. It may also have to interact with existing facts, through inference, to avoid redundancy and replication in the knowledge base and so that existing facts can be updated.

Retrieval

-- The representation scheme used can have a critical effect on the efficiency of the retrieval method. Humans are very good at retrieval.

Many AI methods have tried to model human retrieval (see lecture on distributed reasoning).

Reasoning

-- Infer facts from existing data.

Suppose a system only knows:

- Miles Davis is a Jazz Musician.

- All Jazz Musicians can play their instruments well.

If questions like "Is Miles Davis a Jazz Musician?" or "Can Jazz Musicians play their instruments well?" are asked, then the answer is readily obtained from the data structures and procedures.

However, a question like "Can Miles Davis play his instrument well?" requires reasoning.
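The difference between looking a fact up and reasoning to it can be sketched as follows. The two sets and the helper names `lookup_is_a`, `lookup_category_has` and `infer_has` are assumptions made for this illustration, not a prescribed design.

```python
# Stored facts about individuals: (individual, category).
is_a = {("Miles Davis", "Jazz Musician")}

# Stored general statements: (category, property).
category_has = {("Jazz Musician", "plays instrument well")}


def lookup_is_a(individual, category):
    """Retrieval: is this membership fact stored explicitly?"""
    return (individual, category) in is_a


def lookup_category_has(category, prop):
    """Retrieval: is this general statement stored explicitly?"""
    return (category, prop) in category_has


def infer_has(individual, prop):
    """Reasoning: chain a membership fact with a general statement."""
    return any(
        who == individual and (category, prop) in category_has
        for who, category in is_a
    )


print(lookup_is_a("Miles Davis", "Jazz Musician"))                     # True
print(lookup_category_has("Jazz Musician", "plays instrument well"))  # True
print(infer_has("Miles Davis", "plays instrument well"))              # True
```

The first two questions are answered by a single membership test. The third is stored nowhere and has to be derived by combining the two stored items.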

The above are all related. For example, both learning and reasoning clearly involve retrieval.
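As a rough illustration of how learning and retrieval interact, the sketch below classifies each new fact under its predicate when it is stored, so that duplicates are detected and later retrieval does not have to scan unrelated facts. The `KnowledgeBase` class and its method names are hypothetical, and indexing by predicate is just one possible classification scheme.

```python
from collections import defaultdict


class KnowledgeBase:
    """Hypothetical store that indexes facts by predicate as they are added."""

    def __init__(self):
        self.by_predicate = defaultdict(set)

    def learn(self, predicate, subject):
        """Classify the fact under its predicate; return False if already known."""
        if subject in self.by_predicate[predicate]:
            return False  # redundant fact, nothing new is stored
        self.by_predicate[predicate].add(subject)
        return True

    def retrieve(self, predicate):
        """Return the subjects stored under one predicate, in sorted order."""
        return sorted(self.by_predicate.get(predicate, ()))


kb = KnowledgeBase()
kb.learn("brass_instrument", "trumpet")
kb.learn("has_strings", "guitar")
duplicate_added = kb.learn("has_strings", "guitar")

print(duplicate_added)             # False
print(kb.retrieve("has_strings"))  # ['guitar']
```

A flat list of facts would hold the same knowledge, but every retrieval and every redundancy check would then have to scan the whole list.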

Properties for Knowledge Representation Systems

The following properties should be possessed by a knowledge representation system.

Representational Adequacy

-- the ability to represent the required knowledge;

Inferential Adequacy

-- the ability to manipulate the knowledge represented to produce new knowledge corresponding to that inferred from the original;

Inferential Efficiency

-- the ability to direct the inferential mechanisms into the most productive directions by storing appropriate guides;

Acquisitional Efficiency

-- the ability to acquire new knowledge using automatic methods wherever possible rather than relying on human intervention.

To date, no single system optimises all of the above.